By Security News

July 8, 2024

Overview

The SonicWall Capture Labs threat research team became aware of an XML External Entity Reference vulnerability affecting Adobe Commerce and Magento Open Source. It is identified as CVE-2024-34102 and given a critical CVSSv3 score of 9.8. Labeled as an Improper Restriction of XML External Entity Reference (‘XXE’) vulnerability and categorized as CWE-611, this vulnerability allows an attacker unauthorized access to private files, such as those containing passwords. Successful exploitation could lead to arbitrary code execution, security feature bypass, and privilege escalation.

A proof of concept is publicly available on GitHub. Adobe Commerce versions 2.4.7, 2.4.6-p5, 2.4.5-p7, 2.4.4-p8, and earlier and Magento Open-Source versions 2.4.7, 2.4.6-p5, 2.4.5-p7, 2.4.4-p8, and earlier are vulnerable. Although Magento Open Source is popular mainly for dev environments, according to Shodan and FOFA, up to 50k exposed Adobe Commerce with Magento template are running.

Technical Overview

Magento (Adobe Commerce) is a built-in PHP platform that helps programmers create eCommerce websites and sell online. It is an HTTP PHP server application. Such applications usually have two global entry points: the User Interface and the API. Magento uses REST API, GraphQL, and SOAP.

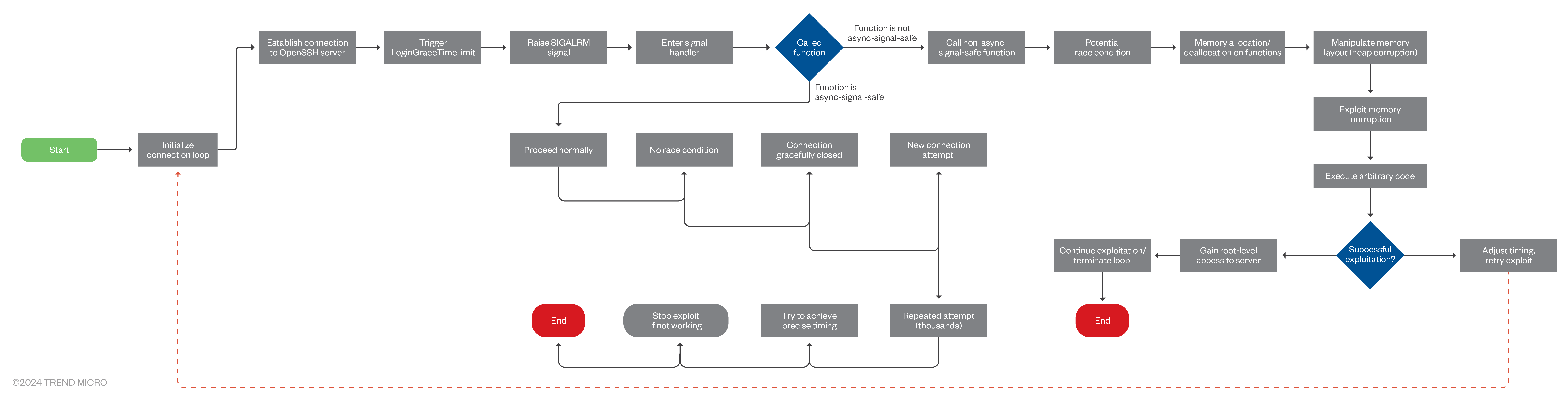

Attackers can leverage this vulnerability to gain unauthorized admin access to REST API, GraphQL API, or SOAP API, leading to the disclosure of confidential data, denial of service, server-side request forgery (SSRF), port scanning from the perspective of the machine where the parser is located, and complete compromise of affected systems. This vulnerability poses a significant risk due to its ability to exfiltrate sensitive files, such as app/etc/env.php, containing cryptographic keys used for authentication, as shown in Figure 1. This key is generated during Magento 2 installation process. Unauthenticated actors can utilize this key to forge administrator tokens and manipulate Magento’s APIs as privileged users.

Figure 1: app/etc/env.php

The vulnerability is due to improper handling of nested deserialization in Adobe Commerce and Magento. This allows attackers to exploit XML External Entities (XXE) during deserialization, potentially allowing remote code execution. Unauthorized attackers can craft malicious JSON payloads that represent objects with unintended properties or behaviors when deserialized by the application.

Triggering the Vulnerability

XML External Entities (XXE) attack technique takes advantage of XML’s feature of dynamically building documents during processing. An XML message can provide data explicitly or point to a URI where the data exists. In the attack technique, external entities may replace the entity value with malicious data, alternate referrals, or compromise the security of the data the server/XML application has access to.

In the example below, the attacker takes advantage of an XML Parser’s local server access privileges to compromise local data:

- The sample application expects XML input with a parameter called “username.” This parameter is later embedded in the application’s output.

- The application typically invokes an XML parser to parse the XML input.

- The XML parser expands the entity “test” into its full text from the entity definition provided in the URL. Here, the actual attack takes place.

- The application embeds the input (parameter “username,” which contains the file) in the web service response.

- The web service echoes back the data.

Attackers may also use External Entities to have the web services server download malicious code or content to the server for use in secondary or follow-on attacks. Other examples wherein sensitive files can be disclosed are shown in Figure 2.

Figure 2: Disclosing targeted files.

Exploiting the Vulnerability

A crafted POST request to a vulnerable Adobe instance with an enabled Magento template is the necessary and sufficient condition to exploit the issue. An attacker only needs to be able to access the instance remotely, which could be over the Internet or a local network. A working PoC with a crafted POST query aids in exploiting this vulnerability. Figure 4 shows a demonstration of exploitation leveraging the publicly available PoC.

Exploiting CVE-2024-34102, steps are enumerated below, which will exfiltrate the contents of the system’s password file from the target server.

- Create a DTD file (dtd.xml) on the attacker’s machine. This file includes entities that will read and encode the system’s password file, then send it to your endpoint.

- Host the dtd.xml file on the attacker’s machine, accessible via HTTP on a random port.

- Send the malicious payload via a sample curl request to the vulnerable Magento instance, as shown in Figure 3. The payload includes a specially crafted XML payload referencing the DTD file hosted on the attacker’s machine.

- The XML parser in Magento will process the DTD file, triggering the exfiltration of the system’s password file as shown in Figure 4.

- Lastly, observe your endpoint to capture and decode the exfiltrated data.

Figure 3: CVE-2024-34102 attack request

00:00

00:15

Figure 4: CVE-2024-34102 Exploitation

Out of the 50k exposed Magento instances in the wild, multiple events were observed wherein attackers leveraged this vulnerability, as only 25% of instances have been updated since the vulnerability was exploited in the wild. According to Sansec analysis, CVE-2024-34102 can be chained with other vulnerabilities, such as the PHP filter chains exploit (CVE-2024-2961), leading to remote code execution (RCE).

SonicWall Protections

To ensure SonicWall customers are prepared for any exploitation that may occur due to this vulnerability, the following signatures have been released:

- IPS: 4462 – Adobe Commerce XXE Injection

Remediation Recommendations

Considering the severe consequences of this vulnerability and the trend of nefarious activists trying to leverage the exploit in the wild, users are strongly encouraged to upgrade their instances, according to Adobe advisory, to address the vulnerability.

Relevant Links

Source :

https://blog.sonicwall.com/en-us/2024/07/adobe-commerce-unauthorized-xxe-vulnerability/