By: Greg Young – Trendmicro

August 03, 2023


The US Securities and Exchange Commission (SEC) recently adopted rules regarding mandatory cybersecurity disclosure. Explore what this announcement means for you and your organization.

On July 26, 2023, the US Securities and Exchange Commission (SEC) adopted rules regarding mandatory cybersecurity disclosure. What does this mean for you and your organization? As I understand them, here are the major takeaways that cybersecurity and business leaders need to know:

Who does this apply to?

The rules apply only to SEC registrants, i.e., companies that file documents with the US SEC. Not surprisingly, the scope isn’t limited to attacks on assets located within the US, so incidents affecting SEC registrants’ assets in other countries are in scope. Equally unsurprisingly, the scope does not include the government, companies not subject to SEC reporting (such as privately held companies), or other organizations.

Breach notification for these other organizations falls under separate compliance regimes, which will hopefully be harmonized or unified with the SEC reporting to some degree at some point.

Advice for security leaders: be aware that these new rules could require “double reporting,” such as for publicly traded critical infrastructure companies. Having multiple compliance regimes, however, is not new for cybersecurity.

What are the general disclosure requirements?

Some pundits have said “four days after an incident” but that’s not quite correct. The SEC says that “material breaches” must be reported “four business days after a registrant determines that a cybersecurity incident is material.”

We’ve hit the first squishy bit: materiality. Directing companies to disclose material events shouldn’t be necessary, yet there’s a mixed record of companies making materiality determinations as part of operating as a public company. And what kind of cybersecurity incident would be likely to be important to a reasonable investor?

We’ve seen giant breaches that paradoxically did not move stock prices, and minor breaches that did the opposite. I’m clearly on the side of compliance and disclosure, but I recognize it is a gray area. Recently we saw some companies that had the MOVEit vulnerability exploited but had no data loss. Should they report? In some cases, though, the cost of their response to the vulnerability ran into the millions: how about then? I expect and hope there will be further guidance.

Advice for security leaders: monitor the breach investigation and monitor the analysis of materiality. Security leaders won’t often make that call but should give guidance and continuous updates to the CxOs who are responsible.

The second squishy bit is that the report must be made four business days after determining that the incident is material: not four days after the incident, but after the materiality determination. I understand why it was structured this way: a small indicator of compromise must be followed up before the scope and nature of a breach can be understood, including whether a breach has occurred at all. But this does leave a window for some of the foot-dragging on disclosure we’ve unfortunately seen, including from product companies with vulnerabilities.

Advice for security leaders: make management aware of the four-day reporting requirement and monitor the clock once the material line is crossed or identified.
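To make "monitoring the clock" concrete, here is a minimal Python sketch of the arithmetic, counting four business days forward from the date of the materiality determination. The function names and the `holidays` parameter are hypothetical, and the counting convention is my assumption (the determination date itself is not counted, and only weekends plus any holidays you pass in are skipped). Treat it as a rough planning aid under those assumptions, not as a statement of how the SEC counts days.

```python
from datetime import date, timedelta


def add_business_days(start: date, business_days: int,
                      holidays: frozenset = frozenset()) -> date:
    """Return the date `business_days` business days after `start`.

    Assumption: `start` itself is not counted, and a business day is any
    Monday-Friday that is not in the caller-supplied `holidays` set.
    """
    if business_days < 0:
        raise ValueError("business_days must be non-negative")
    current = start
    remaining = business_days
    while remaining > 0:
        current += timedelta(days=1)
        # weekday() is 0-4 for Monday-Friday, 5-6 for the weekend
        if current.weekday() < 5 and current not in holidays:
            remaining -= 1
    return current


def disclosure_deadline(materiality_determined_on: date,
                        holidays: frozenset = frozenset()) -> date:
    """Hypothetical helper: four business days after the materiality call."""
    return add_business_days(materiality_determined_on, 4, holidays)


# Determination on Thursday, August 3, 2023, with no holidays in the window
print(disclosure_deadline(date(2023, 8, 3)))  # 2023-08-09

# Determination on Thursday, August 31, 2023, skipping Labor Day (Sept 4)
labor_day = frozenset({date(2023, 9, 4)})
print(disclosure_deadline(date(2023, 8, 31), labor_day))  # 2023-09-07
```

The key input is the date materiality was determined, not the date of the incident, which is why recording that determination date carefully matters as much as the calculation itself.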

Are there extensions?

There are, but not because you need more time. Instead, “The disclosure may be delayed if the United States Attorney General determines that immediate disclosure would pose a substantial risk to national security or public safety and notifies the Commission of such determination in writing.” Note that it specifically states that the Attorney General (AG) makes that determination, and the AG communicates this to the SEC. There could be some delegation of this authority within the Department of Justice in the future, but today it is the AG.

How does it compare to other countries and compliance regimes?

Breach and incident reporting and disclosure are not new, and the concept of reporting material events is already commonplace around the world. GDPR breach reporting has a 72-hour window, HHS HIPAA requires notice not later than 60 days and 90 days to individuals affected, and the UK Financial Conduct Authority (FCA) has breach reporting requirements. Canada has draft legislation in Bill C-26 that looks at mandatory reporting through the lens of critical industries, which includes verticals such as banking and telecoms but not public companies. Many of the world’s financial oversight bodies do not require breach notification for public companies in the exchanges they are responsible for.

Advice to security leaders: consider the new SEC rules as clarification and amplification of existing reporting requirements for material events rather than a new regime or something that is harsher or different to other geographies.

Is breach reporting the only new rule?

No, I’ve only focused on incident reporting in this post. There are a few more. The two most noteworthy ones are:

- Regulation S-K Item 106, requiring registrants to “describe their processes, if any, for assessing, identifying, and managing material risks from cybersecurity threats, as well as the material effects or reasonably likely material effects of risks from cybersecurity threats and previous cybersecurity incidents.”

- Also specified is that annual 10-Ks “describe the board of directors’ oversight of risks from cybersecurity threats and management’s role and expertise in assessing and managing material risks from cybersecurity threats.”

Bottom line

SEC mandatory reporting for material cybersecurity events was already a requirement under the general reporting requirements; however, the timelines and nature of the reporting are getting real and now have a ticking four-day timer on them.

Stepping back from the rules, the importance of visibility and continuous monitoring is the real takeaway. Time to detection can’t be at the speed of your least experienced analyst. Platform means unified visibility rather than a wall of consoles. Finding and stopping breaches means internal visibility must include a rich array of telemetry, and that telemetry must be continuously monitored.

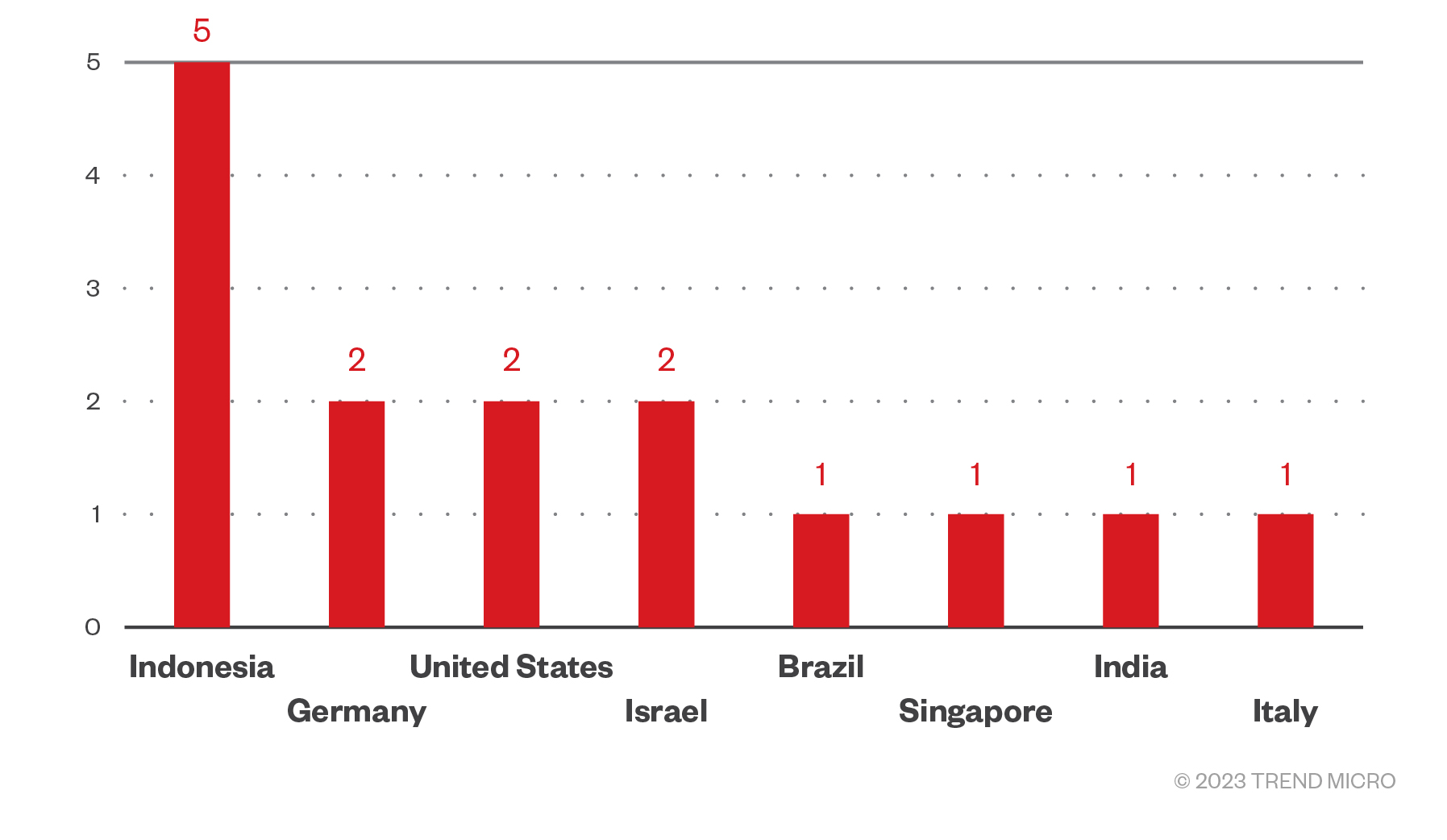

Many SEC registrants have operations outside the US, and that means visibility needs to include threat intelligence that is localized to other geographies. These new SEC rules show more than ever that cyber risk is business risk.

To learn more about cyber risk management, check out the following resources:

- Trend Vision One™ – A Cybersecurity Consolidation Path

- Decrypting Cyber Risk Quantification

- Attack Surface Management Strategies

Source:

https://www.trendmicro.com/en_us/research/23/h/sec-cybersecurity-rules-2023.html