Do you want to know what’s new in Google Analytics 4? How is GA4 different from Universal Analytics?

There’s a lot that’s changed in the new Google Analytics 4 platform including the navigation. Google has added new features and removed a number of reports you’re familiar with. And that means we’ll need to relearn the platform.

In this guide, we’ll detail the differences between Google Analytics 4 (GA4) vs. Universal Analytics (UA) so that you’re prepared to make the switch.

If you haven’t already switched to Google Analytics 4, we have an easy step-by-step guide you can follow: How to Set Up Google Analytics 4 in WordPress.

What’s New Only in Google Analytics 4?

In this section, we’re detailing the things that are new in GA4 that aren’t present in Universal Analytics at all. A little later, we’ll go into depth about all the changes you need to know about.

- Creating and Editing Events: GA4 brings about a revolutionary change in the way you track events. You can create a custom event and modify events right inside your GA4 property. This isn’t possible with Universal Analytics unless you write code to create a custom event.

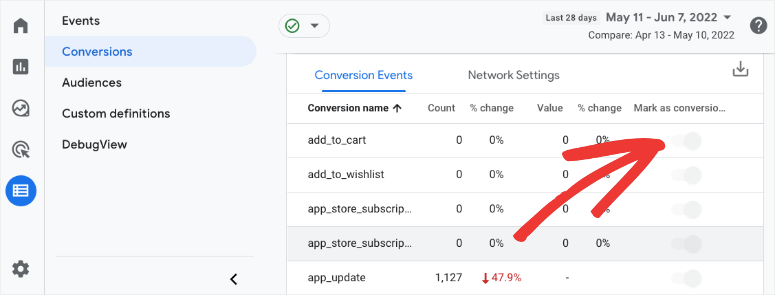

- Conversion Events: Conversion goals are being replaced with conversion events. You can simply mark or unmark an event to start tracking it as a conversion. There’s an easy toggle switch to do this. GA4 even lets you create conversion events ahead of time before the event takes place.

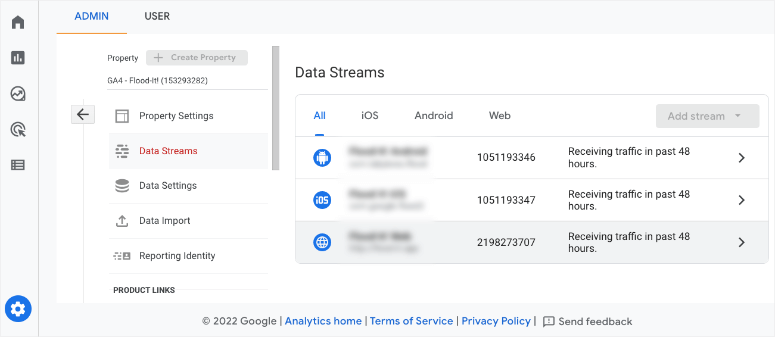

- Data Streams: UA lets you connect your website’s URL to a view. These views let you filter data. So for instance, you can create a filter in a UA view to exclude certain IP addresses from reports. GA4 uses data streams instead of views.

- Data filters: Now you can add data filters to include or exclude internal and developer traffic from your GA4 reports.

- Google Analytics Intelligence: You can delete search queries from your search history to fine-tune your recommendations.

- Explorations and Templates: There’s a new Explore item in the menu that takes you to the Explorations page and Template gallery. Explorations give you a deeper understanding of your data. And there are report templates that you can use.

- Debug View: There’s a built-in visual debugging tool which is awesome news for developers and business owners. With this mode, you can get a real-time view of events displayed on a vertical timeline graph. You can see events for the past 30 minutes as well as the past 60 seconds.

- BigQuery linking: You can now link your GA4 account with your BigQuery account. This will let you run business intelligence tasks on your analytics property using BigQuery tools.

While this is what’s unique to GA4, there are a lot more changes than this. But first, let’s take a look at what’s gone from the Universal Analytics platform that we’re all familiar with.

What’s Missing in Google Analytics 4?

Google Analytics 4 has done away with some of the old concepts. These include:

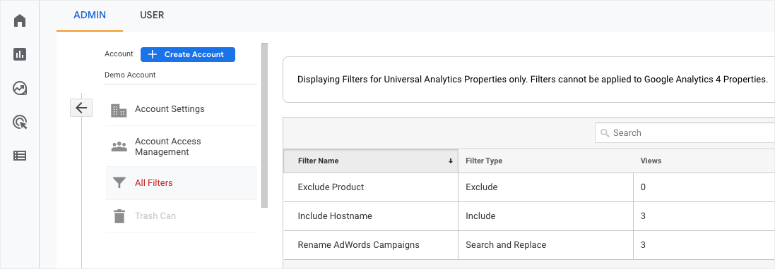

- Views and Filters: As we mentioned, GA4 is now using Data Streams, and we explain this in depth a bit later. So you won’t be able to create a view and related filters. Once you convert your UA property to GA4, you’ll be able to access a read-only list of UA filters under Admin » Account » All Filters.

- Customization (menu): UA properties have a customization menu for options to create dashboards, create custom reports, save existing reports, and create custom alerts. Below are the UA customization options, along with their GA4 equivalent.

- Dashboards: At the time of writing this, there isn’t a way to create a custom GA4 dashboard.

- Custom reports: GA4 has the Explorations page instead where you can create custom reports.

- Saved reports: When you create a report in Explorations, it is automatically saved for you.

- Custom alerts: Inside custom Insights, which is a new feature in GA4, you can set custom alerts.

- Google Search Console linking: There isn’t a way to link Google Search Console with a GA4 property at the time of writing.

- Bounce rate: One of the most tracked metrics – the bounce rate – is gone. It’s likely that this has been replaced with Engagement Metrics.

- Conversion Goals: In UA, you could create conversion goals under Views. But since views are gone, so are conversion goals. However, you can create conversion events to essentially track the same thing.

Now that you know what’s new and what’s missing in GA4, we’ll take you through an in-depth tour of the new GA4 platform.

Google Analytics 4 vs Universal Analytics

Below, we’ll be covering the main differences between GA4 and UA. We’ve created this table of contents for you to easily navigate the comparison guide:

- New Mobile Analytics

- Easy User ID Tracking

- Navigational Menu

- Measurement ID vs Tracking ID

- Data Streams vs Views

- Events vs. Hit Types

- No Bounce Rate

- No Custom Reports

Feel free to use the quick links to skip ahead to the section that interests you the most.

New Mobile Analytics

A major difference between GA4 and UA is that the new GA4 platform will also support mobile app analytics.

In fact, it was originally called “Mobile + Web”.

UA only tracked web analytics so it was difficult for businesses with apps to get an accurate outlook on their performance and digital marketing efforts.

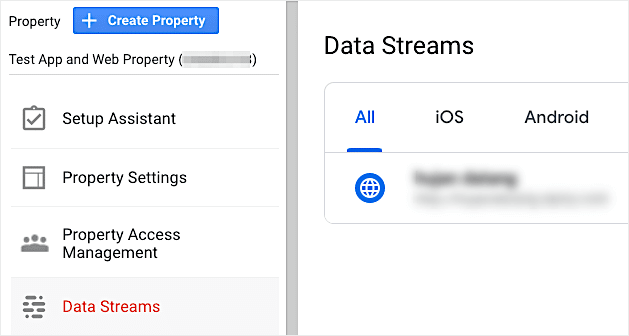

Now with the GA4 data model, you’ll be able to track both your website and app. You can set up a data stream for Android and iOS.

There’s also added functionality to create custom campaigns to collect information about which mediums/referrals are sending you the most traffic. This will show you where your campaigns get the most traction so that you can optimize your strategies in the future.
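To make the campaign idea concrete, here is a minimal sketch of tagging a link so Analytics can attribute the visit to a campaign. It assumes the commonly used `utm_source`, `utm_medium`, and `utm_campaign` query parameters, which this guide doesn’t cover in detail. The helper name `add_campaign_params` and the example values are hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_campaign_params(url: str, source: str, medium: str, campaign: str) -> str:
    """Return `url` with utm_source, utm_medium and utm_campaign appended."""
    parts = urlsplit(url)
    # Keep any query parameters the link already has.
    query = parse_qsl(parts.query, keep_blank_values=True)
    query += [
        ("utm_source", source),
        ("utm_medium", medium),
        ("utm_campaign", campaign),
    ]
    return urlunsplit(parts._replace(query=urlencode(query)))


# Hypothetical newsletter link for a spring promotion.
tagged = add_campaign_params(
    "https://example.com/pricing", "newsletter", "email", "spring_sale"
)
print(tagged)
```

You would then share the tagged link in the campaign itself, so each visit arrives with its source and medium attached.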

Easy User ID Tracking

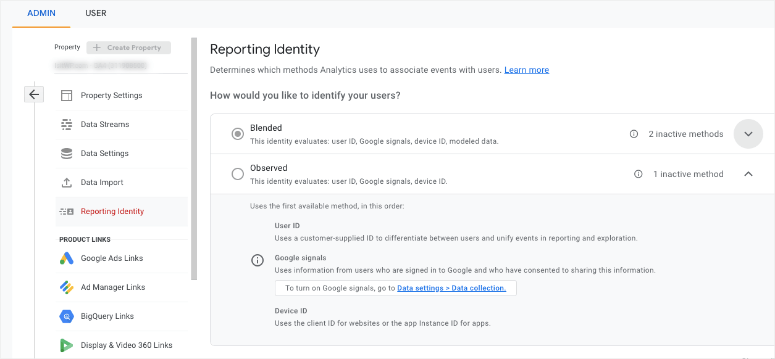

Turning on user ID tracking in UA was quite a task. But that’s all been simplified in GA4 with the new measurement model. You simply need to navigate to Admin » Property Settings » Reporting Identity tab.

You can choose between Blended and Observed mode. Select the one you want and save your changes. That’s it.

New Navigational Menu

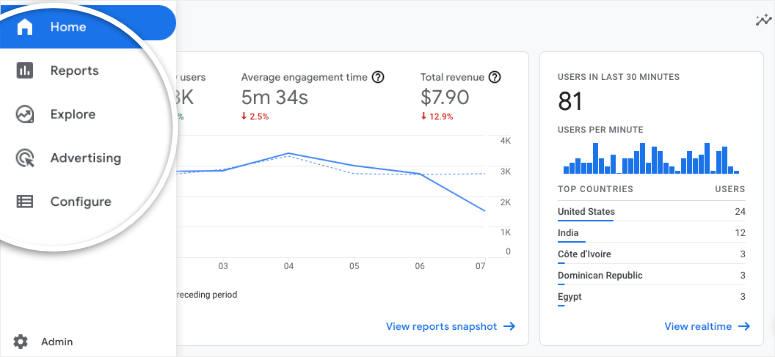

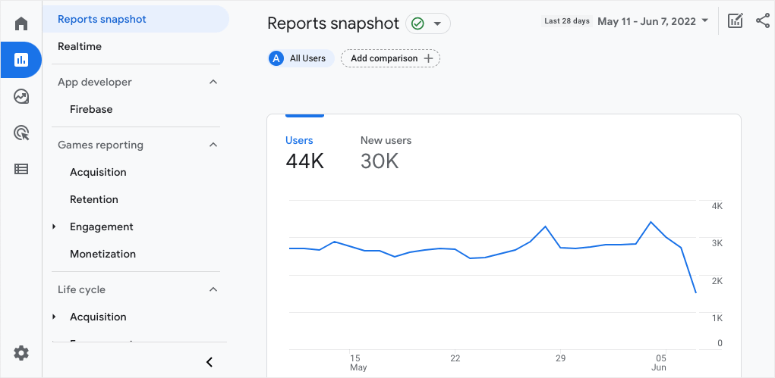

In GA4, the navigation menu is still on the left, so the reporting interface feels familiar. However, quite a few menu items have changed.

First, there are only 4 high-level menu items right now. Google may add more as the platform is further developed.

Next, each menu item has a collapsed view. You can expand each item by clicking on it.

Now when you click on a submenu item, the menu expands to reveal more submenus.

In GA4, you’ll see familiar menu items you use for SEO and other purposes but in different locations. Here are the notable changes:

- Realtime is under Reports

- Audience(s) is under Configure

- Acquisition is under Reports » Life cycle

- Conversions is under Configure

GA4 also comes with completely new menu items as listed below:

- Reports snapshot

- Engagement

- Monetization

- Retention

- Library

- Custom definitions

- DebugView

Measurement ID vs Tracking ID

Universal Analytics uses a Tracking ID that has a capital UA, a hyphen, a 7-digit tracking code followed by another hyphen, and a number. Like this: UA-1234567-1.

The last number is a sequential number starting from 1 that maps to a specific property in your Google Analytics account. So if you set up a second Google Analytics property, the new code will change to UA-1234567-2.
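If you ever need to pull the account number and property index out of a Tracking ID in a script, the format described above can be sketched like this. The names `TrackingId` and `parse_tracking_id` are our own, and the pattern accepts any number of digits rather than exactly seven, which is an assumption on our part.

```python
import re
from typing import NamedTuple, Optional


class TrackingId(NamedTuple):
    account: str          # the digits between the two hyphens
    property_index: int   # the sequential property number at the end


# Assumption: "UA-", digits, "-", digits. Digit counts are not enforced.
_UA_PATTERN = re.compile(r"UA-(\d+)-(\d+)")


def parse_tracking_id(value: str) -> Optional[TrackingId]:
    """Split a UA Tracking ID into its parts, or return None if it doesn't match."""
    match = _UA_PATTERN.fullmatch(value)
    if match is None:
        return None
    return TrackingId(account=match.group(1), property_index=int(match.group(2)))


first = parse_tracking_id("UA-1234567-1")
second = parse_tracking_id("UA-1234567-2")
print(first, second)
```

Both IDs share the same account number and differ only in the property index.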

You can find the Tracking ID for a Universal Analytics property under Admin » Property column. Navigate to Property Settings » Tracking ID tab where you can see your UA tracking ID.

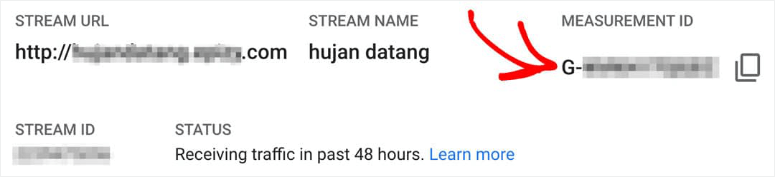

In GA4, you’ll see a Measurement ID instead of a Tracking ID. This starts with a capital G, a hyphen followed by a 10-character code.

It would look like this: G-SV0GT32HNZ.
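A quick way to check which kind of ID you’re holding is a pattern match. This sketch assumes the shape described above (a capital G, a hyphen, and 10 uppercase letters or digits). Google doesn’t promise that shape will never change, so treat it as a sanity check only. The function name is hypothetical.

```python
import re

# Assumption: "G-" followed by exactly 10 uppercase letters or digits.
_GA4_PATTERN = re.compile(r"G-[A-Z0-9]{10}")


def looks_like_measurement_id(value: str) -> bool:
    """Return True if `value` has the shape of a GA4 Measurement ID."""
    return _GA4_PATTERN.fullmatch(value) is not None


for candidate in ("G-SV0GT32HNZ", "UA-1234567-1"):
    print(candidate, looks_like_measurement_id(candidate))
```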

To find your GA4 Measurement ID, go to Admin » Property » Data Streams. Click on a data stream. You’ll see your Measurement ID in the stream details after the Stream URL and Stream Name.

Data Streams vs Views

In UA, you could connect your website’s URL to a view. UA views are mostly used to filter data. So for instance, you can create a filter in a UA view to exclude certain IP addresses from reports.

GA4 uses data streams instead. You’ll need to connect your website’s URL to a data stream.

But don’t be mistaken, they are not the same as views.

Also, you can’t create a UA-style view filter in GA4. In case your property was converted from UA to GA4, then you can find a read-only list of UA filters under Admin » Account » All Filters.

Now Google defines a data stream as:

“A flow of data from your website or app to Analytics. There are 3 types of data stream: Web (for websites), iOS (for iOS apps), and Android (for Android apps).”

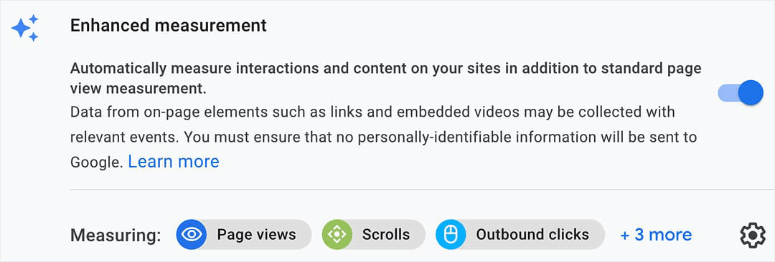

You can use your data stream to find your measurement ID and global site tag code snippet. You can also enable enhanced measurements such as your page views, scrolls, and outbound clicks.

In a data stream, you can do the following:

- Set up a list of domains for cross-domain tracking

- Create a set of rules for defining internal traffic

- Put together a list of domains to exclude from tracking
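To show what an internal traffic rule boils down to, here is a small sketch that checks a visitor’s IP address against a list of ranges. This is not how GA4 implements it. The ranges, the `hits` records, and the function name are all made up for illustration.

```python
from ipaddress import ip_address, ip_network

# Hypothetical internal ranges; swap in your own office or VPN ranges.
INTERNAL_NETWORKS = [
    ip_network("203.0.113.0/24"),
    ip_network("198.51.100.7/32"),
]


def is_internal(ip: str) -> bool:
    """Return True if `ip` falls inside any of the internal ranges."""
    addr = ip_address(ip)
    return any(addr in net for net in INTERNAL_NETWORKS)


# Made-up hit records, only to show the filtering step.
hits = [
    {"ip": "203.0.113.42", "page": "/pricing"},
    {"ip": "192.0.2.10", "page": "/"},
]
external_hits = [hit for hit in hits if not is_internal(hit["ip"])]
print(external_hits)
```

The same check works for a single address (a `/32` range) or a whole office network.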

Data streams will make a lot of things easier. But there are 2 things that you need to be aware of. First, once you create a data stream, there’s no way to edit it. And if you delete a data stream, you can’t undo this action.

Events vs. Hit Types

UA tracks data by hit types. A hit is essentially an interaction that results in data being sent to Analytics. This includes page hits, event hits, eCommerce hits, and social interaction hits.

GA4 moves away from the concept of hit types. Instead, it’s event-based, meaning every interaction is captured as an event. This means page views, events, eCommerce transactions, social interactions, and app views are all captured as events.
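One way to picture the shift is as a translation step where every kind of UA hit turns into a named event with parameters. The sketch below is purely illustrative. The input dictionary keys are hypothetical, and the mapping to the GA4 event names `page_view`, `purchase`, and `share` is our own simplification, not an official migration rule.

```python
from typing import Any, Dict


def hit_to_event(hit: Dict[str, Any]) -> Dict[str, Any]:
    """Translate a UA-style hit (hypothetical shape) into a GA4-style event."""
    hit_type = hit["type"]
    if hit_type == "pageview":
        return {"name": "page_view", "params": {"page_location": hit["page"]}}
    if hit_type == "event":
        # Use the UA event action as the GA4 event name, e.g. "Menu Click" -> "menu_click".
        name = hit["action"].strip().lower().replace(" ", "_")
        return {"name": name, "params": {"category": hit["category"]}}
    if hit_type == "transaction":
        return {
            "name": "purchase",
            "params": {"transaction_id": hit["id"], "value": hit["revenue"]},
        }
    if hit_type == "social":
        return {"name": "share", "params": {"method": hit["network"]}}
    raise ValueError(f"unsupported hit type: {hit_type!r}")


print(hit_to_event({"type": "pageview", "page": "https://example.com/"}))
print(hit_to_event({"type": "event", "category": "Navigation", "action": "Menu Click"}))
```

Whatever the original hit type was, the result always has the same shape: a name plus a set of parameters.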

There’s also no option for creating conversion goals. But GA4 lets you flag or mark an event as a conversion with the flip of a toggle switch.

This is essentially the same thing as creating a conversion goal in Universal Analytics. You can also create new conversion events ahead of time before those events actually take place.

In GA4, Google organizes events into 4 categories and recommends that you use them in this order:

1. Automatically collected

In the first event category, there’s no option to turn on any setting for tracking events so you don’t need to activate anything here. Google will automatically collect data on these events:

- first_visit – the first visit to a website or Android instant app

- session_start – the time when a visitor opens a web page or app

- user_engagement – when a session lasts longer than 10 seconds or had 1 or more conversions or had 2 or more page views

Keep in mind that we’re only at the start of GA4. As Google’s machine-learning technology advances, more automatically collected events may be added as the platform progresses.
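The user_engagement rule listed above can be written as a simple condition. This is a sketch of the rule exactly as this guide describes it, not Google’s actual implementation. The `Session` class and its field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Session:
    duration_seconds: float
    conversions: int
    page_views: int


def is_engaged(session: Session) -> bool:
    """Longer than 10 seconds, OR at least 1 conversion, OR at least 2 page views."""
    return (
        session.duration_seconds > 10
        or session.conversions >= 1
        or session.page_views >= 2
    )


quick_bounce = Session(duration_seconds=4, conversions=0, page_views=1)
short_but_converted = Session(duration_seconds=4, conversions=1, page_views=1)
print(is_engaged(quick_bounce), is_engaged(short_but_converted))
```

Any one of the three conditions is enough, so a very short visit still counts if it ends in a conversion.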

2. Enhanced measurement

In this section, you don’t need to write any code but there are settings to turn on enhanced measurements. This will give you an extra set of automatically collected events.

To enable this data collection, you need to turn on the Enhanced measurement setting in your Data Stream.

Then you’ll see more enhanced measurement events that include:

- page_view: a page-load in the browser or a browser history state change

- click: a click on an outbound link that goes to an external site

- file_download: a click that triggers a file download

- scroll: the first time a visitor scrolls to the bottom of a page

3. Recommended

These GA4 events are recommended but aren’t automatically collected in GA4 so you’ll need to enable them if you want to track them.

We suggest you check out what is in the recommended events and turn on tracking for what you need. This can include signups, logins, and purchases.

Before we move to custom events, note that if the event you want to track doesn’t appear in any of these 3 categories (automatically collected, enhanced measurement, and recommended), you should ideally create a custom event for it.
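That decision can be sketched as a lookup where you check the three built-in categories in order and fall back to custom. The sets below only contain the event names mentioned in this guide, plus `sign_up`, `login`, and `purchase` as examples of recommended events. They are not complete lists, and the function name is our own.

```python
# Partial lists, limited to the events named in this guide.
AUTOMATICALLY_COLLECTED = {"first_visit", "session_start", "user_engagement"}
ENHANCED_MEASUREMENT = {"page_view", "click", "file_download", "scroll"}
RECOMMENDED = {"sign_up", "login", "purchase"}


def event_category(name: str) -> str:
    """Return the first category that contains `name`, else 'custom'."""
    if name in AUTOMATICALLY_COLLECTED:
        return "automatically collected"
    if name in ENHANCED_MEASUREMENT:
        return "enhanced measurement"
    if name in RECOMMENDED:
        return "recommended"
    return "custom"


for event_name in ("session_start", "scroll", "login", "menu_click"):
    print(event_name, "->", event_category(event_name))
```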

4. Custom

Custom events let you set up tracking for any event that doesn’t fall into the above 3 categories. You can create and modify your events. So for instance, you can create custom events to track menu clicks.

You can design and write custom code to enable tracking for the event you want. But there is no guarantee that Google will support your custom metrics and events.
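As one example of what custom code can look like, here is a sketch that builds a request for a `menu_click` event using the GA4 Measurement Protocol, which this guide doesn’t otherwise cover. It only prepares the URL and JSON body and doesn’t send anything. The API secret and client ID are placeholders, the function name is hypothetical, and the event-name check reflects our understanding of Google’s naming rules (start with a letter; letters, digits, and underscores only; 40 characters at most).

```python
import json
import re
from typing import Any, Dict, Tuple
from urllib.parse import urlencode

MP_ENDPOINT = "https://www.google-analytics.com/mp/collect"

# Assumed naming rule: a letter first, then letters, digits or underscores, max 40 chars.
_EVENT_NAME = re.compile(r"[A-Za-z][A-Za-z0-9_]{0,39}")


def build_custom_event_request(
    measurement_id: str,
    api_secret: str,
    client_id: str,
    name: str,
    params: Dict[str, Any],
) -> Tuple[str, str]:
    """Return (url, json_body) for a single custom event. Nothing is sent."""
    if _EVENT_NAME.fullmatch(name) is None:
        raise ValueError(f"invalid event name: {name!r}")
    query = urlencode({"measurement_id": measurement_id, "api_secret": api_secret})
    body = json.dumps(
        {"client_id": client_id, "events": [{"name": name, "params": params}]}
    )
    return f"{MP_ENDPOINT}?{query}", body


# Placeholder credentials; use your own Measurement ID and API secret.
url, body = build_custom_event_request(
    "G-SV0GT32HNZ", "SECRET", "555.777", "menu_click", {"menu_item": "Pricing"}
)
print(url)
print(body)
```

To actually record the event, you would POST the body to the URL with an HTTP client of your choice.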

No Bounce Rate

The bounce rate metric has vanished! It’s been suggested that Google wants to focus on users that stay on your website rather than the ones that leave.

So this has likely been replaced with engagement rate metrics to collect more data on user interactions and engaged sessions.
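For reference, engagement rate is simply the share of sessions that count as engaged. Here is a minimal sketch with made-up numbers. The function name is our own.

```python
def engagement_rate(engaged_sessions: int, total_sessions: int) -> float:
    """Engaged sessions divided by all sessions (0.0 when there are no sessions)."""
    if total_sessions == 0:
        return 0.0
    return engaged_sessions / total_sessions


# Hypothetical numbers: 3 of 4 sessions were engaged.
rate = engagement_rate(engaged_sessions=3, total_sessions=4)
print(f"{rate:.0%}")  # prints 75%
```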

No Custom Reports

UA properties have a customization menu for options to create dashboards, create custom reports, save existing reports, and create custom alerts.

A lot of this has changed in GA4. To make it easier for you to understand, here are the UA customization options and their GA4 equivalents:

- Custom reports can be found in the Explorations page.

- Saved reports are automatically created when you run an Exploration.

- Custom alerts can be set up inside custom Insights from the GA4 home page.

One more thing to note is that you also won’t find a way to link Google Search Console with a GA4 property (at the time of writing). And that’s all the key differences between Universal Analytics and Google Analytics 4.

Now you may be wondering whether you HAVE TO make the switch to GA4. A lot of our users have been asking us this question so we’ll tell you quickly what you need to do.

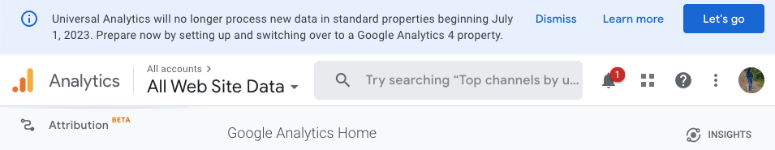

Do I Have To Switch to GA4?

Google will retire Universal Analytics in July 2023. You’ll have access to your UA data for some time but all new data will flow into GA4. If you have a UA property set up, you’ll see this warning in your dashboard:

So you have to set up a GA4 property sooner or later and we recommend that you do it sooner. This is because your UA data won’t be transferred to GA4. You have to start afresh.

You can set up your GA4 property now and let it collect data. In the meantime, you can continue to use Universal Analytics and use the time to learn the new GA4 platform. Then when we’re all forced to make the switch, you’ll have plenty of historical data in your GA4 property.

If you haven’t set up your Google Analytics 4 property yet, we’ve compiled an easy step-by-step guide for you: How to Set Up Google Analytics 4 in WordPress.

Want to skip the guide and use a tool? Then MonsterInsights is the best tool to set up GA4. It even lets you create dual tracking profiles so you can have both UA and GA4 running simultaneously.

After setting up GA4, you can go deeper into your data with these guides:

- How to Set Up WordPress Form Tracking in Google Analytics

- How to Track and Monitor User Activity in WordPress

- How to Track User Journey Before a User Submits a Form

These posts will help you track your users and their activity on your site so that you can get more valuable insights and analytics data to improve your site’s performance.

Source :

https://www.isitwp.com/google-analytics-4-vs-universal-analytics/
