Researchers from Monash, Swinburne and RMIT universities have successfully tested and recorded the fastest internet data speed ever achieved from a single optical chip, a record for both Australia and the world. The speed is enough to download 1000 high-definition movies in a split second.
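The "1000 movies in a split second" comparison can be sanity-checked with simple arithmetic. The sketch below assumes a high-definition movie of about 5 GB. That size is a hypothetical figure chosen for illustration, since the article does not state one. The function name `download_time_s` is also illustrative.

```python
# Rough check of the "1000 HD movies in a split second" comparison.
# ASSUMPTION: an HD movie is about 5 GB; the article does not give a file size.
LINE_RATE_BPS = 44.2e12     # 44.2 Tbps, as reported
MOVIE_SIZE_BYTES = 5e9      # hypothetical 5 GB per HD movie
NUM_MOVIES = 1000


def download_time_s(num_movies: int, movie_bytes: float, rate_bps: float) -> float:
    """Seconds needed to move num_movies files of movie_bytes each at rate_bps."""
    total_bits = num_movies * movie_bytes * 8  # bytes -> bits
    return total_bits / rate_bps


t = download_time_s(NUM_MOVIES, MOVIE_SIZE_BYTES, LINE_RATE_BPS)
print(f"{t:.2f} s")  # prints "0.90 s"
```

With a larger assumed file size the time grows proportionally. For example, 10 GB movies would take roughly 1.8 seconds.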

Published in the prestigious journal Nature Communications, the findings could not only fast-track the next 25 years of Australia's telecommunications capacity but also see this home-grown technology rolled out across the world.

With the world's internet infrastructure under growing pressure, as recently highlighted by COVID-19 isolation policies, the research team led by Dr Bill Corcoran (Monash), Distinguished Professor Arnan Mitchell (RMIT) and Professor David Moss (Swinburne) achieved a data speed of 44.2 terabits per second (Tbps) from a single light source.

This technology has the capacity to simultaneously support the high-speed internet connections of 1.8 million households in Melbourne, and of billions across the world during peak periods.
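Dividing the reported line rate by the number of households shows what each connection would receive. The sketch below assumes that the 44.2 Tbps is shared evenly and that no capacity is lost to overheads. Both are simplifying assumptions that the article does not state.

```python
# Per-household share of the reported 44.2 Tbps.
# ASSUMPTION: the capacity is split evenly, with no protocol or coding overhead.
LINE_RATE_BPS = 44.2e12   # reported line rate
HOUSEHOLDS = 1.8e6        # 1.8 million Melbourne households


def per_household_mbps(rate_bps: float, households: float) -> float:
    """Even share of rate_bps per household, in megabits per second."""
    return rate_bps / households / 1e6


share = per_household_mbps(LINE_RATE_BPS, HOUSEHOLDS)
print(f"{share:.1f} Mbps per household")  # prints "24.6 Mbps per household"
```

Under these assumptions, the figure of 1.8 million households corresponds to roughly 25 Mbps for each connection.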

Demonstrations of this magnitude are usually confined to the laboratory. For this study, however, the researchers achieved these speeds over existing communications infrastructure, which let them load-test the network under real-world conditions.

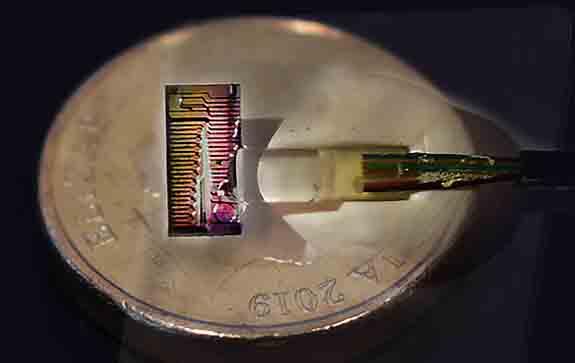

They used a new device that replaces 80 lasers with one single piece of equipment known as a micro-comb, which is smaller and lighter than existing telecommunications hardware. It was planted into and load-tested using existing infrastructure, which mirrors that used by the NBN.

The micro-comb chip over a A$2 coin. This tiny chip produces an infrared rainbow of light, the equivalent of 80 lasers. The ribbon to the right of the image is an array of optical fibres connected to the device. The chip itself measures about 3x5 mm.

This is the first time a micro-comb has been used in a field trial, and the result is the highest data rate yet produced from a single optical chip.

“We’re currently getting a sneak peek of how the infrastructure for the internet will hold up in two to three years’ time, due to the unprecedented number of people using the internet for remote work, socialising and streaming. It’s really showing us that we need to be able to scale the capacity of our internet connections,” says Dr Bill Corcoran, co-lead author of the study and Lecturer in Electrical and Computer Systems Engineering at Monash University.

“What our research demonstrates is the ability for fibres that we already have in the ground, thanks to the NBN project, to be the backbone of communications networks now and in the future. We’ve developed something that is scalable to meet future needs.

“And it’s not just Netflix we’re talking about here – it’s the broader scale of what we use our communication networks for. This data can be used for self-driving cars and future transportation and it can help the medicine, education, finance and e-commerce industries, as well as enable us to read with our grandchildren from kilometres away.”

To demonstrate how optical micro-combs can improve communication systems, researchers installed 76.6 km of ‘dark’ (unused) optical fibre between RMIT’s Melbourne City Campus and Monash University’s Clayton Campus. The fibre was provided by Australia’s Academic and Research Network (AARNet).

The researchers connected the micro-comb, contributed by Swinburne as part of a broad international collaboration, to these fibres. The micro-comb acts like a rainbow made up of hundreds of high-quality infrared lasers from a single chip, and each ‘laser’ can be used as a separate communications channel.

The researchers sent the maximum amount of data down each channel, simulating peak internet usage across 4 THz of bandwidth.
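The reported figures also give rough per-channel numbers. The sketch below assumes 80 equally spaced channels, one for each of the "80 lasers" mentioned in the image caption, with the 4 THz and the 44.2 Tbps shared evenly among them. The article does not describe the real channel plan or modulation format, so these results are illustrative averages only.

```python
# Back-of-envelope channel arithmetic for the 44.2 Tbps result.
# ASSUMPTIONS (not stated in the article): 80 equally spaced channels that
# evenly share the 4 THz of bandwidth and the total line rate.
LINE_RATE_BPS = 44.2e12   # reported line rate
BANDWIDTH_HZ = 4e12       # reported optical bandwidth, 4 THz
NUM_CHANNELS = 80         # "the equivalent of 80 lasers"

channel_spacing_ghz = BANDWIDTH_HZ / NUM_CHANNELS / 1e9
per_channel_gbps = LINE_RATE_BPS / NUM_CHANNELS / 1e9
# Gross spectral efficiency: bits per second carried per hertz of bandwidth,
# before any coding or protocol overhead is subtracted.
spectral_efficiency = LINE_RATE_BPS / BANDWIDTH_HZ

print(f"spacing ~{channel_spacing_ghz:.0f} GHz")                   # spacing ~50 GHz
print(f"per channel ~{per_channel_gbps:.1f} Gbps")                 # per channel ~552.5 Gbps
print(f"spectral efficiency ~{spectral_efficiency:.2f} bit/s/Hz")  # ~11.05 bit/s/Hz
```

The spectral-efficiency line divides the total line rate by the total bandwidth. Any net figure reported in the paper would be lower once overheads are removed.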

Distinguished Professor Mitchell said reaching the peak data speed of 44.2 Tbps showed the potential of existing Australian infrastructure. The project’s future ambition is to scale up current transmitters from hundreds of gigabits per second towards tens of terabits per second without increasing size, weight or cost.

“Long-term, we hope to create integrated photonic chips that could enable this sort of data rate to be achieved across existing optical fibre links with minimal cost,” Distinguished Professor Mitchell says.

“Initially, these would be attractive for ultra-high speed communications between data centres. However, we could imagine this technology becoming sufficiently low cost and compact that it could be deployed for commercial use by the general public in cities across the world.”

Professor Moss, Director of the Optical Sciences Centre at Swinburne, says: “In the 10 years since I co-invented micro-comb chips, they have become an enormously important field of research.

“It is truly exciting to see their capability in ultra-high bandwidth fibre optic telecommunications coming to fruition. This work represents a world record for bandwidth down a single optical fibre from a single chip source, and represents an enormous breakthrough for part of the network which does the heaviest lifting. Micro-combs offer enormous promise for us to meet the world’s insatiable demand for bandwidth.”

To download a copy of the paper, please visit: https://doi.org/10.1038/s41467-020-16265-x