27.07.2023

What Is a HIPAA Audit?

A HIPAA audit is a thorough evaluation conducted to assess a healthcare organization’s compliance with the Health Insurance Portability and Accountability Act (HIPAA) regulations.

The main goal of the audit is to ensure that entities handling protected health information (PHI), such as hospitals, clinics, and health insurers, are adhering to the strict privacy and security standards set forth by HIPAA.

The audit examines various aspects, including privacy practices, data security measures, employee training, and risk management procedures.

By conducting HIPAA audits regularly, organizations can identify potential vulnerabilities, address compliance gaps, and safeguard sensitive patient data, fostering trust and confidentiality within the healthcare industry.

What Will Be Audited?

In a HIPAA audit, numerous aspects of an organization’s operations will be examined to assess compliance with HIPAA. The audit will typically review policies and practices related to the HIPAA Privacy, Security, and Breach Notification Rules, as well as the physical, technical, and administrative safeguards protecting protected health information (PHI) and electronic protected health information (ePHI).

Who Is Eligible for a HIPAA Audit?

HIPAA audits target covered entities and business associates that handle PHI and ePHI. Covered entities include healthcare providers, health plans, and healthcare clearinghouses, while business associates are organizations or individuals that perform functions involving PHI on behalf of covered entities.

How Does The Selection Process Work?

The selection process for HIPAA audits involves multiple triggers. The Department of Health and Human Services (HHS) Office for Civil Rights (OCR) usually initiates audits in response to complaints or breach reports filed against a covered entity or business associate. Complaints can be raised by patients or employees concerning privacy violations or mishandling of PHI.

Additionally, breaches of PHI that meet certain criteria will lead to an audit. The OCR may also conduct follow-up audits for organizations with a history of prior non-compliance. Random audits are rare and typically reserved for larger, established entities due to the OCR’s limited resources.

When Do HIPAA Audits Occur?

The timing of an audit can vary depending on the triggering event. The OCR usually provides advance notice to the organization being audited, informing them of the audit’s purpose, scope, and expected duration. Audits can take several weeks to several months to complete, depending on factors like the organization’s size and complexity.

What Is My Risk of Being Audited?

The risk of being audited for HIPAA compliance varies depending on several factors. Organizations that have previously violated HIPAA, experienced breaches of PHI, or received complaints are at a higher risk of being audited.

To mitigate the risk of an audit, organizations should proactively invest time and effort into maintaining a comprehensive HIPAA compliance program, including regular self-audits and staff training to ensure adherence to HIPAA regulations and safeguard PHI.

How to Be Ready for an Audit in 12 Easy Steps

Whether you’re preparing for a financial, compliance, or HIPAA audit, this step-by-step approach will equip you with the knowledge and strategies needed to ensure a smooth and successful audit process.

Step 1: Assign a Privacy and Security Officer

The Privacy Officer plays a significant role in workforce training and education, ensuring that all staff members are well-versed in HIPAA compliance. They are responsible for monitoring privacy practices, developing security measures, and scheduling regular policy reviews.

In larger organizations, the role may be divided, with an Information Security Officer overseeing the company’s security program. The Privacy and Security Officer(s) are pivotal in creating and implementing a comprehensive compliance program that aligns with HIPAA regulations and ensures the protection of PHI and ePHI.

Step 2: Perform a Risk Analysis

A risk analysis involves identifying potential vulnerabilities and threats to your organization’s processes, systems, and data. By carefully assessing these risks, you can develop effective mitigation strategies and implement necessary safeguards to protect your organization from potential audit findings and ensure compliance with relevant regulations.
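As a rough illustration of how identified risks might be ranked, the sketch below keeps a small risk register and scores each entry as likelihood times impact. The 1 to 5 scales, the threshold of 12, the `Risk` class, and the sample entries are all assumptions made for this example; HIPAA does not prescribe a particular scoring scheme.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    """One entry in a hypothetical risk register."""
    asset: str
    threat: str
    likelihood: int  # 1 (rare) to 5 (almost certain); the scale is an assumption
    impact: int      # 1 (negligible) to 5 (severe); the scale is an assumption

    @property
    def score(self) -> int:
        # Simple likelihood x impact scoring; other schemes are equally valid.
        return self.likelihood * self.impact


def prioritize(risks: list[Risk], threshold: int = 12) -> list[Risk]:
    """Return risks at or above the threshold, highest score first."""
    flagged = [r for r in risks if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


# Illustrative entries only.
register = [
    Risk("EHR database", "stolen credentials", likelihood=4, impact=5),
    Risk("Front-desk workstation", "unattended unlocked screen", likelihood=3, impact=3),
    Risk("Staff laptops", "loss or theft without disk encryption", likelihood=3, impact=5),
]

for risk in prioritize(register):
    print(f"{risk.score:>2}  {risk.asset}: {risk.threat}")
```

The flagged entries are the ones to pair with a documented mitigation and an owner, so the register doubles as evidence that the analysis was acted on.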

Step 3: Provide Employee Training

Educating your workforce on compliance policies, data security best practices, and the importance of safeguarding sensitive information is crucial.

By conducting regular training sessions and keeping comprehensive records of completed training, you can demonstrate your commitment to maintaining a well-informed and vigilant workforce, which significantly enhances your organization’s preparedness for an audit.
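One way to keep training records usable is to store the date each person last completed training and check it on a schedule. The sketch below assumes a 365-day refresh interval, which is a common practice rather than a number taken from HIPAA, and the names and dates are invented for illustration.

```python
from datetime import date, timedelta


def overdue_staff(last_trained: dict[str, date], today: date,
                  max_age_days: int = 365) -> list[str]:
    """Return, in alphabetical order, staff whose last training is too old."""
    cutoff = today - timedelta(days=max_age_days)
    return sorted(name for name, trained in last_trained.items() if trained < cutoff)


# Hypothetical training records: staff member -> date of last completed training.
records = {
    "A. Rivera": date(2023, 5, 2),
    "J. Chen": date(2022, 3, 14),
    "M. Okafor": date(2022, 6, 30),
}

print(overdue_staff(records, today=date(2023, 7, 27)))
```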

Step 4: Document All Locations Where PHI Is Stored

Document all physical and electronic storage sites, such as servers, databases, file cabinets, and even portable devices like laptops and smartphones.

By maintaining a comprehensive inventory of these locations and the PHI they contain, you demonstrate an organized approach to data management and enable auditors to verify that proper security measures are in place to protect PHI at all times.
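A PHI inventory can start as a simple structured list. The sketch below is one possible shape, assuming hypothetical field names (`kind`, `owner`, `encrypted`, `portable`) and made-up locations; a real inventory would likely track more detail, such as retention periods and backup sites.

```python
from dataclasses import dataclass


@dataclass
class PhiLocation:
    """One place where PHI is stored; all field names are illustrative."""
    name: str
    kind: str          # "electronic" or "physical"
    owner: str         # person or team accountable for the location
    encrypted: bool    # for physical locations, read this as "locked"
    portable: bool = False


def needs_attention(inventory: list[PhiLocation]) -> list[str]:
    """List the names of locations that lack encryption (or a lock)."""
    return [loc.name for loc in inventory if not loc.encrypted]


inventory = [
    PhiLocation("EHR database server", "electronic", "IT", encrypted=True),
    PhiLocation("Billing file cabinet", "physical", "Billing", encrypted=True),
    PhiLocation("Nurse tablet 3", "electronic", "Nursing", encrypted=False, portable=True),
]

print(needs_attention(inventory))
```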

Step 5: Review and Document HIPAA Policies and Procedures

Establish clear and well-defined procedures for responding to various requests related to privacy protection, access, correction, and transfers of Protected Health Information (PHI).

- Procedures for Responding to Requests for Privacy Protection – Your procedures should outline the steps to verify the identity of the requester, assess the validity of the request, and implement the necessary restrictions in accordance with HIPAA guidelines.

- Procedures for Responding to Requests for Access, Correction, and Transfers – Your procedures should define the process for handling these requests, including the timeframe within which the requests must be fulfilled and any associated fees, if applicable.

- Procedures for Maintaining an Accounting of Disclosures – Your organization should have well-documented procedures for recording and tracking such disclosures, ensuring accuracy, and being able to provide an accounting of disclosures to patients upon request.

Step 6: Report All Breaches

In the event of a breach of PHI, covered entities must act swiftly and responsibly to notify the affected individuals and the Department of Health and Human Services (HHS). Under the Breach Notification Rule, a breach affecting more than 500 residents of a state or jurisdiction must also be reported to prominent media outlets.

Your breach reporting procedures should be well-defined, outlining the steps to be taken immediately after a breach is discovered. This includes conducting a thorough assessment of the incident to determine the extent of the breach and the types of information involved.

Once the assessment is complete, affected individuals should be promptly notified, providing them with essential details about the breach, potential risks, and steps they can take to protect themselves.

Additionally, covered entities must report the breach to the HHS through the OCR’s online breach reporting portal. The report should include specific information about the breach, such as the number of affected individuals, the types of PHI involved, and the steps taken to mitigate the risks and prevent future incidents.

The HHS may investigate the breach further, and the incident may become a subject of review during a HIPAA audit.
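The notification steps above come with deadlines, and a small helper can make them explicit in an incident checklist. The sketch below reflects the commonly cited Breach Notification Rule timelines: individual notice no later than 60 calendar days after discovery, HHS notice on the same schedule for breaches affecting 500 or more people (otherwise within 60 days after the end of the calendar year), and media notice when more than 500 residents of a state or jurisdiction are affected. The function name and return shape are hypothetical, and the figures should be confirmed against the current regulation before anyone relies on them.

```python
from datetime import date, timedelta


def notification_plan(discovered: date, affected: int) -> dict:
    """Sketch of the outer notification deadlines for a breach.

    Simplification: `affected` is treated as residents of a single state.
    These are latest dates; notice is due without unreasonable delay.
    """
    sixty_days = discovered + timedelta(days=60)
    large = affected >= 500
    # Smaller breaches may be reported to HHS annually, within 60 days
    # after the end of the calendar year in which they were discovered.
    annual = date(discovered.year, 12, 31) + timedelta(days=60)
    return {
        "individuals_by": sixty_days,
        "hhs_by": sixty_days if large else annual,
        "media_notice": affected > 500,
    }


plan = notification_plan(date(2023, 7, 27), affected=1200)
for key, value in plan.items():
    print(f"{key}: {value}")
```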

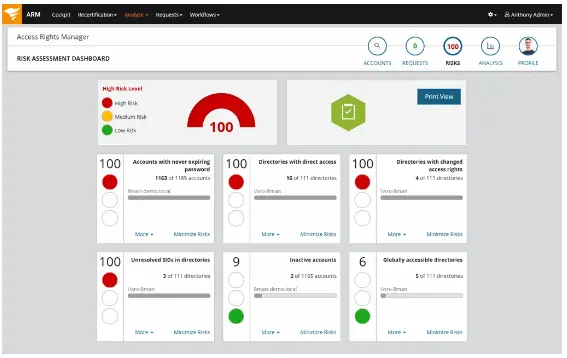

Step 7: Perform Regular Audits

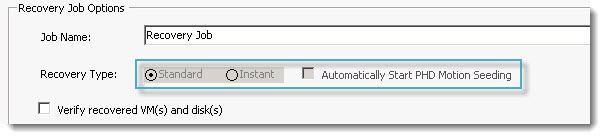

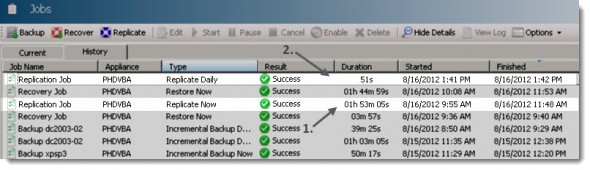

Internal assessments enable covered entities to proactively identify potential vulnerabilities, gaps, and areas of non-compliance within their operations. By conducting periodic audits, organizations can monitor their adherence to HIPAA policies and procedures, assess the effectiveness of their privacy and security measures, and make necessary adjustments to enhance data protection.

Regular audits also serve as valuable learning opportunities, fostering a culture of compliance and strengthening an organization’s ability to respond confidently to official HIPAA audits.

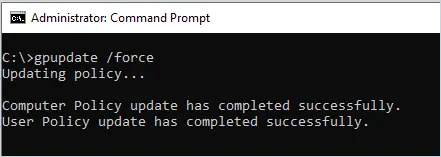

Step 8: Keep HIPAA Audit Logs

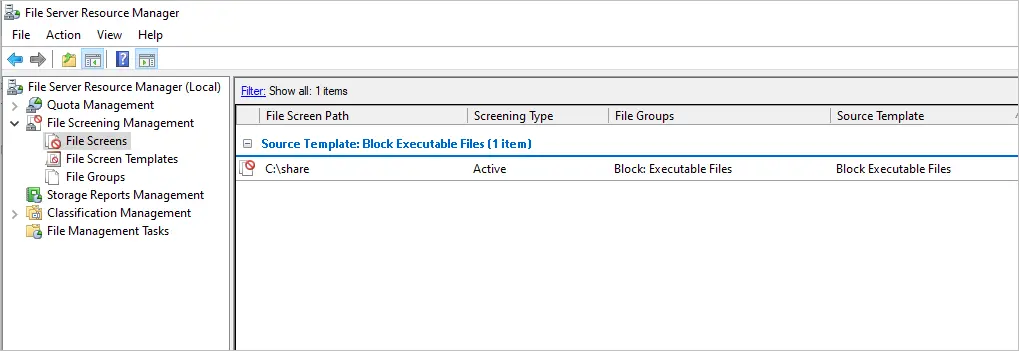

As mandated by the Security Rule’s audit controls standard, covered entities must implement hardware, software, and/or procedural mechanisms that record and examine activity in information systems that contain or use ePHI.

These audit logs serve as an essential tool for tracking user access, detecting potential security breaches, and investigating any unauthorized or suspicious activities.
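To show what a tamper-evident audit log can look like, the sketch below chains entries together with SHA-256 hashes so that editing an earlier record breaks verification. This is one illustrative design, not something the Security Rule requires, and the field names, user names, and resource paths are assumptions. A production system would normally rely on the logging features of the EHR, database, or a dedicated logging platform.

```python
import hashlib
import json
from datetime import datetime, timezone


def append_entry(log: list[dict], user: str, action: str, resource: str) -> dict:
    """Append an access event whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else "0" * 64
    entry = {
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,
        "resource": resource,
        "prev_hash": prev_hash,
    }
    payload = json.dumps(entry, sort_keys=True).encode("utf-8")
    entry["hash"] = hashlib.sha256(payload).hexdigest()
    log.append(entry)
    return entry


def verify(log: list[dict]) -> bool:
    """Recompute every hash; False means an entry was altered or reordered."""
    prev_hash = "0" * 64
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        if entry["prev_hash"] != prev_hash:
            return False
        if hashlib.sha256(payload).hexdigest() != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


audit_log: list[dict] = []
append_entry(audit_log, "dr.lee", "read", "patient/1042/chart")
append_entry(audit_log, "billing.kim", "export", "patient/1042/invoice")
print(verify(audit_log))  # chain is intact

audit_log[0]["user"] = "someone.else"  # simulate tampering with a stored entry
print(verify(audit_log))  # chain no longer verifies
```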

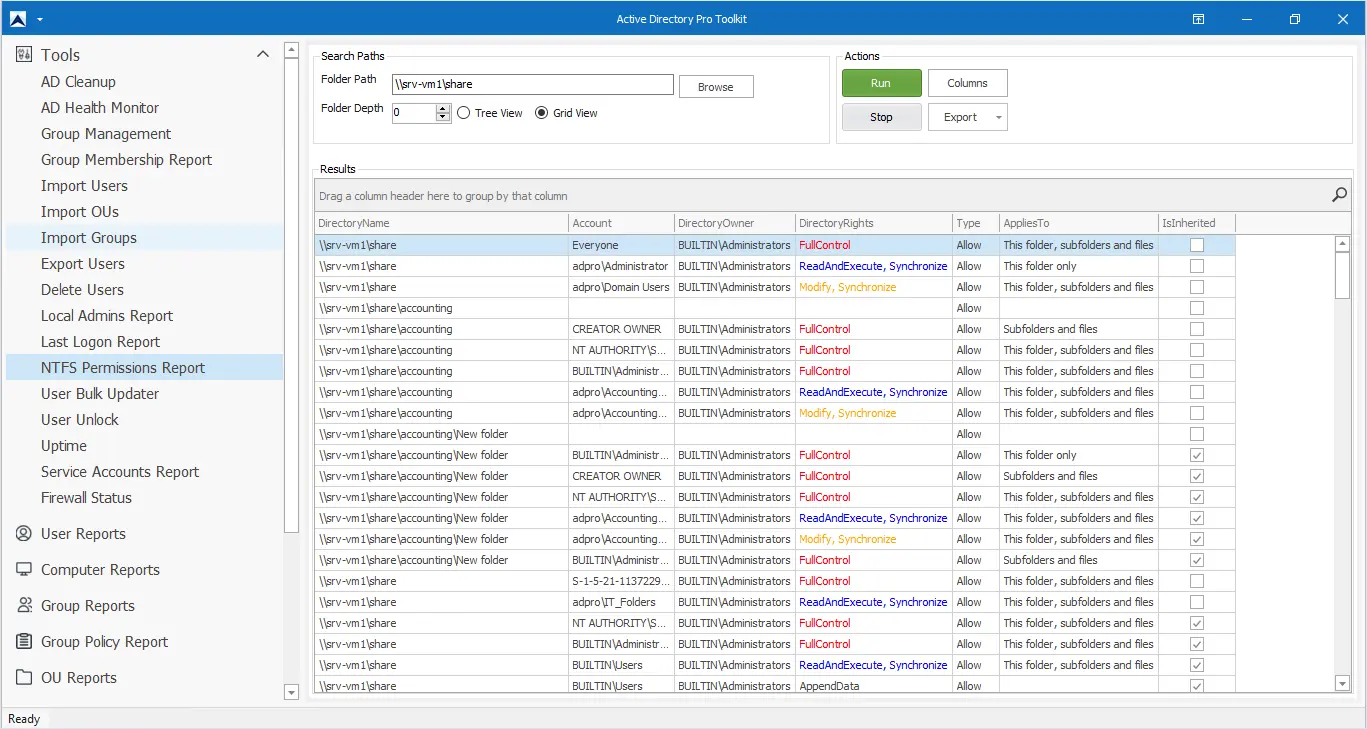

Step 9: Institute Role-Based Access Controls (RBAC)

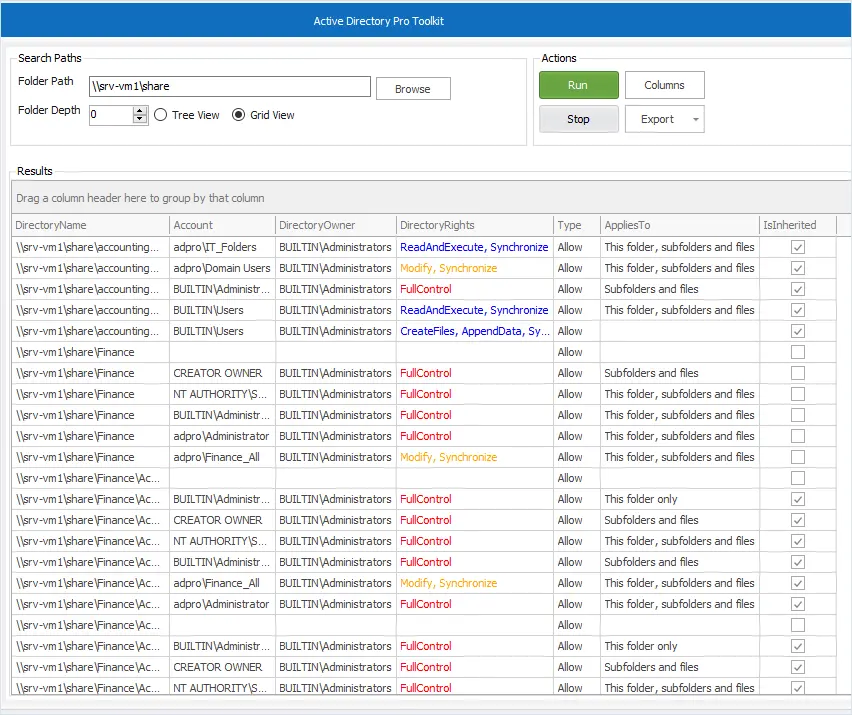

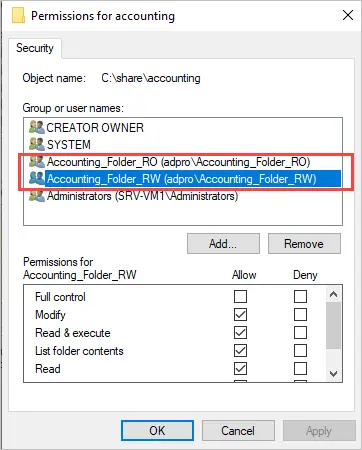

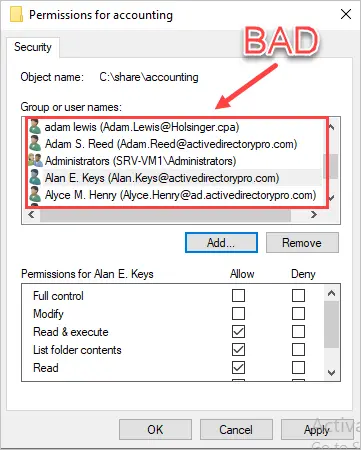

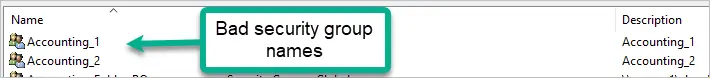

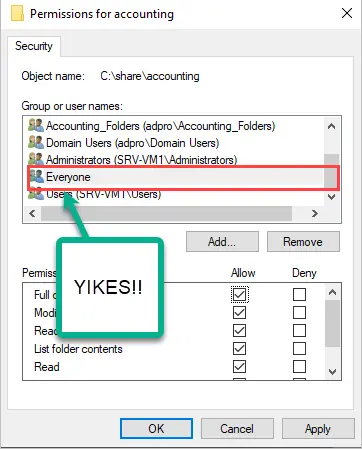

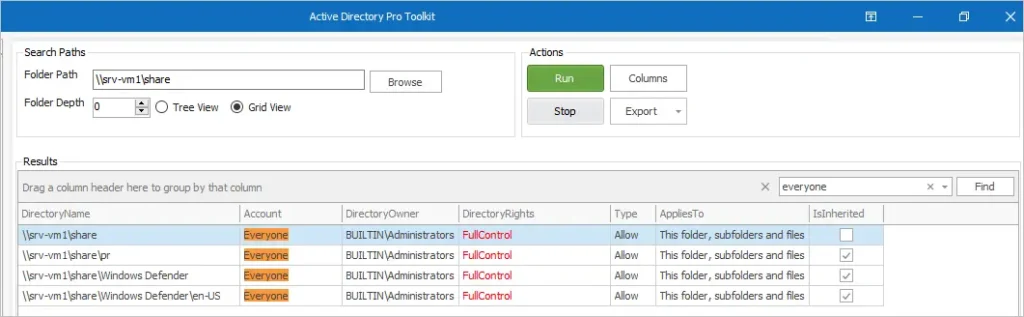

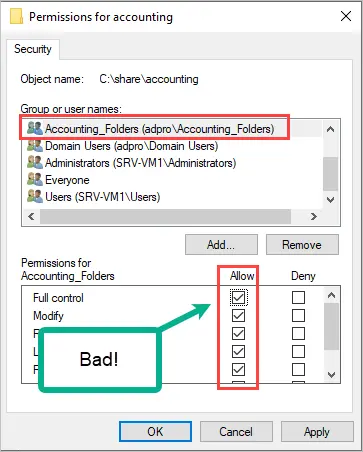

RBAC ensures that individuals within an organization have access only to the data necessary for their specific job functions. By assigning roles and permissions based on job responsibilities, organizations can minimize the risk of unauthorized access to ePHI.

RBAC enhances overall data protection, streamlines data management, and helps meet HIPAA compliance requirements, making it an essential safeguard in the healthcare industry.
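At its core, RBAC is a mapping from roles to permissions plus a check that denies anything not explicitly granted. The sketch below is a minimal illustration; the role names and the `resource:action` permission strings are made up for this example and would come from your own job functions and systems.

```python
# Hypothetical roles and permissions, expressed as "resource:action" strings.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "physician": {"chart:read", "chart:write", "orders:write"},
    "nurse": {"chart:read", "vitals:write"},
    "billing": {"invoice:read", "invoice:write"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Deny by default: unknown roles and unlisted permissions are refused."""
    return permission in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("nurse", "chart:read"))    # nurses may read charts
print(is_allowed("billing", "chart:read"))  # billing staff may not
```

Keeping the mapping in one place makes it easier to review during a self-audit, since each grant can be checked against the job function it is meant to support.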

Step 10: Have a Risk-Management / Emergency Action Plan In Place

Your plan should include a thorough risk assessment, identification of vulnerabilities, and strategies for prevention and response. By proactively addressing risks and defining proper procedures in case of data breaches, natural disasters, or other emergencies, healthcare organizations can ensure the continuity of critical services, protect patient information, and maintain HIPAA compliance.

Step 11: Review All Business Associate Agreements (BAAs)

BAAs outline the responsibilities and obligations of business associates regarding HIPAA compliance. Ensuring that BAAs accurately reflect current HIPAA requirements and cover all aspects of data protection is critical to maintaining a secure ecosystem for patient information.

Regular reviews and updates help enforce accountability and compliance among business associates, ultimately safeguarding the confidentiality and integrity of ePHI.

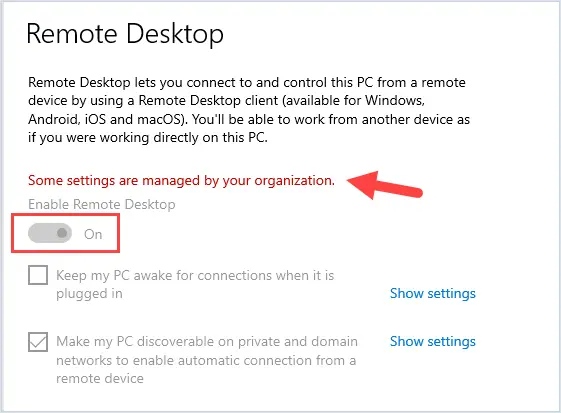

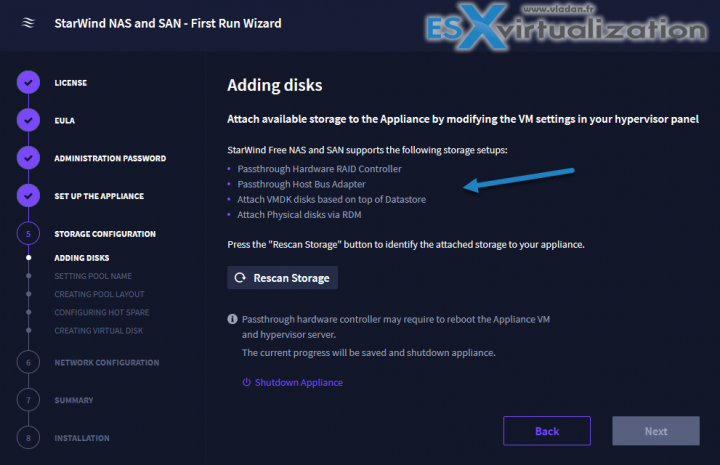

Step 12: Upgrade Your Network Security

Implementing advanced firewalls, intrusion detection systems, and data encryption protocols enhances the protection of sensitive health information from unauthorized access and data breaches.

Network segmentation, multi-factor authentication, and regular security assessments also play a vital role in bolstering the overall security posture. A robust network security infrastructure not only safeguards patient data but also ensures a HIPAA-compliant environment that instills trust among patients and stakeholders in the healthcare industry.

Perimeter 81: Simplifying HIPAA Compliance with Secure Access Solutions

Perimeter 81 is a leading provider of secure access service edge (SASE) solutions. The company’s platform plays a crucial role in assisting organizations with the HIPAA compliance audit process. One of the key challenges in achieving HIPAA compliance is ensuring that all data transmissions, including those containing ePHI, are secure, regardless of the user’s location or device.

Perimeter 81’s Zero Trust Network as a Service (NaaS) model ensures that data is always encrypted and authenticated, providing a secure tunnel for remote employees and preventing unauthorized access to sensitive information.

With Perimeter 81’s solution, healthcare organizations can enforce role-based access controls and granular user permissions. This feature enables organizations to define access policies based on the principle of least privilege, ensuring that employees, contractors, and business associates can only access the data required for their specific roles.

The platform’s centralized management console allows IT administrators to monitor and control user access, streamlining the audit process by providing detailed logs of user activities and access attempts. This audit logging capability is essential for demonstrating compliance during a HIPAA audit, as it ensures that every interaction with ePHI is tracked, recorded, and auditable, reducing the risk of potential HIPAA violations.

Furthermore, Perimeter 81’s solution offers advanced threat prevention and detection mechanisms, including intrusion prevention and detection systems (IPS/IDS) and behavior-based analytics. These features help healthcare organizations identify and mitigate security threats before they escalate into major incidents or breaches, contributing to the overall security posture and reducing the likelihood of data breaches that could trigger a HIPAA audit.

By leveraging Perimeter 81’s SASE platform, healthcare organizations can enhance their security measures, simplify compliance management, and confidently navigate the complexities of the HIPAA compliance audit process.

How Much Do HIPAA Audits Cost?

The cost of a HIPAA audit can vary depending on several factors. If a healthcare organization is selected for an official audit conducted by the Office for Civil Rights (OCR), there are no direct costs incurred by the audited organization.

However, there are indirect costs associated with preparing for the audit, such as hiring consultants, allocating staff time, and implementing any necessary improvements to achieve compliance. Additionally, organizations can choose to perform voluntary self-audits using external or internal auditors, which may involve fees ranging from a few thousand to tens of thousands of dollars, depending on the scope and duration of the audit.

How Long Does It Take to Complete a HIPAA Audit?

The duration of a HIPAA audit can vary based on several factors. Typically, the length of an audit depends on the scope of the investigation, the size and complexity of the organization being audited, and the presence of external entities that may complicate and extend the investigation.

On average, a HIPAA audit can take anywhere from several weeks to several months to complete. The OCR usually provides advance notice before conducting an audit, informing the audited organization of the purpose, scope, and expected duration of the audit.

In cases of follow-up audits or if significant issues are identified, the audit process may take longer to ensure that the organization has implemented the necessary corrective actions.

What Happens When You Get Audited?

When a HIPAA compliance audit is initiated, the Office for Civil Rights (OCR) typically begins by sending questionnaires to selected organizations to assess their compliance. Based on the responses received, the OCR decides whether to proceed with a thorough investigation of the organization’s adherence to HIPAA rules, specifically focusing on the confidentiality, integrity, and availability of PHI.

The audit report will outline the organization’s efforts and may identify any gaps or weaknesses in their system. After the audit, the OCR provides draft findings, and within 60 days, the organization must develop and revise policies and procedures, which must be approved by the HHS.

Implementing the updated policies within 30 days is crucial, as failure to verify or comply with the rules can lead to significant financial penalties. Consistent review and updates of HIPAA policies, staff training on security measures, and prompt issue resolution are key to maintaining compliance during a HIPAA audit.

FAQs

Does HIPAA require audits?

HIPAA itself does not explicitly require audits. However, the Department of Health and Human Services (HHS) Office for Civil Rights (OCR) conducts periodic audits to assess the compliance of covered entities and business associates with HIPAA regulations. These audits help ensure the protection of sensitive health information and identify potential vulnerabilities that may need to be addressed.

How often are HIPAA audits conducted?

The frequency of HIPAA audits conducted by the OCR varies. In the past, the OCR has conducted both random and targeted audits. Random audits are less common and are typically conducted on a smaller scale due to resource limitations.

Targeted audits are usually triggered by complaints or breach reports and may focus on specific areas of non-compliance. The OCR uses its discretion to determine the scope and frequency of audits based on factors such as risk assessment, complaints, and breach incidents.

Does HIPAA require a third-party audit?

HIPAA does not explicitly mandate third-party audits. Covered entities and business associates can conduct internal self-assessments to evaluate their compliance with HIPAA regulations. However, some organizations may choose to undergo third-party audits as part of a proactive approach to ensure independent validation of their compliance efforts and to gain valuable insights from experts in the field.

Who conducts the HIPAA audit?

HIPAA audits are primarily conducted by the Department of Health and Human Services (HHS) Office for Civil Rights (OCR). The OCR is responsible for enforcing HIPAA regulations and ensuring that covered entities and business associates adhere to the Privacy, Security, and Breach Notification Rules.

In some cases, the OCR may engage third-party auditors to assist with conducting audits, but the oversight and enforcement remain under the purview of the OCR.

How do you prove HIPAA compliance?

Proving HIPAA compliance involves demonstrating that your organization has implemented policies, procedures, and safeguards to protect sensitive health information effectively. This includes having comprehensive documentation of risk assessments, security measures, workforce training, incident response plans, and business associate agreements.

Regular self-audits, risk analyses, and ongoing monitoring are crucial in providing demonstrable evidence of compliance. In the event of a HIPAA audit, organizations should be prepared to present these records and demonstrate their commitment to protecting the privacy and security of protected health information.

Source:

https://www.perimeter81.com/blog/compliance/hipaa-compliance-audit