Companies are increasingly using Cloud services to support their business processes. But which types of Cloud services are there, and what is the difference? Which kind of Cloud service is most suitable for you? Do you want to be unburdened or completely in control? Do you opt for maximum cost savings, or do you want the entire arsenal of possibilities and top performance? Can you still see the forest for the trees? In this article and in the next, I describe several different Cloud services, what the differences and features are and what exactly you need to pay attention to.

Let’s start with the definition of Cloud computing: the provision of IT services over the internet (the Cloud). Think of storage, software, servers, databases, etc. Depending on what is offered (for example, license management or data storage), these services fall into categories such as IaaS (Infrastructure as a Service), PaaS (Platform as a Service), and SaaS (Software as a Service). These services are provided by a cloud provider. Whether this is Microsoft (Azure), Amazon (AWS), or another vendor (Google, Alibaba, Oracle, etc.), each vendor offers Cloud services that fall under one of the categories we are about to discuss.

One feature of Cloud computing is that what you pay depends on the service you purchase and, for many services, on how much you use. For example, for SaaS, you pay for the software’s license and support. This means that if you buy a SaaS service (e.g., Office 365) and don’t use it, you will still be charged. By contrast, if you purchase storage with IaaS, you only pay for the amount of storage you use, possibly supplemented with additional services such as backup.
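To make the difference between these two billing styles concrete, here is a minimal Python sketch. The function names and all prices are made up for illustration (amounts are in cents to keep the arithmetic exact); real provider pricing is more detailed than this.

```python
def saas_monthly_cost_cents(seats, price_per_seat_cents):
    """Subscription billing: every licensed seat is charged, used or not."""
    return seats * price_per_seat_cents


def iaas_storage_monthly_cost_cents(used_gb, price_per_gb_cents, backup_cents=0):
    """Consumption billing: charged for the storage actually used, plus optional extras."""
    return used_gb * price_per_gb_cents + backup_cents


# Hypothetical prices: 1000 cents per seat, 2 cents per GB, 500 cents for backup.
print(saas_monthly_cost_cents(20, 1000))            # 20000, even if nobody logs in
print(iaas_storage_monthly_cost_cents(500, 2, 500)) # 1500, grows only with usage
```

The SaaS amount stays the same whether or not the seats are used, while the IaaS storage amount follows the number of gigabytes stored.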

Sometimes Cloud services complement each other. Think, for example, of DBaaS (Database as a Service), where a database is offered via the Cloud. Often you need an application server and other infrastructure to read data from this database. These usually run in a Landing Zone built on an IaaS service. But some services can also stand alone, for example, SaaS (Office 365).

Each Cloud service has specific characteristics. Some require little or no technical knowledge, while others can be challenging to manage and use according to best practices. This often depends on how much control you want. If you want an application from the Cloud where you are completely relieved of all worries, little technical knowledge is required from the user or the administrator. But if you want maximum control, IaaS gives you an enormous range of possibilities. In this article, you can read what you need to consider.

It is advisable to think beforehand about exactly what your requirements and wishes are and whether they fit the service you want to purchase. If you wish to use an application in the Cloud but rely on many custom settings, this is often not possible. If you don’t want to be responsible for updating and backing up an application and use little or no customization, SaaS can be very interesting. Also, look at how a service fits into your business process. Does it offer possibilities for automation, reporting, or disaster recovery? Is it possible to temporarily allocate extra resources in case of peak demand (horizontal or vertical scaling), and what guarantees does the supplier offer with this service? Think of the RPO and RTO (recovery point and recovery time objectives) and the availability of the service desk in case of a calamity.
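As an illustration of checking a supplier's guarantees against your own requirements, the following Python sketch compares offered recovery targets with required ones. The class, the function, and all numbers are hypothetical; actual guarantees come from the supplier's SLA.

```python
from dataclasses import dataclass


@dataclass
class RecoveryTargets:
    rpo_hours: int  # maximum tolerable data loss, in hours
    rto_hours: int  # maximum tolerable downtime, in hours


def meets_requirements(offered, required):
    """True when the offered targets are at least as strict as the required ones."""
    return (offered.rpo_hours <= required.rpo_hours
            and offered.rto_hours <= required.rto_hours)


# Hypothetical requirement and two hypothetical supplier offers.
required = RecoveryTargets(rpo_hours=4, rto_hours=8)
offer_a = RecoveryTargets(rpo_hours=1, rto_hours=4)
offer_b = RecoveryTargets(rpo_hours=24, rto_hours=4)

print(meets_requirements(offer_a, required))  # True
print(meets_requirements(offer_b, required))  # False: up to 24 hours of data could be lost
```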

Let’s get started quickly!

IaaS (Infrastructure as a Service)

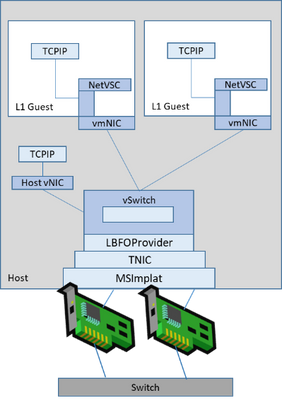

One of the best-known Cloud services is undoubtedly IaaS. For many companies, this is their first introduction to a Cloud service. You rent the infrastructure from a cloud provider: for example, the network infrastructure, virtual servers (including the operating system), and storage. A feature of IaaS is that you have complete control, both on the management side and in how you deploy (request) resources. This can be done in various automated ways (PowerShell, IaC, DevOps pipelines, etc.) and via the classic management interface that all providers offer. Things that are often not possible with a PaaS service are possible with an IaaS service. In principle, you can set up a complete server environment (all services are available for this) while still getting the benefits of the Cloud, such as scalability and pay per use or per resource.

IaaS therefore most resembles an on-premises implementation. You often see it used in combination with virtual servers. It is critical to investigate possible limitations beforehand, for example I/O, because performance in practice can differ from that of a traditional local environment. You are responsible for arranging security and backup. The advantage is that you have influence on the choice of technology. You can customize the setup according to your needs and wishes and standardize the configuration for your organization. Deployment can be complex, and you are forced to make your own choices, so some expertise is needed.

PaaS (Platform as a Service)

PaaS stands for Platform as a Service and goes a step further than IaaS. You get a platform where you can do the configuration yourself. When you use a PaaS service, the vendor takes care of the underlying layer (IaaS), the operating system, and the middleware. So you sacrifice something in terms of control and capabilities. PaaS services are ideal for developers and web and application builders. After all, you can quickly make an environment available. Using it means you no longer have to worry about the infrastructure, operating system, and middleware; the supplier takes care of these based on best practices. This also offers security advantages, as patching and upgrading these layers is now done by the vendor.

Another advantage is that you can focus entirely on what you want to do and not on managing the environment. You can also easily purchase additional services and quickly scale them up or down. When you are finished, you can stop and remove the resources so that you incur no further costs.

However, do take existing software into account. Not all existing software is suitable for a PaaS environment; for example, you do not have full access to the environment (after all, the vendor is responsible for it). Also, not all CPU power and memory are allocated to your Cloud application. This is because it is often hosted on a shared platform, so other applications (and databases) may use the same resources. As for the database, you have the same advantages and disadvantages as with DBaaS.

SaaS (Software as a Service)

This is probably a service you’ve been using for a while. In short, you consume applications via the Cloud on a subscription basis. The provider is responsible for managing the infrastructure, patches, and updates. A SaaS solution is ready for use immediately, and you directly benefit from the added value, such as fast scaling up and down and paying per use. Examples are Office 365, SharePoint Online, Salesforce, Exact Online, Dropbox, etc.

Unlike IaaS and PaaS, where there is still a lot of freedom and you have to set everything up yourself, with SaaS it is immediately clear what you are buying and what you will get. With this service, you are relieved of most of your worries. The vendor is responsible for all updates, patches, development, and more. You cannot make updates or changes to the software yourself.
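The division of responsibilities across the three models can be summarized in a small Python sketch. The four-layer stack and the names used here are a simplification chosen for illustration; real offerings split responsibilities in more detail (security and backup, for example, are not modeled).

```python
# Simplified stack, from the bottom layer to the top layer (an assumption).
LAYERS = ["infrastructure", "operating system", "middleware", "application"]

# Layers the vendor manages under each service model, as described in this article.
VENDOR_MANAGED = {
    "IaaS": {"infrastructure"},
    "PaaS": {"infrastructure", "operating system", "middleware"},
    "SaaS": {"infrastructure", "operating system", "middleware", "application"},
}


def customer_managed(model):
    """Return the layers that remain the customer's responsibility."""
    return [layer for layer in LAYERS if layer not in VENDOR_MANAGED[model]]


for model in ("IaaS", "PaaS", "SaaS"):
    print(model, "->", customer_managed(model))
# IaaS -> ['operating system', 'middleware', 'application']
# PaaS -> ['application']
# SaaS -> []
```

The shorter the list for a model, the more you are unburdened and the less control you keep.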

Many companies use one or more SaaS services, and often there is a distinction even within a company. For example, each department may have its own specific applications and associated SaaS services. With this service, you only pay for what you need, including the licenses. These licenses can easily be scaled up or down.

It is interesting for many companies to work with SaaS solutions. It is particularly interesting for start-ups, small companies, and freelancers because you only purchase what you use, you don’t have unnecessarily high start-up costs, and you don’t have to worry about the maintenance of the software.

But SaaS can also be a perfect solution for larger companies. For example, if you hire extra staff for specific periods, you can quickly get these people working with the software they need. You buy several additional licenses, and you can cancel them when the temporary staff leaves.

How can Vembu help you?

BDRSuite is a comprehensive Backup & DR solution designed to protect your business-critical data across Virtual (VMware, Hyper-V), Physical Servers (Windows, Linux), SaaS (Microsoft 365, Google Workspace), AWS EC2 Instances, Endpoints (Windows, Mac) and Applications & Databases (MS Active Directory, MS Exchange, MS Outlook, SharePoint, MS SQL, MySQL).

To protect your workloads running on SaaS (Microsoft 365, Google Workspace), try out a full-featured 30-day free trial of the latest version of BDRSuite.

Source:

https://www.vembu.com/blog/saas-vs-paas-vs-iaas-whats-the-difference-how-to-choose/
