Windows Hyper-V Server is a free hypervisor platform from Microsoft for running virtual machines. In this article, we’ll look at how to install and configure Windows Hyper-V Server 2019, the latest version, released in summer 2019 (this guide also applies to Windows Hyper-V Server 2016).

Hyper-V Server 2019 is suitable for those who don’t want to pay for a hardware virtualization operating system. Hyper-V Server has no restrictions and is free. Windows Hyper-V Server has the following benefits:

- Support for all popular OSs. There are no compatibility problems. All Windows and modern Linux and FreeBSD operating systems have Hyper-V support.

- Many ways to back up virtual machines: simple scripts, open-source software, free and commercial versions of popular backup programs.

- Although Hyper-V Server does not have the Windows Server GUI (graphical management interface), you can manage it remotely using the standard Hyper-V Manager, which you can install on any computer running Windows. It can also be managed through a web interface using Windows Admin Center.

- Hyper-V Server is based on a popular server platform, familiar and easy to work with.

- You can install Hyper-V on a pseudo-RAID, e.g., an Intel RAID controller or Windows software RAID.

- You do not need to license your hypervisor, so it is well suited to VDI or Linux VMs.

- Low hardware requirements. Your processor must support hardware virtualization (Intel VT-x (VMX) or AMD-V (SVM)) and second-level address translation (SLAT) (Intel EPT or AMD RVI). These processor options must be enabled in BIOS/UEFI or, in a nested setup, exposed by the parent host. You can find the full system requirements on the Microsoft website.
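The processor requirements above can be checked before installation. The following is a minimal sketch, assuming you can boot an existing Windows 10 or Windows Server 2016+ installation on the target hardware; it is not part of the original procedure.

```powershell
# Run on an existing Windows installation on the target hardware.
# Lists the Hyper-V related properties reported by the OS, including
# SLAT support and whether virtualization is enabled in firmware.
Get-ComputerInfo -Property "HyperV*"
```

If a hypervisor is already running on the machine, the requirement fields may be reported as empty, because the checks are skipped in that case.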

You should distinguish between Windows Server 2016/2019 with the standard Hyper-V role and Free Hyper-V Server 2019/2016. These are different products.

It is worth noting that if you are using a free hypervisor, you are still responsible for licensing your virtual machines. You can run any number of VMs with an open-source OS, such as Linux, but you have to license your Windows virtual machines. Desktop Windows editions are licensed with a product key, and if you are using Windows Server as a guest OS, you must license it by the number of physical cores on your host. For more details, see the Windows Server licensing rules for virtual environments.

Contents:

- What’s New in Hyper-V Server 2019?

- How to Install Hyper-V Server 2019/2016?

- Using Sconfig Tool for Hyper-V Server Basic Configuration

- Hyper-V Server 2019 Remote Management

- Using PowerShell to Configure Hyper-V Server 2019

- How to Configure Hyper-V Server 2019 Network Settings from PowerShell?

- Hyper-V Server Remote Management Firewall Configuration

- Configuring Hyper-V Storage for Virtual Machines

- How to Configure Hyper-V Server Host Settings via PowerShell?

- Creating Hyper-V Virtual Switch

What’s New in Hyper-V Server 2019?

Let’s consider new Hyper-V Server 2019 features in brief:

- Added Shielded Virtual Machines support for Linux;

- VM configuration version 9.0 (with hibernation support);

- ReFS deduplication support;

- Core App Compatibility: the ability to run additional graphical management panels in the Hyper-V Server console;

- Support for 2-node Hyper-V clusters and cross-domain cluster migration.

How to Install Hyper-V Server 2019/2016?

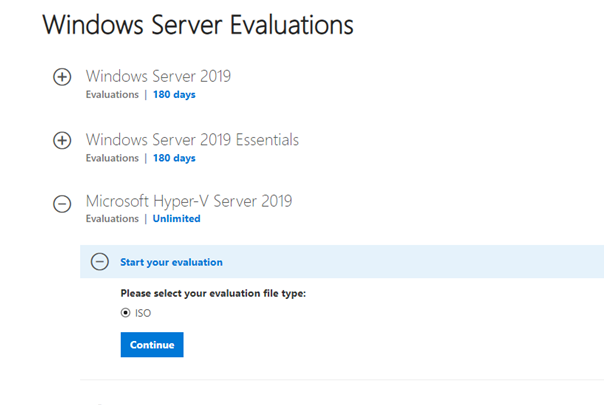

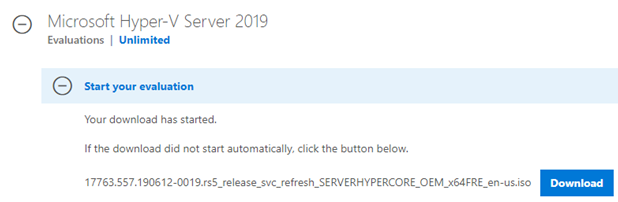

You can download the Hyper-V Server 2019 ISO installation image here: https://www.microsoft.com/en-us/evalcenter/evaluate-hyper-v-server-2019

After you click Continue, a short registration form will appear. Fill in your data and select the language of the OS to be installed. Wait until the Hyper-V image download completes. The .iso file size is about 2.81 GB.

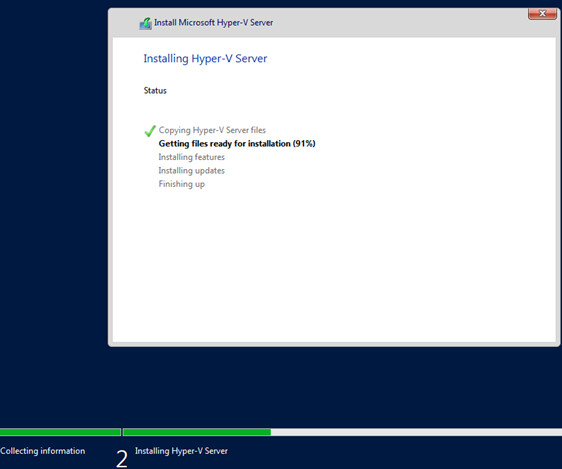

Microsoft Hyper-V Server installation is standard and intuitive. It works much like installing Windows 10. Just boot your server (computer) from the ISO image and follow the instructions of the installation wizard.

Using Sconfig Tool for Hyper-V Server Basic Configuration

After the installation, the system will prompt you to change the administrator password. Change it, and you will get to the hypervisor console.

Please note that Hyper-V Server does not have a familiar Windows GUI. You will have to configure most settings through the command line.

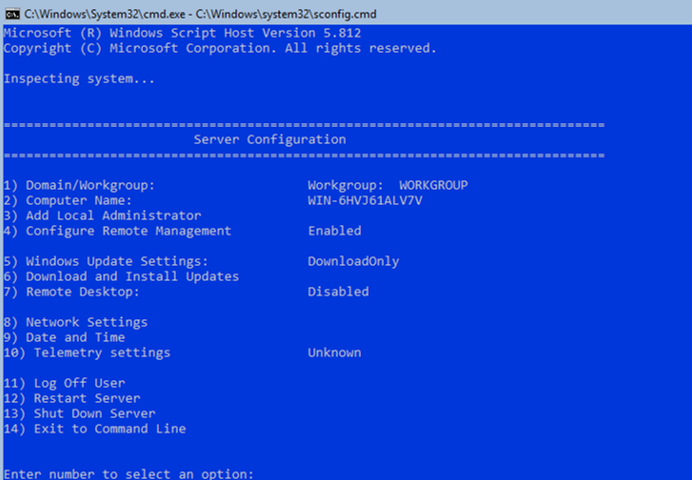

There are two windows on the desktop — the standard command prompt and the sconfig.cmd script window. You can use this script to perform the initial configuration of your Hyper-V server. Enter the number of the menu item you are going to work with in the “Enter number to select an option:” line.

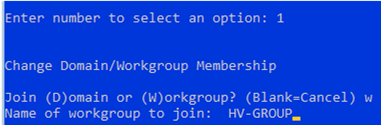

- The first menu item allows you to join your server to an AD domain or a workgroup. In this example, we’ll join the server to the workgroup called HV-GROUP.

- Change the hostname of your server.

- Create a local administrator user (another account in addition to the built-in administrator account). Note that when you type the local administrator password, the cursor stays in the same place; the password and its confirmation are still entered successfully.

- Enable remote access to your server. This lets you manage it using Server Manager, MMC and PowerShell consoles, connect via RDP, and check its availability using ping or tracert.

- Configure Windows Update. Select one of the three modes:

  - Automatic (automatic update download and installation)

  - DownloadOnly (only download without installation)

  - Manual (the administrator decides whether to download or install the updates)

- Download and install the latest security updates.

- Enable RDP access with/without NLA.

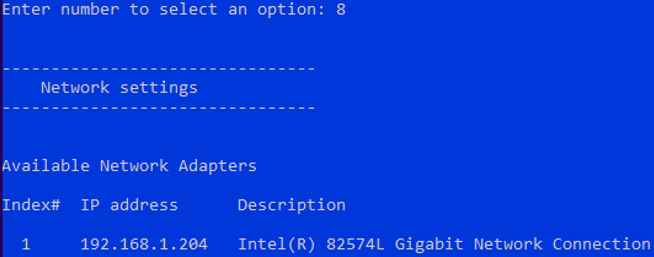

- Configure your network adapter settings. By default, your server receives its IP address from a DHCP server. It is better to configure a static IP address here.

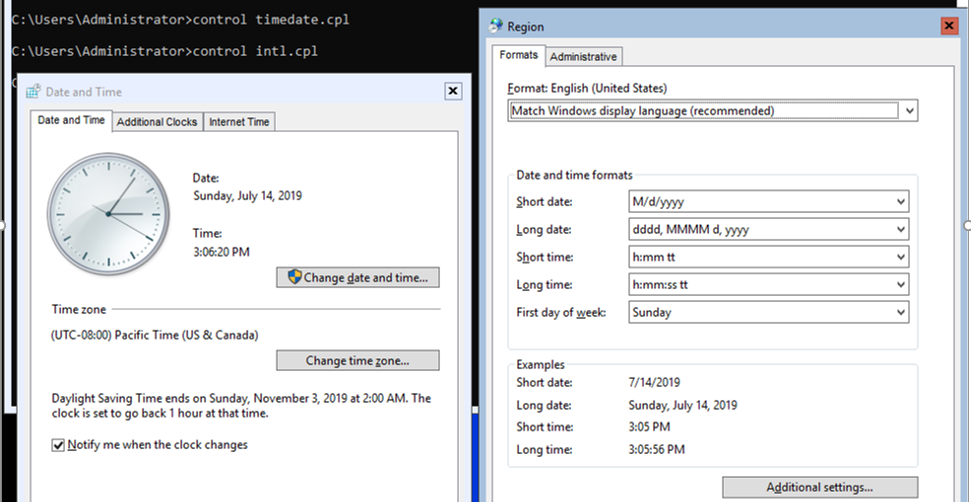

- Set the date and time of your system.

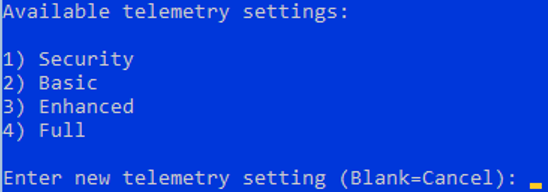

- Configure the telemetry. Hyper-V Server won’t allow you to disable it completely. Select the mode you want.

You can also configure the date, time and time zone using the following command:

control timedate.cpl

Regional parameters:

control intl.cpl

These commands open standard consoles.
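Several of the sconfig steps above also have PowerShell equivalents. The sketch below assumes the hostname SERVERHV and the workgroup HV-GROUP used in this article; the time zone ID is only an example value.

```powershell
# Rename the server (takes effect after a restart).
Rename-Computer -NewName "SERVERHV"

# Join the workgroup used in this article.
Add-Computer -WorkgroupName "HV-GROUP"

# Show the current time zone and list the available IDs that contain "Europe".
Get-TimeZone
Get-TimeZone -ListAvailable | Where-Object Id -like "*Europe*"

# Set the time zone by ID ("UTC" is an example; use an ID from the list above).
Set-TimeZone -Id "UTC"

# Restart to apply the new hostname.
Restart-Computer
```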

Note! If you have closed all windows and see a black screen, press Ctrl+Shift+Esc. This key combination starts Task Manager and works in an RDP session as well. You can use it to start the command prompt or the Hyper-V configuration tool (click File -> Run new task and enter cmd.exe or sconfig.cmd).

Hyper-V Server 2019 Remote Management

To conveniently manage Free Hyper-V Server 2019 from a graphical interface, you can use:

- Windows Admin Center

- Hyper-V Manager: this is the method we’ll use below (in my view, it is more convenient than WAC, at least so far)

To manage Hyper-V Server 2016/2019, you will need a computer running Windows 10 Pro or Enterprise x64 edition.

Your Hyper-V server must be accessible by its hostname. In a domain network, a matching A record must exist on the DNS server. In a workgroup, you will have to create the A record manually on your local DNS server or add it to the hosts file on the client computer. In our case, it looks like this:

192.168.2.50 SERVERHV
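The hosts entry can also be added from PowerShell. This is a minimal sketch, assuming the IP address and hostname shown above and an elevated PowerShell session on the client computer.

```powershell
# Path to the hosts file on the client computer.
$hostsFile = Join-Path $env:SystemRoot "System32\drivers\etc\hosts"
$entry     = "192.168.2.50 SERVERHV"

# Append the entry only if the hostname is not listed yet.
# The leading newline guards against a file that does not end with a line break.
if (-not (Select-String -Path $hostsFile -Pattern "\bSERVERHV\b" -Quiet)) {
    Add-Content -Path $hostsFile -Value ([Environment]::NewLine + $entry)
}

# Check that the name now resolves on the client.
Resolve-DnsName -Name SERVERHV
```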

If the account you are using on the client computer differs from the Hyper-V administrator account (and it should), you will have to explicitly save the credentials used to connect to the Hyper-V server. To do it, run this command in the command prompt (cmd.exe; in PowerShell, the $$ in this sample password would be expanded as a variable):

cmdkey /add:SERVERHV /user:hvadmin /pass:HVPa$$word

We have specified the host and the credentials to access Hyper-V. If you have more than one server, do it for each of them.

Then start a PowerShell prompt as administrator and run the following command:

winrm quickconfig

Answer YES to all questions. This configures the WinRM service to start automatically and enables the remote management rules in your firewall.

Add your Hyper-V server to the trusted hosts list:

Set-Item WSMan:\localhost\Client\TrustedHosts -Value "SERVERHV"

If you have multiple servers, add each of them to trusted hosts.
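When adding more servers, note that Set-Item with -Value replaces the existing list. The sketch below appends to it instead; SERVERHV2 is a hypothetical second host used only for illustration.

```powershell
# Append another host to the TrustedHosts list instead of overwriting it.
# SERVERHV2 is a hypothetical hostname.
Set-Item WSMan:\localhost\Client\TrustedHosts -Value "SERVERHV2" -Concatenate -Force

# Show the resulting list.
Get-Item WSMan:\localhost\Client\TrustedHosts

# Check that the WinRM service on the Hyper-V server responds.
Test-WSMan -ComputerName SERVERHV
```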

Run dcomcnfg from the command prompt and expand Component Services -> Computers -> My Computer. Right-click My Computer, select Properties and go to COM Security -> Access Permissions -> Edit Limits. In the next window, check the Remote Access permission for the ANONYMOUS LOGON user.

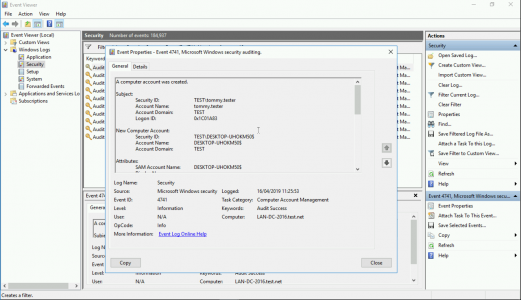

Then let’s try to connect to the remote server. Run the Computer Management console (compmgmt.msc), right-click on the console root and select Connect to another computer.

Now you can manage the Task Scheduler, disks, services and view the event log using standard MMC consoles.

Install Hyper-V Manager on Windows 10. Open Turn Windows features on or off (optionalfeatures.exe). In the window that opens, find Hyper-V and check Hyper-V Management Tools to install it.

The Hyper-V Manager snap-in will be installed. Start it and connect to your Hyper-V server.
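The management tools can also be installed and tested from an elevated PowerShell prompt on the Windows 10 client. This is a sketch rather than the article's original procedure; it assumes the SERVERHV hostname used above and prompts for the Hyper-V administrator credentials.

```powershell
# Install Hyper-V Manager and the Hyper-V PowerShell module on the client.
Enable-WindowsOptionalFeature -Online -FeatureName Microsoft-Hyper-V-Tools-All

# Prompt for the Hyper-V administrator account (for example, SERVERHV\hvadmin).
$cred = Get-Credential

# Query the remote host and list its virtual machines.
Get-VMHost -ComputerName SERVERHV -Credential $cred
Get-VM -ComputerName SERVERHV -Credential $cred
```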

Managing the hypervisor with Hyper-V Manager is generally straightforward. Next, I’ll describe some ways to manage Hyper-V Server from PowerShell.

Using PowerShell to Configure Hyper-V Server 2019

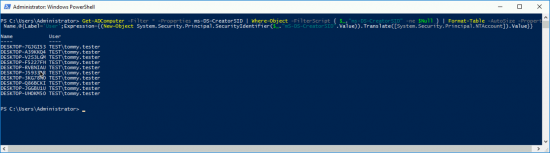

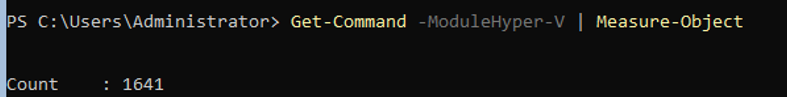

I recommend using PowerShell to configure your Hyper-V Server. The Hyper-V module provides more than two hundred cmdlets for managing a Hyper-V server. To count the ones available on your system, run:

Get-Command -Module Hyper-V | Measure-Object

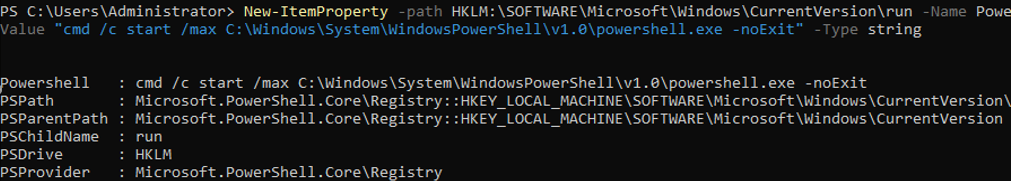

Configure the automatic start of the PowerShell console after logon.

New-ItemProperty -path HKLM:\SOFTWARE\Microsoft\Windows\CurrentVersion\run -Name PowerShell -Value "cmd /c start /max C:\Windows\system32\WindowsPowerShell\v1.0\powershell.exe -noExit" -Type string

After you log on to the server, a PowerShell window will open.

How to Configure Hyper-V Server 2019 Network Settings from PowerShell?

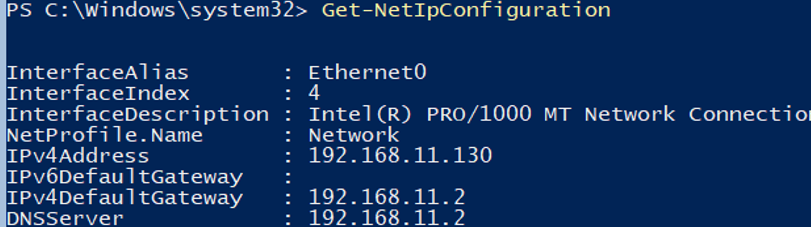

If you have not configured the network settings using sconfig.cmd, you can configure them through PowerShell. Using the Get-NetIPConfiguration cmdlet, you can view the current IP configuration of the network interfaces.

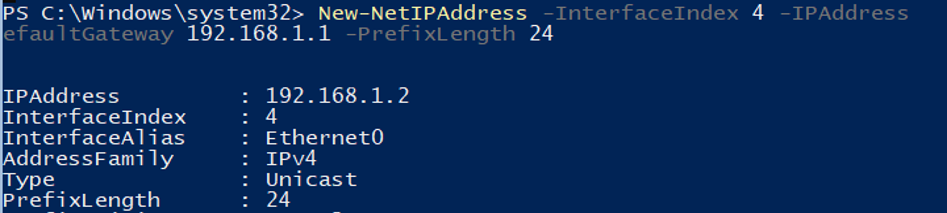

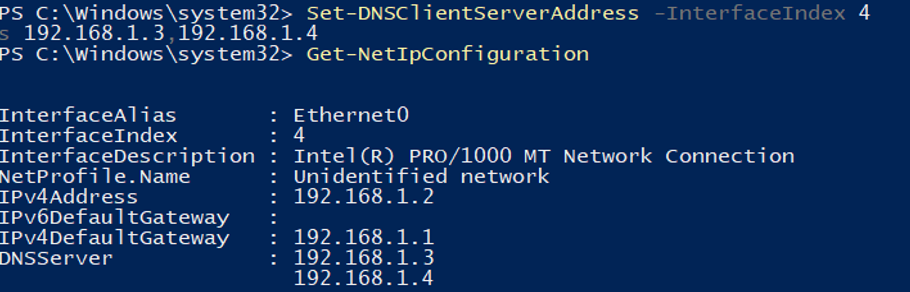

Assign a static IP address, network mask, default gateway and DNS server addresses. You can get the network adapter index (InterfaceIndex) from the results of the previous cmdlet.

New-NetIPAddress -InterfaceIndex 4 -IPAddress 192.168.1.2 -DefaultGateway 192.168.1.1 -PrefixLength 24

Set-DnsClientServerAddress -InterfaceIndex 4 -ServerAddresses 192.168.1.3,192.168.1.4

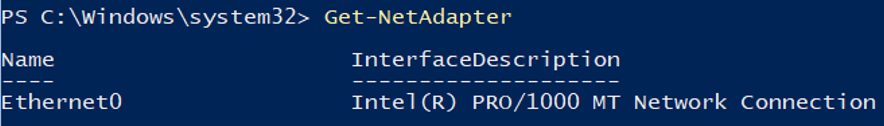

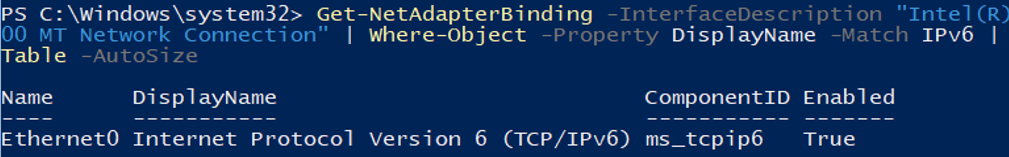
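After assigning the addresses, it can help to confirm the result. A minimal sketch, assuming the interface index 4 and the gateway address 192.168.1.1 from the example above:

```powershell
# Show the IPv4 address now bound to the interface.
Get-NetIPAddress -InterfaceIndex 4 -AddressFamily IPv4

# Show the DNS servers configured for the interface.
Get-DnsClientServerAddress -InterfaceIndex 4 -AddressFamily IPv4

# Check that the default gateway is reachable.
Test-NetConnection -ComputerName 192.168.1.1
```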

To configure IPv6, get the interface name and description using the Get-NetAdapter cmdlet from the PowerShell NetAdapter module.

Check the current IPv6 setting using the following command:

Get-NetAdapterBinding -InterfaceDescription "Intel(R) PRO/1000 MT Network Connection" | Where-Object -Property DisplayName -Match IPv6 | Format-Table -AutoSize

You can disable IPv6 as follows:

Disable-NetAdapterBinding -InterfaceDescription "Intel(R) PRO/1000 MT Network Connection" -ComponentID ms_tcpip6

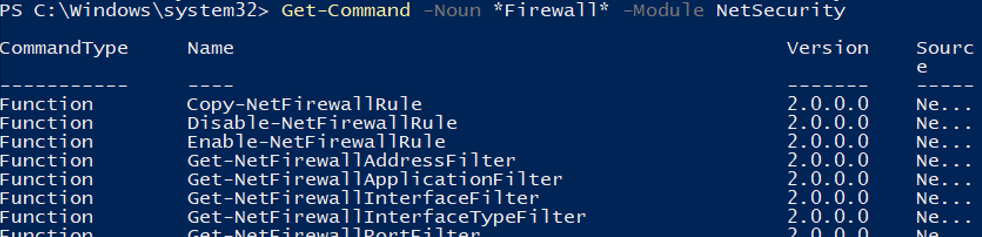

Hyper-V Server Remote Management Firewall Configuration

You can view the list of cmdlets to manage Windows Firewall using Get-Command:

Get-Command -Noun *Firewall* -Module NetSecurity

To fully manage your server remotely, run the following commands one by one to enable the Windows Firewall allow rules (on Hyper-V Server 2016, the "Windows Defender Firewall Remote Management" group is named "Windows Firewall Remote Management"):

Enable-NetFirewallRule -DisplayName "Windows Management Instrumentation (DCOM-In)"

Enable-NetFirewallRule -DisplayGroup "Remote Event Log Management"

Enable-NetFirewallRule -DisplayGroup "Remote Service Management"

Enable-NetFirewallRule -DisplayGroup "Remote Volume Management"

Enable-NetFirewallRule -DisplayGroup "Windows Defender Firewall Remote Management"

Enable-NetFirewallRule -DisplayGroup "Remote Scheduled Tasks Management"

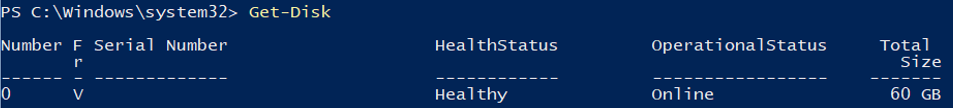
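To confirm that a rule group is actually enabled, you can list its rules. The sketch below checks one of the groups from the commands above; repeat it for the other group names as needed.

```powershell
# List the rules in one display group together with their state.
Get-NetFirewallRule -DisplayGroup "Remote Event Log Management" |
    Select-Object DisplayName, Enabled, Direction
```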

Configuring Hyper-V Storage for Virtual Machines

We will use a separate partition on a physical disk to store data (virtual machine files and iso files). View the list of physical disks on your server.

Get-Disk

Create a new partition of the largest possible size on the drive and assign the drive letter D: to it. Use the DiskNumber from Get-Disk results.

New-Partition -DiskNumber 0 -DriveLetter D -UseMaximumSize

Then format the partition as NTFS and specify its label:

Format-Volume -DriveLetter D -FileSystem NTFS -NewFileSystemLabel "VMStorage"

For more information about disk and partition management cmdlets in PowerShell, see the article PowerShell Disks and Partitions Management.

Create a directory where you will store virtual machine settings and vhdx files. The New-Item cmdlet allows you to create nested folders:

New-Item -Path "D:\HyperV\VHD" -Type Directory

Create the D:\ISO folder to store OS distribution images (iso files):

New-Item -Path D:\ISO -ItemType Directory

To create a shared network folder, use the New-SmbShare cmdlet and grant full access permissions to the group of local administrators of your server:

New-SmbShare -Path D:\ISO -Name ISO -Description "OS Distributives" -FullAccess "BUILTIN\Administrators"

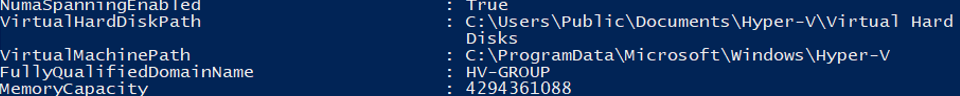
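The new share and its permissions can be checked with the matching Get cmdlets. A short sketch, assuming the share name ISO created above:

```powershell
# Show the share and the folder it points to.
Get-SmbShare -Name ISO

# Show who has access to the share and at what level.
Get-SmbShareAccess -Name ISO
```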

How to Configure Hyper-V Server Host Settings via PowerShell?

View the Hyper-V Server host settings using this command:

Get-VMHost | Format-List

By default, the paths for virtual machines and virtual disks are on the same partition as your operating system, which is not recommended. Specify the paths to the folders created earlier using this command:

Set-VMHost -VirtualMachinePath 'D:\HyperV' -VirtualHardDiskPath 'D:\HyperV\VHD'
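To confirm the change, you can read back just the two path properties. A minimal sketch:

```powershell
# Show only the default paths for VM configuration files and virtual disks.
Get-VMHost | Select-Object VirtualMachinePath, VirtualHardDiskPath

# Check that the virtual disk folder exists on disk.
Test-Path (Get-VMHost).VirtualHardDiskPath
```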

Creating Hyper-V Virtual Switch

Create an external switch that is connected to the physical NIC of the Hyper-V Server and enables VMs to interact with the physical network.

Check the SR-IOV (Single-Root Input/Output (I/O) Virtualization) support:

Get-NetAdapterSriov

Get the list of connected network adapters:

Get-NetAdapter | where {$_.status -eq "up"}

Connect your virtual switch to the network adapter and enable SR-IOV support if it is available.

Hint. You won’t be able to enable or disable SR-IOV support after you create the vSwitch; to change this parameter, you will have to re-create the switch.

New-VMSwitch -Name "External_network" -NetAdapterName "Ethernet 2" -EnableIov $true

Use these cmdlets to check your virtual switch settings:

Get-VMSwitch

Get-NetIPConfiguration -Detailed

This completes the initial setup of Windows Hyper-V Server 2016/2019. You can move on to create and configure your virtual machines.
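As a starting point for that next step, here is a hedged sketch of creating a first virtual machine. The VM name, memory size and disk size are hypothetical values; the disk path assumes the D:\HyperV\VHD folder created earlier, and the switch is looked up rather than named explicitly.

```powershell
# Hypothetical VM name; adjust the name and sizes to your environment.
$vmName = "TestVM"

# Use the first external virtual switch found on the host.
$switch = Get-VMSwitch -SwitchType External | Select-Object -First 1

# Parameters for a Generation 2 VM with a new dynamically expanding disk.
$vmParams = @{
    Name               = $vmName
    Generation         = 2
    MemoryStartupBytes = 2GB
    NewVHDPath         = "D:\HyperV\VHD\$vmName.vhdx"
    NewVHDSizeBytes    = 40GB
    SwitchName         = $switch.Name
}
New-VM @vmParams

# Confirm that the VM has been registered on the host.
Get-VM -Name $vmName
```

The VM still needs installation media (for example, an image from D:\ISO) before it can boot an operating system.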

Source:

http://woshub.com/install-configure-free-hyper-v-server/