Working in infrastructure has been a blast since I went down that route many years ago. One of the most enjoyable things in this line of work is learning about cool tech and playing around with it in a VMware homelab project for instance. Running a homelab involves sacrificing some of your free time and dedicating it to learning and experimenting.

Learning without a purpose is tricky, as motivation tends to fade quite quickly. For that reason, it is best to work towards a goal and use your own hardware for a VMware homelab project that will earn you a certification, give you material for interesting blog posts, automate things in your home, or follow a learning path towards a specific job or a different career track. When interviewing for engineering roles, companies are receptive to candidates who push the envelope to sharpen their skills and don’t fear investing time and money to get better.

This article is a bit different than usual as we, at Altaro, decided to have a bit of fun! We asked our section editors, authors, as well as third-party authors to talk about their homelabs. We set a rough structure regarding headlines to keep things consistent but we also wanted to leave freedom to the authors as VMware homelab projects are all different and serve a range of specific purposes.

Brandon Lee

In my honest opinion, a home lab is one of the best investments I have made in my learning and career goals. However, as the investment isn’t insignificant, why would I recommend owning and running a home lab environment? What do you use it for? What considerations should you make when purchasing equipment and servers?

Around ten years ago, I decided that having my own personal learning environment and sandbox would benefit all the projects and learning goals I had in mind. So, the home lab was born! Like many IT admins out there, my hobby and my full-time job are geeking out on technology. So, I wanted to have access at home to the same technologies, applications, and server software I use in my day job.

Why do you have a lab?

Like many, I started with a “part-time” VMware homelab project running inside VMware Workstation. So, the first hardware I purchased was a Dell Precision workstation with 32 gigs of memory. Instead of running vSphere on top of the hardware, I ran VMware Workstation. I believe this may have been before the VMUG Advantage subscription was available, or at least before I knew about it.

I would advise anyone thinking of owning and operating a home lab to start small. Running a lab environment inside VMware Workstation, Hyper-V, VirtualBox, or another solution is a great way to get a feel for the benefits of using a home lab environment. It may also be that a few VMs running inside VMware Workstation or another workstation-class hypervisor are all you need.

For my purposes, the number of workloads and technologies I wanted to play around with outgrew what I was able to do inside VMware Workstation. So, after a few years of running VMware Workstation on several other workstation-class machines, I decided to invest in actual servers. The great thing about a home lab is you are only constrained in its design by your imagination (and perhaps funds). Furthermore, unlike production infrastructure, you can redesign and repurpose along the way as you see fit. As a result, the home lab can be very fluid for your needs.

What’s your setup?

I have written quite a bit about my home lab environment, detailing hardware and software. On the hardware side of things, I am a fan of Supermicro servers. I have found the Supermicro kits to be very stable and affordable, and many are supported on VMware’s HCL for installing vSphere, etc.

Enclosure

- Sysracks 27U server enclosure

Servers

I have the following models of Supermicro servers:

- (4) Supermicro SYS-5028D-TN4T

  - Mini tower form factor

  - (3) are in a vSAN cluster

  - (1) is used as a standalone host in other testing

- (1) SYS-E301-9D-8CN8TP

  - Mini 1-U (actually 1.5 U) form factor

  - This host is used as another standalone host for various testing and nested labs

Networking

- Cisco SG350-28 – Top of rack switch for 1 gig connectivity with (4) 10 gig SFP ports

- Ubiquiti – Edgeswitch 10 Gig, TOR for Supermicro servers

- Cisco SG300-20 – Top of rack IDF

Storage

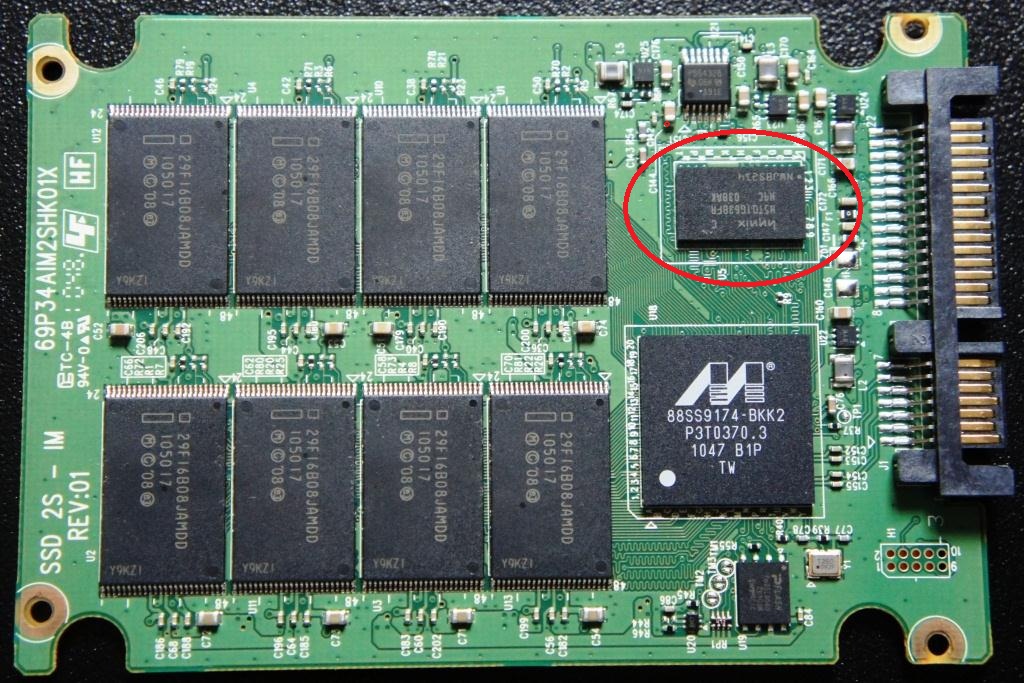

- VMFS datastores running on consumer-grade NVMe drives

- vSAN datastore running on consumer-grade NVMe drives, (1) disk group per server

- Synology Diskstation 1621xs+ – 30 TB of usable space

In terms of license requirements, I cannot stress enough how incredible the VMUG Advantage subscription is for obtaining real software licensing to run VMware solutions. It is arguably the most “bang for your buck” in terms of software you will purchase in your VMware homelab project. For around $200 (you can find coupons most of the year), you can access the full suite of VMware solutions, including vSphere, NSX-T, VMware Horizon, vRealize Automation, vRealize Operations, etc.

The VMUG Advantage subscription is how I started with legitimate licensing in the VMware home lab environment, and I have maintained a subscription ever since. You can learn more about the VMUG Advantage subscription here: VMUG Advantage Membership.

I use Microsoft Evaluation Center licensing for Windows, which is valid for 180 days and generally long enough for most of my lab scenarios.

What software am I running?

The below list is only an excerpt, as there are too many items, applications, and solutions to list. As I mentioned, my lab is built on top of VMware solutions. In it, I have the following running currently:

- vSphere 7.0 Update 3d with latest updates

- vCenter Server 7.0 U3d with the latest updates

- vSAN 7.0 Update 3

- vRealize Operations Manager

- vRealize Automation

- vRealize Network Insight

- VMware NSX-T

- Currently using Windows Server 2022 templates

- Linux templates are Ubuntu Server 21.10 and 20.04

- Running GitLab and Jenkins for CI/CD

  - I have a CI/CD pipeline process that I use to keep VM templates updated with the latest builds

  - I have written a post on that here: Automated Packer VMware vSphere templates with CI/CD Pipeline Build

Nested vSphere labs:

- Running vSAN nested labs with various configurations

- Running vSphere with Tanzu with various containers on top of Tanzu

- Running Rancher Kubernetes clusters

Do I leverage the cloud?

Even though I have a VMware homelab project, I do leverage the cloud. For example, I have access to AWS and Azure and often use these to build out PoC environments and services between my home lab and the cloud to test real-world scenarios for hybrid cloud connectivity for clients and learning purposes.

What does your roadmap look like?

I am constantly looking at new hardware and better equipment across the board on the hardware roadmap. It would be nice to get 25 gig networking in the lab environment at some point in the future. Also, I am looking at new Supermicro models with the refreshed Ice Lake Xeon-D processors.

On the software/solutions side, I am on a continuous path to learning new coding and DevOps skills, including new Infrastructure-as-Code solutions. Also, Kubernetes is always on my radar, and I continue to use the home lab to learn new Kubernetes skills. I want to continue building new Kubernetes solutions with containerized workloads in the home lab environment, which is on the agenda this year in the lab environment.

Any horror stories to share?

One of the more memorable homelab escapades involved accidentally wiping out an entire vSAN datastore because I had mislabeled two of my Supermicro servers. After reloading two of the servers, I realized I had rebuilt the wrong ones. Thankfully, I am the CEO, CIO, and IT Manager of the home lab environment, and I had backups of my VMs.

I like to light up my home lab server rack

One of the recent additions to the VMware homelab project this year has been the addition of LED lights. I ran LED light strips along the outer edge of my server rack and can change the color via remote or have the lights cycle through different colors on a timer. You can check out a walkthrough of my home lab environment (2022 edition with lights) on YouTube: VMware Home Lab Tour 2022 Edition Server Room with LED lights at night! A geek’s delight!

Rack servers for my VMware homelab project

Xavier Avrillier

VMware | DOJO Author & Section Editor

Why do you have a lab?

When I started my career in IT, I didn’t have any sort of lab and relied exclusively on the environment I had at work to learn new things and play around with tech. This got me started with running virtual machines in VMware Workstation at home, but computers back then (10 years ago) didn’t commonly come with 16GB of RAM, so I had to get crafty with resources.

When studying to take the VCP exam, things started to get a bit frustrating as running a vCenter with just 2 vSphere nodes on 16GB of RAM is cumbersome (and slow). At this point, I got lucky enough that I could use a fairly good test environment at work to delay the inevitable and managed to get the certification without investing a penny in hardware or licenses.

I then changed employers and started technical writing, so I needed more capacity to play around with, and resource needs pile up fast when you add vSAN, NSX, SRM and other VMware products into the mix. For that reason, I decided to get myself a homelab that would be dedicated to messing around. I started with Intel NUC mini-PCs like many of us and then moved to a more solid Dell rack server that I am currently running.

I decided to go the second-hand route as it was so much cheaper and I don’t really care about official support; newer software usually works unless it is on dinosaur hardware. I got a great deal on a Dell R430. My requirements were pretty simple: I basically needed lots of cores, memory, a fair amount of storage and an out-of-band card for when I’m not at home and need to perform power actions on it.

What’s your setup?

I am currently running my cluster labs nested on the R430 and run workloads natively in VMs when possible. For instance, I have the DC, NSX manager, VCD, and vCenter running in VMs on the physical host, but I have a nested vSAN cluster with NSX-T networking managed by this same vCenter server. This is the most consolidated way I could think of while offering flexibility.

- Dell R430

  - VMware vSphere ESXi 7 Update 3

  - 2 x Intel Xeon E5-2630 v3 (2 x 8 pCores @2.40GHz)

  - 128GB of RAM

  - 6 x 300GB 15K rpm in RAID 5 (1.5TB usable)

  - PERC H730 mini

  - Dual 550W power supply (only one connected)

  - iDRAC 8 enterprise license

  - I keep the firmware up to date with Dell OME running in a VM in VMware Workstation on my laptop that I fire up every now and again (when I have nothing better to do).

On the side, I also have a Gigabyte mini-PC running. That one is installed with Ubuntu Server with K3s (Kubernetes) running on it. I use it to run a bunch of home automation stuff that is managed by ArgoCD from a private GitHub repository (GitOps); that way I can track my changes through commits and pull requests. I also use it for CAPV to quickly provision Kubernetes (and Tanzu TCE) clusters in my lab.

- Gigabyte BSi3-6100

  - Ubuntu 20.04 LTS

  - Core i3 6th gen

  - 8GB of RAM

I also have an old Synology DS115j NAS (Network Attached Storage) that participates in the home automation stuff. It is also a target for vCenter backups and, using Altaro VM Backup, for a few VMs I don’t want to have to rebuild. It’s only 1TB but I am currently considering my options to replace it with a more powerful model with more storage.

Network-wise, all the custom stuff happens nested with OPNsense and NSX-T. I try to keep my home network as simple as possible if I don’t need to complicate it any further.

I currently don’t leverage any cloud services on a daily basis but I spin up the odd instance or cloud service now and again to check out new features or learn about new tech in general.

I try to keep my software and firmware as up-to-date as possible. However, it tends to depend on what I’m currently working on or interested in. I haven’t touched my Horizon install in a while but I am currently working with my NSX-T + ALB + VCD + vSAN setup to deploy a Kubernetes cluster with Cluster API.

“VMware homelab project architecture”

What do you like and don’t like about your setup?

I like that I have a great deal of flexibility by having a pool of resources that I can consume with nested installs or native VMs. I can scratch projects and start over easily.

However, I slightly underestimated storage requirements and 1.5TB is proving a bit tricky as I have to really keep an eye on it to avoid filling it up. My provisioning ratio is currently around 350% so I don’t want to hit the 100% used space mark. And finding spare 15K SAS disks isn’t as easy as I’d hoped.
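To put that 350% figure into numbers, here is a minimal Python sketch. It assumes the provisioning ratio means thin-provisioned space divided by usable datastore capacity; the function name is made up for illustration.

```python
def provisioned_capacity_tb(usable_tb: float, provisioning_ratio_pct: float) -> float:
    """Total thin-provisioned space implied by a provisioning ratio.

    Assumption: ratio = provisioned space / usable capacity, as a percentage.
    """
    return usable_tb * provisioning_ratio_pct / 100.0


usable_tb = 1.5      # usable datastore capacity quoted above
ratio_pct = 350.0    # approximate provisioning ratio quoted above

provisioned_tb = provisioned_capacity_tb(usable_tb, ratio_pct)
overcommitted_tb = provisioned_tb - usable_tb

print(f"Provisioned: {provisioned_tb:.2f} TB")
print(f"Promised beyond physical capacity: {overcommitted_tb:.2f} TB")
```

In other words, the VMs could in theory grow to several times what the disks can actually hold, which is why the used space needs watching.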

What does your roadmap look like?

As mentioned, I’m reaching a point where storage can become a bottleneck as interoperable VMware products require more and more resources (NSX-T + ALB + Tanzu + VCD …). I could add a couple of disks but that would only add 600GB of storage and I’ll have to find 15K rpm 300GB disks with caddies so not an easy find. For that reason, I’m considering getting a NAS that I can then use as NFS or iSCSI storage backend with SSDs.
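The 600GB figure follows from how RAID 5 works: one disk's worth of space goes to parity and the rest is usable. A small sketch of that arithmetic, assuming the two extra disks would be added to the existing RAID 5 set (the helper name is hypothetical):

```python
def raid5_usable_gb(disk_count: int, disk_size_gb: int) -> int:
    """Usable capacity of a RAID 5 set: one disk's worth is consumed by parity."""
    if disk_count < 3:
        raise ValueError("RAID 5 needs at least three disks")
    return (disk_count - 1) * disk_size_gb


current_gb = raid5_usable_gb(6, 300)    # the existing 6 x 300GB set
expanded_gb = raid5_usable_gb(8, 300)   # assumption: two more disks in the same set

print(f"Current usable capacity: {current_gb} GB")
print(f"After adding two disks: {expanded_gb} GB")
print(f"Gain: {expanded_gb - current_gb} GB")
```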

Things I am currently checking out include VMware Cloud Director with NSX-T and ALB integration and Kubernetes on top of all that. I’d also like to get to grips with CI/CD pipelines and other cloud-native stuff.

Any horror stories to share?

The latest to date was my physical ESXi host running on a consumer-grade USB key plugged in the internal USB port, which got fried (the USB key) after a few months of usage. My whole environment was running on this host and I had no backup then. But luckily, I was able to reinstall it on a new USB key (plugged in the external port) and re-register all my resources one by one manually.

Also, note that I am incredibly ruthless with my home lab. I only turn it on when needed. So, when I am done with it, none of that proper shutdown sequence, thanks very much. I trigger the shutdown of the physical host from vCenter, which takes care of stopping the VMs, and sometimes I even push the actual physical button (yes, there’s one). While I somehow haven’t nuked anything that way, I would pay to see my boss’s face should I stop production hypervisors with the button!

Ivo Beerens

Why do you have a lab?

The home lab is mainly used for learning, testing new software versions, and automating new image releases. I started down this journey back in the Novell NetWare 3.11 era with a first home lab that I bought with my own money, with no subsidy from my employer.

My main considerations and decision points for what I decided to purchase were low noise, low power consumption for running 24×7, room for PCI-Express cards and NVMe support.

What’s your setup?

From a hardware standpoint, computing power is handled by two Shuttle barebone machines with the following specifications:

- 500 W 80 Plus Silver PSU

- Intel Core i7 8700 with 6 cores and 12 threads

- 64 GB memory

- Samsung 970 EVO 1 TB m.2

- 2 x 1 GbE Network cards

- Both barebones are running the latest VMware vSphere version.

In terms of storage, I opted for a separate QNAP TS-251+ NAS with two Western Digital (WD) Red 8 TB disks in a RAID-1 configuration. The barebone machines have NVMe drives with no RAID protection.

The bulk of my workloads are hosted on VMware vSphere and for the VDI solution, I run VMware Horizon with Windows 10/11 VDIs. Cloud-wise, I use an Azure Visual Studio subscription for testing IaaS and Azure Virtual Desktop services.

I manage the environments by automating as much as possible using Infrastructure as Code (IaC). I automated the installation process of almost every part so I can start over from scratch whenever I want.

What do you like and don’t like about your setup?

I obviously really enjoy the flexibility that automation brings to the table. However, the lack of resources (max 128 GB) can sometimes be a limiting factor. I also miss having remote management boards such as HPE iLO, Dell iDRAC or a KVM switch to facilitate hardware operations.

What does your roadmap look like?

I currently plan to upgrade to a 10 GbE switch and bump the memory to 128GB per barebone.

Paolo Valsecchi

Why do you have a lab?

I am an IT professional and I often find myself in the situation of implementing new products and configurations without having the right knowledge or tested procedures at hand. Since it is a bad idea to experiment with things directly on production environments, having a lab is the ideal solution to learn, study, and practice new products or test new configurations without the hassle of messing up critical workloads.

Because I’m also a blogger, I study and test procedures to publish them on my blog. This required a better test environment than what I had. Since my computer didn’t have enough resources to allow complex deployments, in 2015 I decided to invest some money and build my own home lab.

It was clear that the ideal lab was not affordable due to high costs. For that reason, I decided to start with a minimum set of equipment to extend later. It took a while before finding the configuration that met the requirements. After extensive research on the Internet, I was finally able to complete the design by comparing other lab setups.

My requirements for the lab were simple: low power, cost-effective hardware, acceptable performance, at least two nodes, one external storage device, compatibility with the platforms I use, and compact components.

What’s your setup?

Despite my lab still meeting my requirements, it is starting to be a little bit obsolete now. My current lab setup is the following:

- PROD Servers: 3 x Supermicro X11SSH-L4NF

  - Intel Xeon E3-1275v5

  - 64GB RAM

  - 2TB WD Red

- DR Server: Intel NUC NUC8i3BEH

  - Intel Core i3-8109U

  - 32GB RAM

  - Kingston SA1000M8 240G SSD A1000

- Storage PROD: Synology DS918

  - 12TB WD Red RAID5

  - 250GB read/write cache

  - 16GB RAM

- Storage Backup: Synology DS918

  - 12TB WD Red RAID5

  - 8GB RAM

- Storage DR: Synology DS119j + 3TB WD Red

- Switch: Cisco SG350-28

- Router: Ubiquiti USG

- UPS: APC 1400

The lab is currently composed of a three-node cluster running VMware vSphere 7.0.2 with vSAN as main storage. Physical shared storage devices are configured with RAID 5 and connected to vSphere or backup services via NFS or dedicated LUNs.

Installed Windows Servers are running version 2016 or 2019 while Linux VMs belong to different distributions and versions may vary.

My lab runs different services, such as:

- VMware vSphere and vSAN

- Active Directory, ADFS, Office 365 sync

- VMware Horizon

- Different backup solutions (at least 6 different products including Altaro)

In terms of Cloud service, I use cloud object storage (S3 and S3-compatible) solutions for backup purposes. I also use Azure to manage services such as Office 365, Active Directory and MFA. Due to high costs, workloads running on AWS or Azure are just created on-demand and for specific tests.

I try to keep the software always up-to-date with in-place upgrades, except for Windows Server which I always reinstall. Only once did I have to wipe the lab due to hardware failure.

What do you like and don’t like about your setup?

With my current setup, I’m able to run the workloads I need and do my tests. Let’s say I’m satisfied with my lab, but…

The vSAN capacity disks are not SSDs (only the cache is), the RAM installed on each host is limited to 64GB and the network speed is 1 Gbps. These constraints affect both performance and the number of machines I can run, and those machines keep demanding more and more resources.

What does your roadmap look like?

To enhance my lab, the replacement of HDDs with SSDs is the first step in my roadmap. Smaller physical servers to better fit in my room as well as a 10 Gbps network would be the icing on the cake. Unfortunately, this means replacing most of the installed hardware in my lab.

Any horror stories to share?

After moving my lab from my former company to my house, the original air conditioning system in use during the very first days was not so good and a hot summer was fatal to my hardware… the storage holding all my backups failed, and I lost a lot of important VMs. Sadly, I had deleted those VMs from the lab just a few days earlier. I spent weeks re-creating all the VMs! I now have a better cooling system and a stronger backup strategy (3-2-1!).
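For readers who haven't met it, the 3-2-1 rule means keeping at least three copies of your data, on at least two different types of media, with at least one copy off-site. A minimal Python sketch of that check follows; the copy names and media labels are hypothetical examples, not a description of the lab above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    name: str      # free-text label for the copy
    media: str     # type of storage holding the copy
    offsite: bool  # True if the copy lives outside the home


def satisfies_3_2_1(copies: list[BackupCopy]) -> bool:
    """Check the 3-2-1 rule: 3+ copies, 2+ media types, 1+ off-site copy."""
    enough_copies = len(copies) >= 3
    enough_media = len({copy.media for copy in copies}) >= 2
    has_offsite = any(copy.offsite for copy in copies)
    return enough_copies and enough_media and has_offsite


# Hypothetical plan: production data, a local NAS backup and a cloud copy.
plan = [
    BackupCopy("production VMs", "vsan", offsite=False),
    BackupCopy("backup repository", "nas", offsite=False),
    BackupCopy("object storage copy", "cloud-object-storage", offsite=True),
]

print("3-2-1 compliant:", satisfies_3_2_1(plan))
```

A plan where every copy sits on the same device, or in the same room, fails the check, which is exactly the failure mode described in the story above.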

Mayur Parmar

Why do you have a lab?

I use my home lab primarily for testing various products to explore new features and functionality that I’d never played with before. This greatly helps me in learning about the product as well as testing it.

I decided to go for a Home Lab 4 years ago because of the complete flexibility and control you have over your own environment. You can easily (or not) deploy, configure and manage things yourself. I bought my Dell Workstation directly from Dell by customizing its configuration according to my needs and requirements.

The first thing I considered was whether it should be bare metal with Rack servers, Network Switches and Storage devices or simply nested virtualization inside VMware Workstation. I went for the nested virtualization route for flexibility and convenience and sized the hardware resources according to what I needed at the time.

What’s your setup?

My home lab is pretty simple. It is made up of a Dell Workstation, a TP-Link switch and a portable hard drive.

Dell Workstation:

- Dell Precision Tower 5810

- Intel Xeon E5-2640v4 10 Core processor

- 96 GB of DDR4 Memory

- 2x1TB of SSDs

- 2 TB of Portable hard drive

- Windows 10 with VMware Workstation

At the moment, I run a variety of VMs such as ESXi hosts, AD-DNS, backup software, a mail server and a number of Windows and Linux boxes. Because all VMs run on VMware Workstation, no additional network configuration is required as all VMs can interact with each other on virtual networks.

Since my home lab is on VMware Workstation, it gives me the flexibility to keep up-to-date versions as well as older versions to test and compare features, for instance. Because it runs in VMware Workstation, I often get to wipe out and recreate the complete setup. Whenever newer versions are released, I always upgrade to try out new features.

What do you like and don’t like about your setup?

I like the flexibility VMware Workstation gives me to set things up easily and scratch them just as easily.

On the other hand, there are a number of things I can’t explore, such as setting up solutions directly on the physical server, working on firmware, configuring storage and RAID levels, configuring networking, routing and so on.

What does your roadmap look like?

Since I bought my Dell Workstation, I constantly keep an eye on the resources to avoid running out of capacity. In the near future, I plan to continue with that trend but I am considering buying a new one to extend the capacity.

However, I am currently looking at buying a NAS device to provide shared storage capacity to the compute node(s). While I don’t use any just now, my future home lab may include cloud services at some point.

Any horror stories to share?

A few mistakes I made in the home lab include failing to create DNS records before deploying a solution, a messed-up vCenter upgrade which required deploying new vCenter servers, and a failed Standard Switch to Distributed Switch migration which caused a network outage and required resetting the whole networking stack.

Simon Cranney

Why do you have a lab?

A couple of years ago I stood up my first proper VMware home lab project. I had messed about with running VMware Workstation on a gaming PC in the past, but this time I wanted something I could properly get my teeth into and have a VMware vSphere home lab without resource contention.

Prior to this, I had no home lab. Many people that are fortunate to work in large enterprise infrastructure environments may be able to fly under the radar and play about with technologies on work hardware. I can neither confirm nor deny if this was something I used to do! But hey, learning and testing new technologies benefits the company in the long run.

What’s your setup?

Back to the current VMware home lab then. I had a budget in mind so I ended up going with a pair of Intel NUC boxes, each with 32 GB RAM and a 1 TB PCIe NVMe SSD.

The compute and storage are used to run a fairly basic VMware vSphere home lab setup. I have a vCenter Server as you’d expect, a 2-node vSAN cluster, and vRealize Operations Manager, with a couple of Windows VMs running Active Directory and some different applications depending on what I’m working on at any given point in time.

My VMware home lab licenses are all obtained free of charge through the VMware vExpert program but there are other ways of accessing VMware home lab licenses such as through the VMUG Advantage membership or even the vSphere Essentials Plus Kit. If you are building a VMware home lab though, why not blog about it and shoot for the VMware vExpert application?

In terms of networking, I’ve put in a little more effort! Slightly out of scope here, but in a nutshell:

- A mini rack with the Ubiquiti UniFi Dream Machine Pro

- A UniFi PoE switch

- A number of UniFi Access Points providing full house and garden coverage

I separate out homelab and trusted devices onto an internal network, partner and guest devices onto an external network, and smart devices or those that like to listen onto a separate IoT network. Each network is backed by a different VLAN and associated firewall rules.
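As an illustration of that segmentation, the mapping from device category to network can be written down as a small lookup table. This is only a sketch: the VLAN IDs and category names below are invented for the example and are not taken from the actual setup.

```python
# Hypothetical VLAN plan mirroring the three networks described above.
# The VLAN IDs (10, 20, 30) are assumptions chosen for illustration only.
NETWORKS = {
    "internal": {"vlan_id": 10, "devices": {"homelab", "trusted"}},
    "external": {"vlan_id": 20, "devices": {"partner", "guest"}},
    "iot": {"vlan_id": 30, "devices": {"smart"}},
}


def network_for(device_category: str) -> str:
    """Return the name of the network a device category belongs to."""
    for name, network in NETWORKS.items():
        if device_category in network["devices"]:
            return name
    raise KeyError(f"No network defined for category: {device_category}")


for category in ("homelab", "guest", "smart"):
    name = network_for(category)
    print(f"{category} -> {name} (VLAN {NETWORKS[name]['vlan_id']})")
```

Keeping the plan in one table like this makes it easier to see which firewall rules each network needs before touching the controller.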

What do you like and don’t like about your setup?

Being 8th Generation, the Intel NUC boxes caused me some pain when upgrading to vSphere 7. I used the Community Network Driver for ESXi Fling and played about adding some USB network adapters to build out distributed switches.

I’m also fortunate enough to be running a VMware SD-WAN (VeloCloud) Edge device, which plugs directly into my work docking station and optimizes my corporate network traffic for things like Zoom and Teams calls.

What does your roadmap look like?

In the future, I’d like to connect my VMware home lab project to some additional cloud services, predominantly in AWS. This will allow me to deep dive into technologies like VMware Tanzu, by getting hands-on with the deployment and configuration.

Whilst VMware Hands-on Labs are an excellent resource, like many techies I do find that the material sticks and resonates more when I have had to figure out integrations and fixes in a real-life environment. I hope you found my setup interesting. I’d love to hear in the comments section if you’re running VMware Tanzu in your home lab and from any other UniFi fans!

Get More Out of Your Homelab

It is always fun to discuss home labs and discover how your peers do it. It’s a great way to share “tips and tricks” and to learn from the success and failures of others. Hardware is expensive and so is electricity, real estate to store it and so on.

Learn how to design on a budget for the VMware homelab building process

For these reasons and many others, you should ask yourself a few questions before even looking at home lab options to better steer your research towards something that will fit your needs:

- Do I need hardware, cloud services or both? On-premises hardware involves investing a chunk of money at the beginning but it means you are in total control of the budget as electricity will be the only variable from then on. On the other hand, cloud services will let you pay for only what you use. It can be very expensive but it could also be economical under the right circumstances. Also, some of you will only require Azure services because it’s your job, while I couldn’t run VMware Cloud Director, NSX-T and ALB in the cloud.

- Do you have limited space or noise constraints? Rack and tower servers are cool, but they are bulky and loud. A large number of IT professionals went for small, passive and silent mini-PCs such as the Intel NUC. It grew in popularity after William Lam from VMware endorsed it and network drivers for the USB adapters were released as Flings. These small form factor machines are great and offer pretty good performance with i3, i5 or i7 processors. You can get a bunch of these to build a cluster that won’t use up much energy and won’t make a peep.

- Nested or Bare-Metal? Another question that is often asked is if you should run everything bare-metal. I personally like the flexibility of nested setups but it’s also because I don’t have the room for a rack at home (and let’s face it, I would get bad looks!). However, as you saw in this blog, people go for one or the other for various reasons and you will have to find yours.

- What do you want to get out of it? If you are in the VMware dojo, you most likely are interested in testing VMware products, meaning vSphere will probably be your go-to platform, in which case you will have to think about licenses. Sure, you can use evaluation licenses but you’ll have to start over every 60 days, which is not ideal at all. The vExpert program and the VMUG Advantage program are your best bets in this arena. On the other hand, if you are only playing with open-source software, you can install Kubernetes, OpenStack or KVM on bare metal for instance and you won’t have to pay for anything.

- How many resources do you need? This question goes hand in hand with the next one. Playing around with vSphere, vCenter or vSAN won’t set you back that much, but if you want to get into Cloud Director, Tanzu, NSX-T and the like, you will find that they eat up CPU, memory and storage for breakfast. So, try to look at the resource requirements for the products you want to test in order to get a rough idea of what you will need.

- What is your budget? Now the tough question: how much do you want to spend on hardware and energy (which links back to small form factor machines)? It is important to set yourself a budget and not just start buying stuff for the sake of it (unless you have the funds). Home lab setups are expensive and, while you might get a 42U rack full of servers for cheap on the second-hand market, your energy bill will skyrocket. On the other hand, a very cheap setup will still cost you money, and you may not get anything from it due to hardware limitations. So set yourself a budget and try to find the sweet spot.

- Check compatibility: Again, don’t jump in guns blazing at the first offer. Double-check that the hardware is compatible with whatever you want to evaluate. Sure, it is likely to work even if it isn’t in the VMware HCL, but it is always worth it to do your research to look for red flags before buying.

Those are only a few key points I could think of but I’d be happy to hear about yours in the comments!
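To make the energy part of the budget question tangible, here is a rough Python sketch for estimating a yearly electricity bill. The wattages and the price per kWh are placeholder assumptions, so plug in measured power draw and your own tariff.

```python
def annual_energy_cost(avg_watts: float, price_per_kwh: float,
                       hours_per_day: float = 24.0) -> float:
    """Estimate the yearly electricity cost of a lab at a constant average draw."""
    kwh_per_year = avg_watts / 1000.0 * hours_per_day * 365
    return kwh_per_year * price_per_kwh


# Placeholder figures for illustration only, not measurements.
PRICE_PER_KWH = 0.30       # assumed tariff, in your local currency
RACK_SERVER_WATTS = 400.0  # assumed average draw of a second-hand rack setup
MINI_PC_WATTS = 60.0       # assumed average draw of a small mini-PC cluster

rack_cost = annual_energy_cost(RACK_SERVER_WATTS, PRICE_PER_KWH)
mini_cost = annual_energy_cost(MINI_PC_WATTS, PRICE_PER_KWH)
part_time_cost = annual_energy_cost(RACK_SERVER_WATTS, PRICE_PER_KWH, hours_per_day=4)

print(f"Rack servers, 24x7: {rack_cost:.2f} per year")
print(f"Mini-PCs, 24x7: {mini_cost:.2f} per year")
print(f"Rack servers, 4 hours a day: {part_time_cost:.2f} per year")
```

Running the numbers both ways shows why some of the authors above only power on their lab when they need it.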

Is a VMware Homelab Worth it?

We think that getting a home lab is definitely worth it. While the money aspect might seem daunting at first, investing in a home lab is investing in yourself. The wealth of knowledge you can get from 16 cores/128GB servers is lightyears away from running VMware Workstation on your 8 cores/16GB laptop. Even though running products in a lab isn’t real-life experience, this might be the differentiating factor that gets you that dream job you’ve been after. And once you get it, the $600 you spent for that home lab will feel like money well spent with a great ROI!

VMware Homelab Alternatives

However, if your objective is to learn about VMware products in a guided way and you are not ready to buy a home lab just yet for whatever reason, fear not, online options are there for you! You can always start with the VMware Hands-on Labs (HOL), which offer a large number of learning paths where you can get to grips with most of the products sold by the company. Many of them you couldn’t even test in your home lab (especially the cloud ones like Carbon Black or Workspace ONE). Head over to https://pathfinder.vmware.com/v3/page/hands-on-labs and register for Hands-on Labs to start learning instantly.

The other option to run a home lab for cheap is to install VMware Workstation on your local machine if you have enough resources. This is, in almost 100% of cases, the first step before moving to a more serious and expensive setup.

To protect your VMware environment, Altaro offers the ultimate VMware backup service to quickly and securely back up and replicate your virtual machines. We work hard to give our customers confidence in their backup strategy.

Plus, you can visit our VMware blog to keep up with the latest articles and news on VMware.

What Homelab Setup is Right for You?

I think we will all agree that our work doesn’t fit within the traditional 9-to-5 as keeping our skills up is also part of the job and it can’t always be done on company time. Sometimes we’ll be too busy or it might just be that we want to learn about something that has nothing to do with the company’s business. Home labs aren’t limited to VMware or Azure infrastructure and what your employer needs. You can put them to good use by running overkill Wi-Fi infrastructures or by managing your movie collection with an enterprise-grade and highly resilient setup that many SMBs would benefit from. The great thing about it is that it is useful on a practical and personal level while also being good fun (if you’re a nerd like me).

Gathering testimonies about VMware homelab projects and discussing each other’s setup has been a fun and very interesting exercise. It is also beneficial to see what is being done out there and identify ways to improve and optimize our own setup. I now know that I need an oversized shared storage device in my home (this will be argued)!

Now we would love to hear about the VMware homelab project you run at home. Let’s have a discussion in the comments section!

Source:

https://www.altaro.com/vmware/perfect-homelab-vmware/