Something has to be readable for machines and humans to understand it, right? Easy-to-read content has a greater chance of success as more people tend to understand it quickly. The same goes for machines — search engines rely on structured data to help them understand the meaning of your pages. In Yoast SEO 19.3, we’re bringing readability improvements to both humans and machines.

Schema structured data in Yoast SEO 19.3

You probably know the importance of structured data: search engines use it to grasp your content. They use those insights to determine whether your content is eligible for a rich result, which highlights it visually in the search results. But schema does other things as well.

A better way to handle images in the schema

In Yoast SEO 19.3, we’re improving how we handle images in our schema. If you want the proper pictures to show on your different output channels, you must be sure that search engines can find the right ones. That’s why we’ve changed how we do this.

Previously, we relied on the Open Graph image and Twitter image. The thing is, these often contain text to help them stand out on social media. On Google Discover, text on an image is not helpful and might hinder the performance of your post. Now, we output the textless featured image as the first image for search engines to use. That way, services like Google Discover can pick the right image, which increases the chance that your content will do well there.
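To make the ordering idea concrete, here is a minimal sketch in Python. It is not the plugin's code: the function name `order_schema_images` is hypothetical, and falling back to the social images when there is no featured image is our assumption for illustration.

```python
from typing import List, Optional


def order_schema_images(
    featured: Optional[str],
    open_graph: Optional[str],
    twitter: Optional[str],
) -> List[str]:
    """Return image URLs with the featured image first.

    Assumption: social images are kept only as fallbacks, and
    duplicates or missing values are skipped.
    """
    ordered: List[str] = []
    for url in (featured, open_graph, twitter):
        if url and url not in ordered:
            ordered.append(url)
    return ordered


images = order_schema_images(
    featured="https://example.com/uploads/post-photo.jpg",
    open_graph="https://example.com/uploads/post-social-card.jpg",
    twitter=None,
)
print(images[0])  # the featured image comes first
```

The point of the sketch is only the priority: the image without overlaid text is offered to search engines before any social card.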

More robust handling of the webpage’s schema id

Yoast SEO comes with a thorough structured data implementation. From the start, we’ve been advocating the use of the @id to tie all the different parts of a site together in one schema graph. In Yoast SEO 19.3, we’re changing the @id of the main schema WebPage node to be just the permalink for the current page. Doing this makes it easier for other plugins to build on our work.
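A small Python sketch can show why a predictable @id helps. It builds a simplified JSON-LD graph in which the WebPage node's @id is the permalink, so another node can point at it without guessing a suffix. The helper `build_graph`, the example.com URLs, and the `#website` and `#article` identifiers are illustrative assumptions, not the plugin's exact output.

```python
import json


def build_graph(permalink: str, site_url: str, title: str) -> dict:
    """Build a simplified schema graph tied together by @id references."""
    website_id = site_url.rstrip("/") + "/#website"  # assumed identifier
    return {
        "@context": "https://schema.org",
        "@graph": [
            {"@type": "WebSite", "@id": website_id, "url": site_url},
            {
                "@type": "WebPage",
                "@id": permalink,  # just the permalink, no fragment
                "url": permalink,
                "name": title,
                "isPartOf": {"@id": website_id},
            },
            {
                # A node another plugin might add: it only needs the
                # permalink to attach itself to the page.
                "@type": "Article",
                "@id": permalink + "#article",  # assumed identifier
                "mainEntityOfPage": {"@id": permalink},
            },
        ],
    }


graph = build_graph("https://example.com/hello-world/", "https://example.com/", "Hello world")
print(json.dumps(graph, indent=2))
```

Because the WebPage @id equals the URL, any code that knows the permalink can reference the page node directly.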

Read our schema developer documentation to learn about our schema philosophy and best practices.

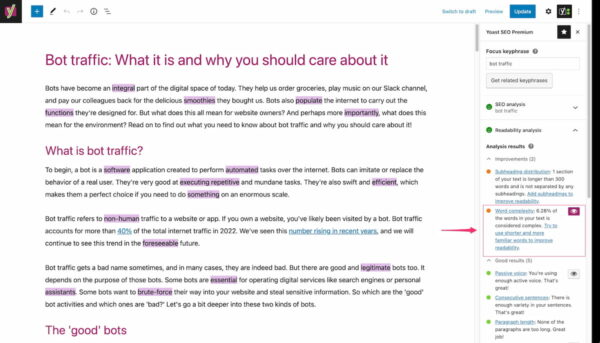

Yoast SEO Premium: New word complexity assessment to grade content

The readability analysis in Yoast SEO helps you to write content that is easy to read and quick to understand. We see excellent readability as a fundamental human right online. Sometimes, people accuse us of dumbing down content, but we like to turn that around — by making your content easier to read, you open it up for a lot more people.

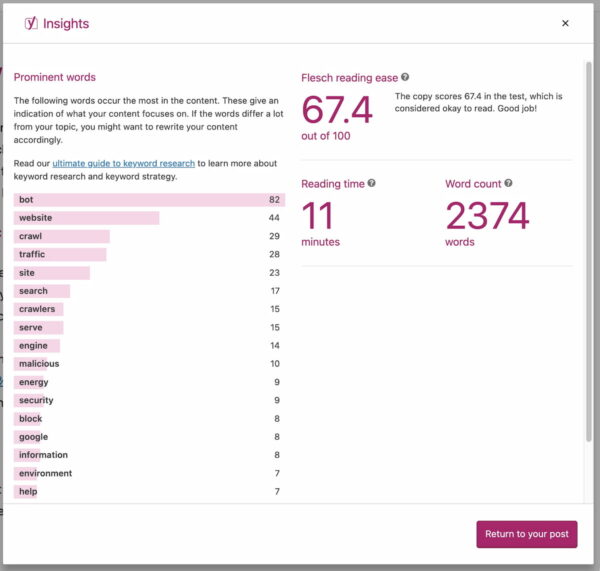

For years, we used the Flesch Reading Ease score to give you a sense of how difficult a text would be to understand for readers of different levels. This reading score works well, but it’s hard to turn into concrete actions. That’s why we’re introducing a new word complexity assessment that scans your content to see if you use too many complex words.

Word complexity is in beta and English only for now

One of the advantages of the complex word assessment is that it’s actionable. We can mark words that are complex according to our definition. The words we recognize as complex are, for the most part, complicated words that you might want to reconsider. By marking them in the text, you can easily change these to a more common alternative.
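The post does not spell out the definition of a complex word, so the Python sketch below uses a stand-in rule purely for illustration: a word counts as complex when it is longer than seven characters and is not in a small list of frequent words. The function name, the length threshold, and the word list are all hypothetical.

```python
import re
from typing import Iterable, List

# Hypothetical list of frequent long words that should not be flagged.
COMMON_WORDS = {"different", "important", "understand", "something"}


def find_complex_words(
    text: str,
    common_words: Iterable[str] = COMMON_WORDS,
    max_length: int = 7,
) -> List[str]:
    """Return unique words that are long and not in the frequent-word list."""
    common = {w.lower() for w in common_words}
    found: List[str] = []
    for word in re.findall(r"[A-Za-z']+", text):
        lowered = word.lower()
        if len(lowered) > max_length and lowered not in common and lowered not in found:
            found.append(lowered)
    return found


sample = "We utilize something important to ameliorate readability."
print(find_complex_words(sample))
```

Returning the words themselves, rather than a single score, is what makes this kind of check actionable: each flagged word can be highlighted and swapped for a simpler one.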

Of course, we still highlight some words that aren’t that difficult. Also, you might be in a situation where your keyphrase is considered a complex word. In rare cases, this can lead to conflicting feedback. That is one of the reasons we’re releasing the word complexity feature in beta, in Yoast SEO Premium, and for English only.

Flesch Reading Ease score moved to Insights tab

In Yoast SEO 19.3, you’ll notice that the Flesch Reading Ease score is no longer available in the readability section as it’s been replaced by the word complexity feedback. We haven’t removed it, but we’ve moved it to the Insights tab. Here, you’ll find the score and some other excellent insights into your content, like the word count, reading time, and the prominent words feature.
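For reference, the standard English Flesch Reading Ease formula is 206.835 - 1.015 × (words per sentence) - 84.6 × (syllables per word). The Python sketch below applies it to counts you supply yourself. How the plugin counts sentences and syllables, and whether it adjusts the formula per language, is not covered here, so treat this as the textbook formula rather than the plugin's implementation.

```python
def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Compute the standard English Flesch Reading Ease score from raw counts.

    Higher scores indicate text that is easier to read.
    """
    if words <= 0 or sentences <= 0:
        raise ValueError("words and sentences must be positive")
    words_per_sentence = words / sentences
    syllables_per_word = syllables / words
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


# 100 words in 5 sentences with 150 syllables in total.
print(round(flesch_reading_ease(100, 5, 150), 3))
```

The formula shows why the score is hard to act on: it reports averages over the whole text and does not tell you which sentence or word to change.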

Enhancement to the crawl settings

The past two releases of Yoast SEO Premium saw the introduction and expansion of our new crawl settings. With these crawl settings, you can get better control over what search engines crawl and don’t crawl on your site. This is designed to help you decrease the baggage that WordPress comes with out of the box.

We’re not done with the crawl settings, because we have many ideas to improve and expand them. In Yoast SEO Premium 18.9, we’re improving the handling of RSS feeds. We now add a canonical HTTP header to RSS feeds that points to their parent URL (for instance, your homepage or a specific category or tag), so the feeds are less likely to appear in search results.
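A canonical HTTP header is a `Link` response header with `rel="canonical"`. As a rough sketch of the idea, the Python below derives a parent URL from a feed URL and formats that header. It assumes the common WordPress pattern where a feed URL is the parent URL followed by `/feed/`; the function names are hypothetical and this is not the plugin's code.

```python
from typing import Tuple
from urllib.parse import urlsplit, urlunsplit


def parent_url_for_feed(feed_url: str) -> str:
    """Strip a trailing /feed/ segment to get the parent URL (assumed pattern)."""
    parts = urlsplit(feed_url)
    path = parts.path.rstrip("/")
    if path.endswith("/feed"):
        path = path[: -len("/feed")]
    return urlunsplit((parts.scheme, parts.netloc, path + "/", "", ""))


def canonical_link_header(feed_url: str) -> Tuple[str, str]:
    """Return the (name, value) pair for a canonical Link header."""
    return ("Link", f'<{parent_url_for_feed(feed_url)}>; rel="canonical"')


name, value = canonical_link_header("https://example.com/category/news/feed/")
print(f"{name}: {value}")
```

Sent with the feed response, this header tells search engines that the category page, not the feed, is the version to show in results.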

Update now to Yoast SEO 19.3

This is just a sampling of the changes and fixes in Yoast SEO 19.3. We have structured data updates, a new word complexity assessment in Yoast SEO Premium 18.9, improvements to the crawl settings, and more. Go download it now!

Source: https://yoast.com/yoast-seo-july-12-2022/

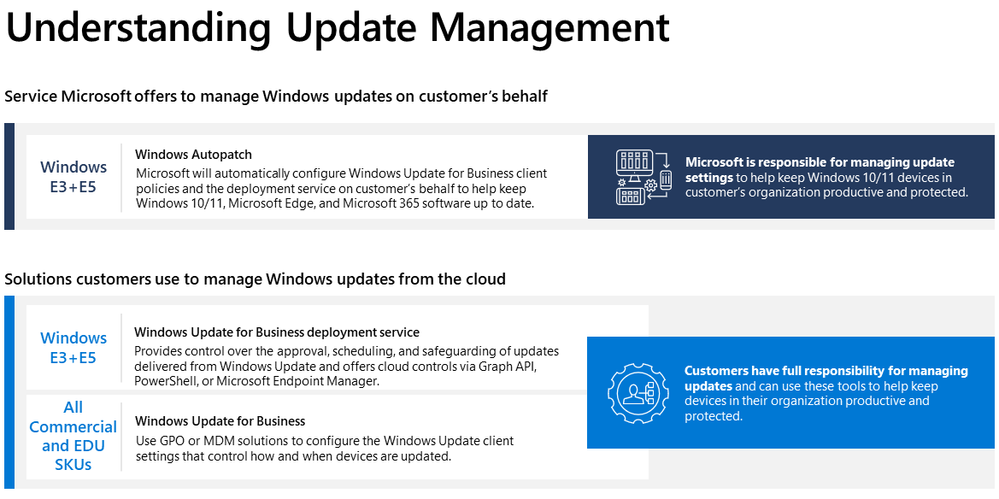

Windows Autopatch is a service that uses the Windows Update for Business solutions on your behalf.
